Executive summary

Better early identification of students struggling with mathematics is a critical step in addressing underachievement

- Evidence shows virtually all students can reach proficiency in mathematics, if they receive systematic and high-quality instruction. But data from national and international testing shows too many Australian students are not meeting proficiency benchmarks. Those who fall behind often do so early in their school experience and rarely catch up.

- Successive reviews have advocated for better assessment tools for early identification of students at risk and subsequent intervention. In particular, screening tools administered to all students can ‘flag’ those who, without additional support, are at risk of later difficulties with mathematics. For students needing additional support, the chances of positive outcomes are significantly higher when intervention is early and evidence-based.

- For intervention outcomes to be improved, a universal and systematic approach is needed for the early years of school. Effective early maths screening — particularly through a universal numeracy screener in Year 1 — could improve the opportunity for Australian students to be confident and successful in the subject.

Effective early screening measures should focus on robust models of number sense

- There are several early markers of students’ likelihood to experience difficulty in mathematics, including malleable skills such as ‘number sense’.

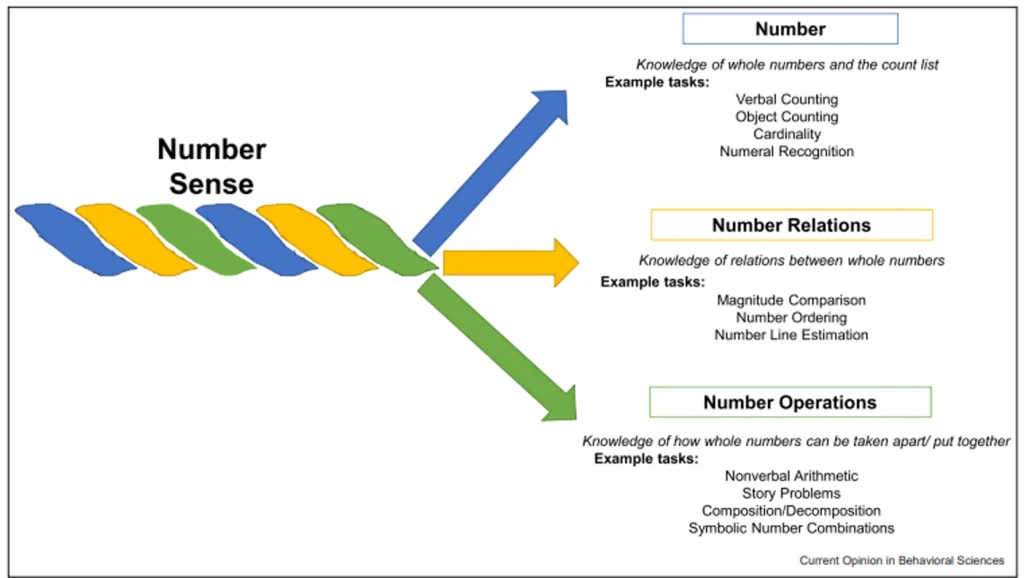

- Number sense represents a body of core knowledge about whole numbers, which predicts mathematics achievement and underlies the development of more complex mathematical skills and knowledge. Number sense encompasses the three domains of number (including saying, reading, and writing numbers), number relations (comparing and understanding numbers in terms of ‘more’ and ‘less’) and number operations (understanding and facility with addition and subtraction).

- Number sense is ‘teachable’ and students who receive quality early interventions in number sense can experience significant and lasting benefits.

- However, awareness of, and screening for, these key foundational skills is not systematically implemented in Australian schools. This means students at risk are not consistently identified early enough to maximise their chance of success.

Current student assessments in Australia do not meet adequate standards for universal screening

- Evidence shows effective maths screening approaches have some characteristics in common. Mathematics screeners must be efficient, reliable and directly inform teaching practice. Importantly, they must be designed to reflect research about the skills and knowledge that are most predictive of future maths success, so the right children are identified for additional support. Screening tools must classify children as ‘at-risk’ or ‘not at-risk’ with acceptable accuracy to enable support to be appropriately allocated to where it is needed.

- However, current approaches to early mathematics assessment do not represent an efficient or effective approach to ‘screening’. Tools currently in use are largely diagnostic in nature or measure achievement rather than risk. Such tools are important within a broad approach to assessment but were not designed and are not suitable for screening purposes.

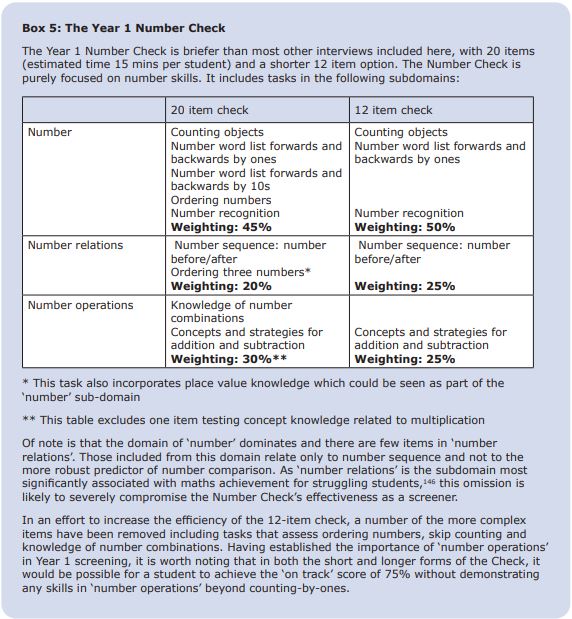

- The Year 1 Number Check, developed in response to previous recommendations for a consistent screening tool based on number sense in Year 1, is not widely used or fit for purpose in its current form. A new or significantly redesigned tool is needed which accurately represents the skills with predictive value in Year 1, is based on a robust model of what constitutes ‘number sense,’ and which measures not only knowledge and strategies but fluency with that knowledge. This tool should be research-validated to ensure its accuracy in identifying risk.

Policymakers should take action to widely implement effective screening and intervention

- Policy makers should implement a research-validated, nationally-consistent screening tool which measures aspects of the three domains of ‘number sense,’ consistent with the established research base.

- Screening tools designed on a conceptual model of ‘number sense’ should be developed for both Foundation and Year 1, and implemented with all students at least two times per year (beginning and middle of year).

- The second testing period in Year 1 should be consistent across all Australian schools and used for central data collection.

- A final testing period towards the end of Term 4 should involve a standardised test of maths achievement. This can help schools to evaluate how successful the teaching program has been and track students’ progress over time as they move through Primary School.

- Teachers and schools should be supported with professional learning programs to enable more intensive teaching for at-risk students. Systems should provide access to evidence-based tools for intervention, and the resources with which to deliver these to students identified through screening.

- Maths screening should occur within a multi-tiered framework which includes systematic processes for assessment and instruction at three tiers. Existing tools should be realigned to this framework, and progress monitoring tools developed.

- Early screening and intervention is necessary but not sufficient for some students to maintain pace with grade-level curriculum. Systematic screening and intervention resources and processes are also needed for middle and upper grades.

Introduction

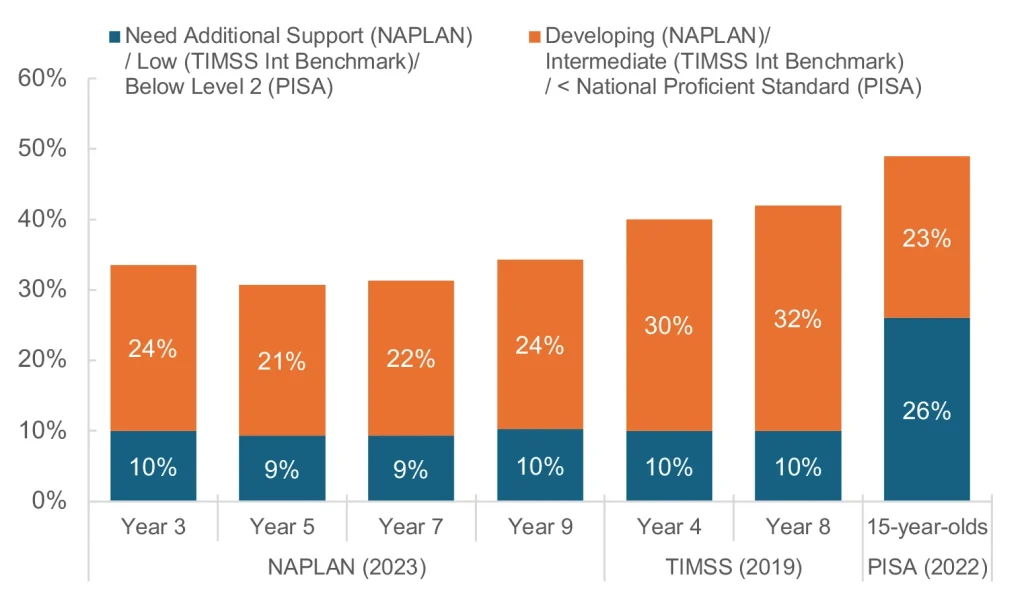

National and international data have repeatedly shown that many Australian school students struggle with mathematics. Around 10% of students achieve at a level that requires additional support (NAPLAN) or fall below the international benchmark in the Trends in International Mathematics and Science Study (TIMSS), equivalent to around 400,000 Australian students per year. More than a quarter of 15-year-olds are low performers in the subject (see Figure 1).

Figure 1: Proportion of Australian students achieving at levels below proficiency in domestic and international tests of numeracy and mathematical literacy

Australian students’ maths proficiency has at best stagnated (TIMSS, 2019)[1] — and at worst declined — both in absolute terms and compared to their overseas peers (Programme for International Student Assessment (PISA), 2022).[2] Over the most recent period of PISA testing (2018-2022), the gap between Australia’s lowest and highest achieving students continued to widen and is among the largest in developed countries in the world.

This cannot be attributed to a lack of money or instructional time. Australia spends around 23% more per student per year than the OECD average and requires the highest number of compulsory instructional hours in general education in the OECD.[3] It is abundantly clear that money alone is not the answer, and time spent in class does not necessarily equate to time spent well.

Students who struggle with maths can be identified early

Students who enter formal schooling with numeracy skills behind their peers rarely catch up, and mathematical risk factors can be evident much earlier than formal testing in school.[4] [5] [6] Students from disadvantaged backgrounds enter school with significantly lower number knowledge,[7] and are more likely to have slower rates of growth in their mathematical knowledge.[8]

Research shows that students’ pathways to post-secondary education are related to maths skills as early as age 4,[9] and can be predicted by their mathematics achievement in Year 5.[10] School mathematics achievement also has striking implications for life beyond formal schooling. Adults with poor numeracy have lower rates of employment, lower incomes, higher rates of homelessness and poorer health outcomes.[11] [12] [13] It is estimated that around 1 in 5 adults do not have the numeracy levels required to successfully complete daily tasks such as reading a petrol gauge or managing a household budget.[14]

Research from the Productivity Commission and the Australian Education Research Organisation (AERO) confirms that early difficulties persist into later academic skills. Basic literacy and numeracy skills upon school entry (as measured by the Australian Early Development Census) are strongly predictive of NAPLAN achievement in Year 3.[15] Students who perform poorly on NAPLAN in Year 3 are at high risk of continued poor performance throughout their schooling, with only one in five managing to later attain and maintain proficient levels of performance.[16]

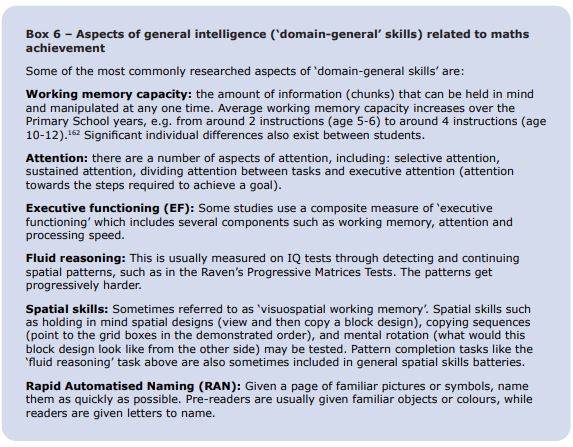

Differences in early maths proficiency are associated with socio-economic status and with individual differences in cognitive abilities including working memory (the general capability of keeping small amounts of information active and accessible)[17] and attention. However, the quality of school preparation and instruction is a significant contributor to students’ outcomes.[18]

Lack of access to high-quality universal early numeracy screening

Screening in the initial years of schooling is an essential component of a coordinated effort to raise achievement in mathematics. By the time struggling students are identified by NAPLAN in Year 3, precious time has been lost, and learning gaps have become more challenging and expensive to bridge, and less likely to be bridged at all.[19] [20] However, despite recent interest in early screening and intervention for numeracy difficulties, Australian schools and teachers routinely lack access to reliable tools and processes for identifying students at risk of later failure in school mathematics.

The need for consistency and rigour in such processes was recognised in 2017 by the National Advisory Panel for the Year 1 Literacy and Numeracy Check,[21] which recommended the development and use of a nationally consistent tool. This need was reinforced more recently by the Better and Fairer Review.[22]

Sadly, over the course of these six years, little change in practice or supporting policy has been implemented. As a result, current tools available to Australian schools are not designed for, or well suited to, the universal screening procedures recommended by the Better and Fairer Review.

Intervention outcomes have been mixed

Intervention outcomes have been mixed in practice, in part because interventions have not benefitted from high-quality universal screening. Policymakers have recognised the need to support students who are struggling or at risk of not meeting expected achievement levels. Interruptions to schooling due to the COVID-19 pandemic resulted in the rollout of around a billion dollars in small group tutoring initiatives in NSW and Victoria to better support struggling students. Education ministers have further signalled the ambition to scale up such programs.

However, this willingness to better support struggling students through intervention has not been matched by improvements in valid and reliable ways of identifying the students who would benefit most from targeted additional support. Independent evaluations show that these government-run initiatives, while well intentioned, failed to guarantee evidence-based approaches to assessment and intervention and, as a result, had no impact on student outcomes.[23]

Access to reliable data about who is likely to struggle in maths and what support they might need will help schools and teachers to intervene early and deliver more effective, targeted intervention. Despite the obvious need, approaches to early maths screening in Australian schools have changed little in the past 20 years, even though international research has revealed much about how to gather such data effectively and efficiently.

This report will examine research findings on effective screening processes for mathematics difficulties, and how current practices in Australia align with and could be improved by using the most recent scientific research into preventing and addressing numeracy difficulties.

The role of early mathematics screening

Detecting and addressing difficulties early can demonstrably impact achievement, anxiety, motivation and participation in mathematics, both in the short and longer term.

By identifying students who struggle early, and delivering high quality educational interventions, it is possible to alter patterns of underachievement.[24] [25] Providing quality educational interventions early in students’ schooling can raise achievement.[26] [27] In addition, students who master foundational skills in maths are better prepared to grapple with the more complex ideas presented in the later school years.

Much has been made of the issues in attracting students, particularly girls, into STEM subjects and fields. Anxiety around maths is often touted as the reason so many of these students avoid pursuing maths subjects and careers.[28]

Raising motivation and engagement has therefore been the focus of considerable effort and investment by successive governments. It is certainly true that there is a complex relationship between confidence, anxiety, self-concept, motivation and achievement.

However, recent research has revealed successful early mathematical experiences may hold the key. According to a recent CIS report from Professor David Geary:[29]

“Students who experience early difficulties with maths are more likely to suffer from maths anxiety, rather than the other way around.”

Hence, early difficulties are often the catalyst for a cycle of mathematics anxiety, poor motivation and underachievement. Therefore, effective mathematical interventions are likely to have flow-on effects not just to mathematical achievement, but also to motivation and interest — which influence choices about pursuing mathematics-related courses and careers. This is particularly the case for females, who disproportionately experience maths anxiety and are consequently underrepresented in secondary mathematics courses and mathematics-related careers.[30]

Figure 2: Representation of the failure cycle applied to mathematics

Students’ self-perceptions of whether they are any good at maths, known as self-efficacy, are a key factor in these decisions.[31] [32] It is hardly surprising that students who are anxious about maths and perceive themselves to be poor at maths will avoid mathematics subjects and maths-related careers. What is surprising is how early this negative spiral of poor achievement, low motivation, and poor self-efficacy becomes established (see Figure 2).[33] [34]

In other words, the weight of evidence suggests that achievement creates motivation and engagement and reduces maths anxiety, rather than the other way around. Fortunately, by intervening early the impact of this destructive ‘feedback loop’ can be lessened. The importance of early intervention, therefore, cannot be over-emphasised. Students must receive mathematics support early and experience success with mathematics.

What tools are Australian schools using?

Australian schools are well aware of the need to gather information about students’ early mathematical abilities to inform instruction. The primary methods by which systems currently collect this data are individual interviews and standardised testing.

Most systems invest considerable amounts of time and money funding individual interview-style assessments in the early years of school. To conduct such interviews, relief teaching staff are usually employed to release classroom teachers who sit down with individual students and observe their responses to particular mathematical tasks. See Table 1 for an overview of commonly used assessment tools in early mathematics.

* Mandated use by system

Two sectors in two different states indicated that they recommend their teachers use the Year 1 Number Check, developed by the federal government in response to the National Year 1 Literacy and Numeracy Check: Report of the Expert Advisory Panel.[35] See Box 1 for a brief description of the Year 1 Number Check.

The mathematics interview

Mathematics interviews have a long history in mathematics education, dating back to the research of Piaget in the early 1960s. Most, if not all, teachers would be familiar with Piaget’s ‘Stage Theory’ of cognitive development. This theory was derived through task-based clinical interviews in which children engaged with mathematical tasks while the researcher observed and asked questions to probe mathematical thinking and reasoning. Despite the subsequent inaccuracies uncovered in Piaget’s conclusions, clinical interviews are still highly valued by mathematics education researchers as a window into students’ thinking.

The clinical interview for mathematics has its origins in the traditions of cognitive science. In cognitive science, the focus is not on observable behaviour, but on uncovering the cognitive processes which underlie that behaviour. As Piaget expressed it:[37]

“Now from the very first questionings I noticed that though [the standardised] tests certainly had their diagnostic merits, based on the numbers of successes and failures, it was much more interesting to try to find the reasons for the failures. Thus I engaged my subjects in conversations patterned after psychiatric questioning, with the aim of discovering something about the reasoning process underlying their right, but especially their wrong answers. I noticed with amazement that the simplest reasoning task … presented for normal children … difficulties unsuspected by the adult.”

Researchers have learned much about children’s mathematical thinking through interview techniques. For example, it was through clinical maths interviews that Rochel Gelman and Randy Gallistel formulated their theory about the five principles that govern children’s successful counting of collections.[38]

Interviews are particularly favoured in approaches which aim to measure children’s progress along a trajectory of development in their thinking. The interview is the means by which teachers establish where the child is on that trajectory, and what they need to learn next.

For example, the task “I have 3 buttons and Mum gives me 6 more – how many do I have now?” can be represented and solved by a child in a number of ways. With access to counters (or indeed, fingers), a very young child could count out the 3 items, count out the 6 items, and then count the whole collection starting from one: 1, 2, 3… 7, 8, 9. A more advanced child might recognise they are able to start counting from the first collection, and count up to add the second collection: 3… 4, 5, 6, 7, 8, 9. More advanced still is the child who recognises that it is more efficient, and equally valid, to count up from the larger number: 6… 7, 8, 9. A further strategy would be to retrieve the known fact 3+6 from memory and know that the answer is “9” without any counting at all.
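The progression of strategies described above can be made concrete with a minimal sketch. The function names and the idea of comparing strategies by the number of counting words spoken are illustrative assumptions, not part of any interview schedule discussed in this report.

```python
# Illustrative sketch only: models the counting words a child would say aloud
# under each strategy for the task a + b. All names here are hypothetical.

def count_all(a, b):
    """Count the combined collection starting from one (the separate
    counting-out of each addend beforehand is not modelled)."""
    return list(range(1, a + b + 1))

def count_on(a, b):
    """Start from the first addend and count up through the second."""
    return list(range(a + 1, a + b + 1))

def count_on_from_larger(a, b):
    """Start from the larger addend and count up by the smaller one."""
    start, steps = max(a, b), min(a, b)
    return list(range(start + 1, start + steps + 1))

def retrieve_fact(a, b):
    """A known fact is recalled directly, so no counting words are spoken."""
    return []

STRATEGIES = [count_all, count_on, count_on_from_larger, retrieve_fact]

def counting_steps(a, b):
    """Return {strategy name: number of counting words spoken} for a + b."""
    return {s.__name__: len(s(a, b)) for s in STRATEGIES}

if __name__ == "__main__":
    for name, n in counting_steps(3, 6).items():
        print(f"{name}: {n} counting words")
```

For the buttons task (3 + 6), the four strategies require 9, 6, 3 and 0 counting words respectively, which is one way of seeing why an interviewer treats each step along the trajectory as more efficient than the last.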

In what was perhaps the first comprehensive maths interview developed in Australia, Bob Wright and colleagues created a number of assessment schedules based on such a framework: the Learning Framework in Number.[39] These interviews became the bedrock of the Mathematics Recovery intervention program for struggling Year 1 and 2 students.[40] Mathematics Recovery was then adapted in the design of Mathematics Intervention, which focused on at-risk Year 1 students.[41]

Inspired by this approach, in the following decades, large-scale projects based on understanding mathematical learning trajectories through diagnostic interviews were conducted in New South Wales (Count Me In Too), Victoria (Early Years Numeracy Research Project), and New Zealand (Numeracy Development Project). The success of these projects in raising teacher knowledge about mathematical development was largely attributed to the use of the interviews and the trajectories upon which they were based.[42] [43] The Early Years Numeracy Research Project (EYNRP) further demonstrated that this knowledge could have an impact on students’ learning, by moving them further along the trajectory when compared to peers outside the project.[44] The intervention program Extending Mathematical Understanding (EMU)[45] was developed as an intensification of the EYNRP for at-risk students.

Most individual mathematics interviews in use in Australia today are derived from these earlier projects. In particular, the Early Numeracy Interview (ENI) developed for the Early Years Numeracy Research Project[46] was revised and expanded to become the Mathematics Assessment Interview (MAI)[47] which was then adapted for online delivery and renamed the Mathematics Online Interview for use in the Victorian Education system. The On-Entry Assessment – Numeracy used in Western Australia at the beginning of the Pre-Primary year (Foundation – Module 1) and Year 1 (Module 2) is also based on the ENI.

Standardised achievement testing

The school assessment landscape has changed considerably in the years since Count Me In Too and the Early Numeracy Research Project were active in schools. One significant change was the introduction of NAPLAN testing in 2008, after which the need to have a measurable way to monitor student progress through the years became a more pressing issue for schools. Education sectors in the ACT, NT, SA, Tasmania and WA indicated that their schools were required or encouraged to administer annual standardised mathematics achievement tests, most commonly ACER’s Progressive Achievement Test in Mathematics (PAT Maths).

PAT Maths aims to measure student achievement across all three strands of the mathematics curriculum (number and algebra; measurement and geometry; and statistics and probability) and to encompass all proficiency strands (fluency, understanding, problem-solving and reasoning).[48]

Standardised measures of achievement are generally acknowledged to be important for tracking students’ progress over time and prompting important discussions about student learning and educational policy.[49] [50] Some standardised achievement measures aim to provide extremely detailed information about individual students’ learning needs and involve individual administration over extended periods of time. However, the extent to which broad measures of curriculum attainment (e.g. PAT Maths, NAPLAN) are used by teachers to effectively inform instruction is less clear.[51]

Methods for early and universal maths screening

Teachers and schools need reliable data about who is struggling and what support they might need. However, this data must not come at an undue cost to instructional time. There is therefore a delicate balance to be struck: tools should gather just enough data, just in time, to be useful in informing support decisions.

The Better and Fairer Review[52] recognised the imperative that “students who start school behind or fall behind are identified as early as possible so they can receive targeted and intensive support” (p.57), and recommended the adoption of universal screening as a component of a multi-tiered system of supports (MTSS). Increased consistency around screening processes in Foundation and Year 1 was recognised as an important first step.

Having established that teachers, schools and systems recognise the need for screening, it is necessary to consider what it is and how it differs from other forms of assessment. Australian schools already use a significant number of assessment processes in maths, but these processes are currently not preventing large numbers of children from falling behind and staying there.

Universal screening is better suited than diagnostic assessment for identifying students at risk

Universal screening, rather than diagnostic assessments, is required for effective identification of students in need of additional support. The value of universal screening has long been acknowledged internationally. In reviewing available research on supporting students with mathematical difficulties, the leading recommendation of the 2009 report of the Institute of Education Sciences[53] was that:

“…schools and districts systematically use universal screening to screen all students to determine which students have mathematics difficulties and require research-based interventions.”

The ‘universal screening’ paradigm was first developed in preventative medicine. Universal screening procedures in health have a long history of research and successful implementation and are a useful analogy for developing processes in education. According to the National Library of Medicine (USA):[54]

“Diagnostic tests are usually done to find out what is causing certain symptoms. Screening tests are different: they are done in people who do not feel ill. They aim to detect diseases at an early stage, before any symptoms become noticeable. This has the advantage of being able to treat the disease much earlier.”

Whereas the purpose of diagnostic assessment is to identify the potential causes (and by extension possible treatments) for specific known problems, the purpose of screening is to identify problems before they might be obvious to treatment providers or even patients themselves. Screening tests are administered across entire populations to determine who may be at-risk of an adverse outcome (in this case, poor achievement). For example, health systems schedule regular visits for new mothers and their babies to the child health nurse. At these visits, children are routinely weighed and measured and vital signs are collected. These measures are valid universal screenings that are used to signal a potential problem in development that merits further identification and possibly treatment efforts.

In other words, the purpose of screening is to identify which children need further assessment and possibly intervention. The purpose of diagnostic assessment is to figure out which intervention is needed. The reason year-end test scores are not effective universal screening devices is that year-end tests occur after instruction has been delivered and reflect whether that instruction was effective or not in hindsight. Screening measures, in contrast, are designed to be given midstream when intervention actions can be initiated to avoid or prevent failure on the year-end measure. Screening measures serve two important purposes in assessment: they are used to evaluate general programs of instruction for the purpose of program improvement and they are used to signify the need for intervention for most students, small groups of students, and individual students.

Therefore, the distinction between ‘screening’ and ‘diagnostic’ assessments is an important one. Early identification through screening has also been a goal of reading research, over a more extensive period of time, resulting in efficient tools for identifying students at risk, which are then supplemented by more detailed diagnostic measures to inform intervention decisions. Many have suggested it is now time to apply what we have learned about screening in reading to screening for maths difficulties.

Early screening must be part of a systematic approach

In order to change students’ long-term learning trajectories and outcomes, we need to change the way schools identify and offer targeted support to those most at risk. There will be no magic panacea or ‘quick fix’ that will catch struggling students up and keep them achieving at the desired level. A complex problem requires a multi-tiered solution; specifically, evidence-based strategies and tools from the moment students walk into their first classroom and every year thereafter.

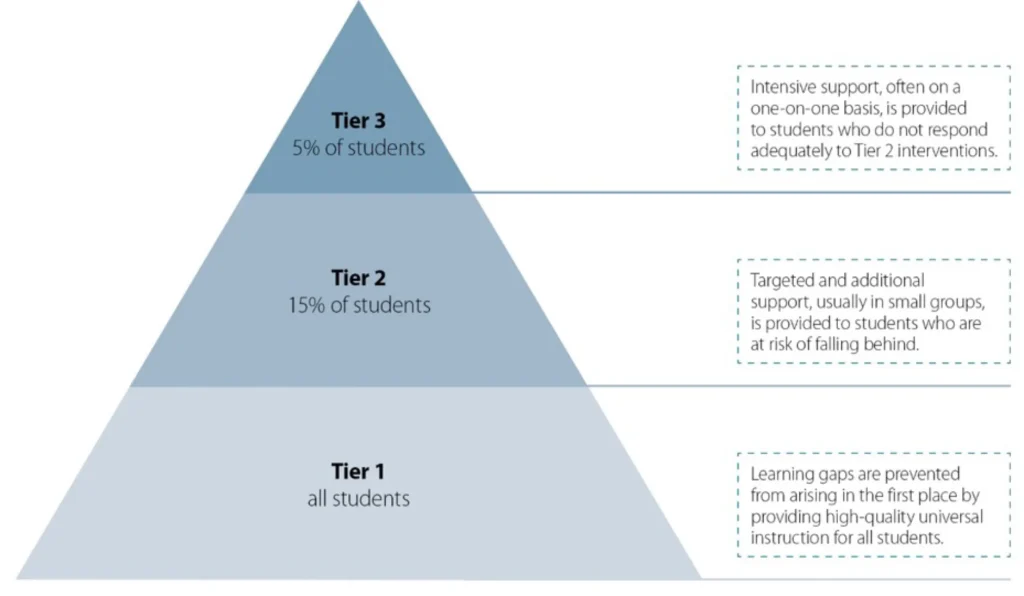

Many researchers and education systems around the world, including in Australia, are now recognising the value of Multi-Tiered Systems of Support (MTSS) to ensure all students receive the level of support that will enable them to succeed. As asserted by the Better and Fairer Review,[55] “implementing a multi-tiered system of supports will lift achievement for all students” (p.54). In a recent report, the Australian Education Research Organisation recommended MTSS as the most effective framework for identifying and supporting struggling students in Australia.[56]

An MTSS provides an enabling context in which early screening and intervention can be successful in changing educational outcomes for students. Struggling students receive progressively more intensive support to ensure everyone’s needs are met. Support and resourcing are allocated on the basis of educational need and regardless of classification – socio-economic, disability, ethnic or otherwise. At each layer of intensity, instruction is informed by and monitored with targeted assessment tools. In the first instance, this assessment begins with an effective screening tool that can be used with whole classes of students to give reliable information about who needs extra support, and a starting point for what that support should look like. Figure 3 shows the three levels, or ‘tiers’ of cumulative support that are integral to MTSS. MTSS is described in more detail in Box 2.

Figure 3: MTSS model showing a coordinated system of increasingly intensive support offered in three ‘tiers’. Source: the Better and Fairer Review

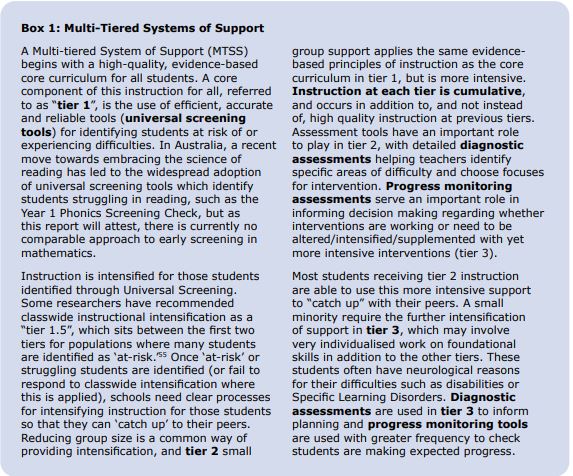

A Multi-Tiered System of Support (MTSS) begins with a high-quality, evidence-based core curriculum for all students. A core component of this instruction for all, referred to as “Tier 1”, is the use of efficient, accurate and reliable tools (universal screening tools) for identifying students at risk of or experiencing difficulties. In Australia, a recent move towards embracing the science of reading has led to the widespread adoption of universal screening tools which identify students struggling in reading, such as the Year 1 Phonics Screening Check, but as this report will attest, there is currently no comparable approach to early screening in mathematics.

Instruction is intensified for those students identified through Universal Screening. Some researchers have recommended classwide instructional intensification as a “Tier 1.5”, which sits between the first two tiers for populations where many students are identified as ‘at-risk.’[57] Once ‘at-risk’ or struggling students are identified (or fail to respond to classwide intensification where this is applied), schools need clear processes for intensifying instruction for those students so that they can ‘catch up’ to their peers. Reducing group size is a common way of providing intensification, and Tier 2 small group support applies the same evidence-based principles of instruction as the core curriculum in Tier 1, but is more intensive. Instruction at each tier is cumulative, and occurs in addition to, and not instead of, high quality instruction at previous tiers. Assessment tools have an important role to play in Tier 2, with detailed diagnostic assessments helping teachers identify specific areas of difficulty and choose focuses for intervention. Progress monitoring assessments serve an important role in informing decision making regarding whether interventions are working or need to be altered/intensified/supplemented with yet more intensive interventions (Tier 3).

Most students receiving Tier 2 instruction are able to use this more intensive support to “catch up” with their peers. A small minority require the further intensification of support in Tier 3, which may involve very individualised work on foundational skills in addition to the other tiers. These students often have neurological reasons for their difficulties such as disabilities or Specific Learning Disorders. Diagnostic assessments are used in Tier 3 to inform planning and progress monitoring tools are used with greater frequency to check students are making expected progress.
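The flow of decisions through the tiers described above can be sketched in a few lines of code. This is a simplified illustration under stated assumptions: the `Student` record, the function names and the 50% classwide threshold are all hypothetical, and real decision rules would come from validated screening and progress monitoring tools.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical value for illustration only: the share of a class flagged by the
# screener at which classwide intensification ("Tier 1.5") might be considered.
CLASSWIDE_THRESHOLD = 0.5

@dataclass
class Student:
    name: str
    flagged_by_screener: bool
    # None means progress in Tier 2 has not yet been monitored.
    responded_to_tier2: Optional[bool] = None

def classwide_intensification_needed(students):
    """True when the proportion of flagged students meets the assumed threshold."""
    if not students:
        return False
    flagged = sum(s.flagged_by_screener for s in students)
    return flagged / len(students) >= CLASSWIDE_THRESHOLD

def assign_tier(student):
    """Return the most intensive tier a student receives.

    Support is cumulative: a student in Tier 2 or Tier 3 still receives Tier 1.
    """
    if not student.flagged_by_screener:
        return 1
    if student.responded_to_tier2 is False:
        return 3  # progress monitoring shows Tier 2 support was not enough
    return 2

if __name__ == "__main__":
    classroom = [
        Student("A", flagged_by_screener=False),
        Student("B", flagged_by_screener=True),
        Student("C", flagged_by_screener=True, responded_to_tier2=False),
    ]
    for s in classroom:
        print(s.name, "-> Tier", assign_tier(s))
    print("Classwide intensification:", classwide_intensification_needed(classroom))
```

The sketch separates the classwide decision from the individual one because the first depends on how many students are flagged, while the second depends on each student's screening result and response to support.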

Existing early screening approaches based on Number Sense

At this time, examples of empirically validated screening measures focused on ‘number sense’ are found only overseas (a detailed description of number sense is provided later in this report). Although many effective screening tools include tasks aligned to some or all of the three subdomains of ‘number sense’, there are also examples in which a conceptual model of ‘number sense’ is central to the design of the screener as a whole.

Two examples are the Number Sense Screener (NSS)[58] for use in Kindergarten and early Grade 1, and the Screener of Early Number Sense (SENS), which has broader application across Preschool to Grade 1.[59] These tools were developed and researched in the USA; Kindergarten in North American education systems could be considered equivalent to Foundation in Australia (pre-Year 1). The SENS acknowledges the different mix of competencies important in each grade level. Both screeners have shown good accuracy in classifying children as at-risk or not at-risk for mathematics difficulties, and are designed to channel children into intervention programs such as the associated Number Sense Interventions.[60]

The same framework informed the design of the Early Mathematical Assessment (EMA@School) from Carleton University which has been widely trialled in Canadian schools. Although the EMA has been trialled across Kindergarten (Australian equivalent to Foundation) to Grade 4, and used to identify children for intervention and to monitor their progress, data on reliability and validity is yet to be published.

The Early Numeracy Screener (ENS) applied a similar framework around core mathematics competencies for 5-8 year-olds to screen First Grade children for mathematics difficulty. This framework was structured around four core domains, with number knowledge separated into symbolic and non-symbolic number sense and counting skills.[61] The ENS was found to be a valid measure of early number competency and correlated with end-of-year national test results.[62] [63] While developed at the University of Helsinki in Finland, the screener and subsequent First Grade intervention showed promise when trialled in South Africa.[64]

Characteristics of effective screeners

There are a number of practical considerations when designing or choosing screening measures for broad use across large populations of students. As explained by the 2009 report of the Institute of Education Sciences:[65]

“Schools should evaluate and select screening measures based on their reliability and predictive validity, with particular emphasis on the measures’ specificity and sensitivity. Schools should also consider the efficiency of the measure to enable screening many students in a short time.” (p.13)

Screening tools should detect children at risk of mathematical failure, in ways that are reliable, accurate, and inform instructional decisions in clear and productive ways.

Reliability

The above quote reveals a number of important characteristics of screening tools. Firstly, scores must be reliable, meaning the tool gives consistent results that can be applied broadly across diverse populations. There are different forms of reliability: test-retest reliability (a student tested on the measure is likely to receive a very similar score if they sit the test again a week later), internal consistency (how well scores from a set of items relate to each other), alternate forms reliability (how well scores on different sets of items within the screener relate to each other) and inter-rater reliability (how similarly different scorers rate the same responses). Measures of early maths validated by research typically rate moderately strong to strong for reliability, in both timed and untimed presentations.[66]
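To make two of these forms of reliability concrete, the sketch below computes a test-retest correlation and an internal consistency coefficient (Cronbach's alpha, a commonly used statistic that is assumed here rather than named in the sources cited). All scores are invented for illustration and the function names are hypothetical.

```python
from statistics import mean, variance


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of scores."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    spread_x = sum((x - mx) ** 2 for x in xs)
    spread_y = sum((y - my) ** 2 for y in ys)
    return cov / (spread_x * spread_y) ** 0.5


def cronbach_alpha(responses):
    """Cronbach's alpha for per-student item scores (rows = students, columns = items)."""
    n_items = len(responses[0])
    item_variances = [variance([row[i] for row in responses]) for i in range(n_items)]
    total_variance = variance([sum(row) for row in responses])
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical data: five students screened twice, one week apart.
week_1 = [10, 14, 18, 22, 26]
week_2 = [11, 13, 19, 21, 27]
test_retest = pearson_r(week_1, week_2)

# Hypothetical data: the same five students on four items (1 = correct, 0 = incorrect).
item_scores = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
alpha = cronbach_alpha(item_scores)

print(f"test-retest r = {test_retest:.2f}, Cronbach's alpha = {alpha:.2f}")
```

Values closer to 1 indicate more consistent scores. A real validation study would use far larger samples than this toy example.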

Predictive validity

Secondly, scores must have strong ‘predictive validity’, meaning they are a good indicator of later maths achievement. The following sections review in detail the skills and competencies which show predictive validity for maths achievement.

Sensitivity and specificity

Specificity and sensitivity are important to ensure that scarce additional resources are allocated where they are actually needed — to the children who would have struggled without the additional support. Sensitivity refers to the degree to which the tool is accurate in identifying children who will go on to have difficulty, and specificity to ruling out children who will not.

Research has revealed the latter is a significant problem in early maths screening. In order to avoid missing anyone, even measures considered to have ‘good specificity’ still identify large numbers of false positives.[67] These children are ‘flagged’ at the screening stage as being ‘at-risk’ but manage to ‘catch-up’ without the need for additional support — meaning scarce intervention resources may be directed inefficiently, thereby reducing the intensity available for students who really need the help.
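The arithmetic behind this problem can be sketched with a small worked example. The cohort below is hypothetical (100 students, 20 of whom go on to have difficulty) and the counts are invented, not drawn from the studies cited.

```python
def screening_accuracy(true_pos, false_neg, true_neg, false_pos):
    """Return sensitivity, specificity, and the share of flagged students who are false positives."""
    sensitivity = true_pos / (true_pos + false_neg)   # struggling students correctly flagged
    specificity = true_neg / (true_neg + false_pos)   # other students correctly ruled out
    false_positive_share = false_pos / (true_pos + false_pos)
    return sensitivity, specificity, false_positive_share


# Hypothetical cohort: 20 students later have difficulty, 80 do not.
sens, spec, fp_share = screening_accuracy(true_pos=18, false_neg=2, true_neg=64, false_pos=16)

print(f"sensitivity = {sens:.2f}")
print(f"specificity = {spec:.2f}")
print(f"false positives among flagged students = {fp_share:.2f}")
```

In this invented cohort the screener has 90% sensitivity and 80% specificity, yet 16 of the 34 flagged students (about 47%) would have caught up without additional support.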

Gated screening is more accurate than single-point-in-time screening

One solution to this problem is the use of a ‘gated’ screening process in preference to screening at a single point in time. Gated screening processes require that schools consider multiple sources of information in making intervention decisions. Students identified through the initial ‘gate’ (screen) as being ‘at-risk’ have the opportunity to benefit from increased instructional intensity in the regular classroom.[68] This increased intensity means increased opportunities to respond, which can be achieved through more direct instructional approaches with frequent group responding and/or carefully structured peer practice protocols.

Only those students identified as ‘at-risk’ at the first gate are then tested at the second ‘gate’ (see Figure 4). This identifies students who have failed to respond to the increased intensity, and are therefore likely to need additional support to ‘catch up.’

Figure 4: Representation of ‘gated screening’ process

In order to maximise accuracy, this second ‘gate’ ideally features a measurement instrument different from the first.[69] [70] In measuring number sense, the second gate could have an exclusive or heavier focus on those skills which research has revealed are most predictive for low-achieving children in a given year level. For example, although a universal screener (gate one) for Year 1 may feature all three strands with a relatively heavier focus on number relations and number operations, the second gate may focus increasingly on number relations (comparing number symbols or number line estimation), which is especially significant in predicting the achievement of lower achieving students at this age.[71]

Alternatively, the two gates can focus differentially on sensitivity and specificity to increase decision accuracy.[72] In a context where only one reliable screening tool may be available, cut scores with the same ‘number sense’ tool could be manipulated to prioritise sensitivity at the first gate and specificity at the second. In this approach, a wider net is cast at the first gate to minimise the chance of students being ‘missed’ by the screener. Following a period of intensified instruction, the screener is re-administered to only ‘at-risk’ students with a more selective cut-score to rule out those students who have benefited from that instruction and do not require further support at this time.
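The single-tool approach described above can be sketched as follows. This is an illustration only: the scores, cut scores and function name are hypothetical, and it assumes that higher scores are better, so that a higher cut score flags more students (favouring sensitivity) and a lower cut score flags fewer (favouring specificity).

```python
def gated_screen(first_scores, second_scores, lenient_cut, strict_cut):
    """Two-gate screening with one tool and two cut scores.

    first_scores:  student -> score at the first administration.
    second_scores: student -> score after a period of intensified instruction.
    Returns the sets of students flagged at gate one and at gate two.
    """
    # Gate one casts a wide net: anyone below the higher cut score is flagged.
    gate_one = {s for s, score in first_scores.items() if score < lenient_cut}
    # Gate two re-screens only flagged students, using the more selective cut score.
    gate_two = {s for s in gate_one if second_scores[s] < strict_cut}
    return gate_one, gate_two


# Hypothetical scores for five students.
first = {"A": 12, "B": 25, "C": 18, "D": 30, "E": 9}
second = {"A": 22, "C": 14, "E": 10}  # only gate-one students are re-screened

flagged_first, flagged_second = gated_screen(first, second, lenient_cut=20, strict_cut=15)
print(sorted(flagged_first))
print(sorted(flagged_second))
```

Here student A is flagged at the first gate but responds to the intensified classroom instruction, so only C and E are referred for further support.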

The underlying principle of gated screening is to triangulate multiple sources of data that measure both the acquisition of predictive skills and knowledge, and the impact of classroom instruction on areas of difficulty. When implemented effectively, gated screening results in more accurate classification decisions than ‘single point in time’ screening, especially where there is a high base-level of risk in a student population; i.e. many students are likely to be identified as at-risk.[73] [74] Where gated screening leads to increased instructional intensity after the first gate, this benefits all students in addition to improving the functioning of the screening.

Efficiency

Because education systems, like health systems, have scarce resources:[75]

“[they] are faced with opportunity costs; this means that any investment in a screening tool will come at the cost of other health services to the detriment of those patients who would have been treated”

Time and money spent testing is time and money not spent teaching, just as resources spent on health screening are not available for patient care. Hence, screening tools must be efficient.

Informing instructional actions

There is one further lesson from health which has direct relevance to education:[76]

“… treating a disease at an early stage only makes sense if it leads to a better health outcome than treating it at a later stage.”

Access to educational screening tools which lead to specific instructional actions is similarly important in raising achievement. In a synthesis of 21 research studies concerning students with special needs (which in the USA includes those with Specific Learning Disorders in reading, writing and mathematics), it was found that systematically collecting data to inform instruction increased achievement generally. However, the effects were twice as large when teachers attended to decision rules made before the assessment was administered, rather than using their own judgement to decide how to respond to the results afterwards.[77] Therefore, effective screening tools should have clear decision rules and lead to particular instructional actions.

Two main schools of thought in predicting early numeracy success

The first task is to determine which skills and abilities are most predictive of mathematical success, and should therefore be the focus of screening tools. The goal of screening is to identify those students who, without additional support, would likely go on to score below proficiency levels in subsequent tests such as NAPLAN and TIMSS. This information then enables schools and teachers to appropriately target support.

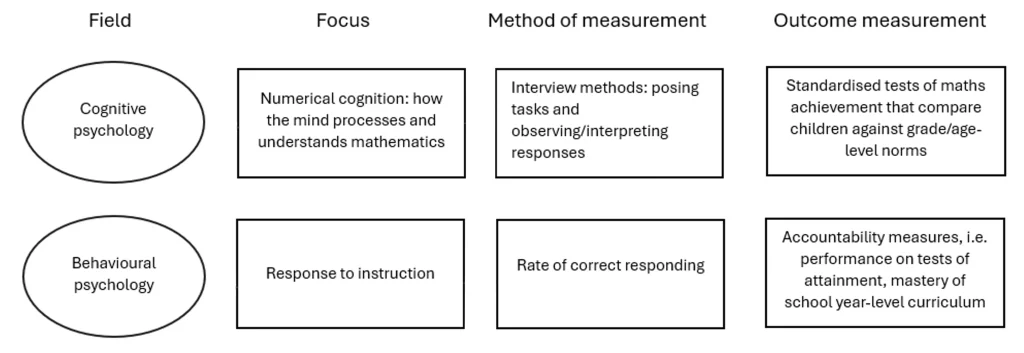

Efforts have primarily come from two fields: cognitive psychology and behavioural psychology.

Cognitive psychologists are focused on numerical cognition: the neural and cognitive mechanisms underlying our ability to understand and use numerical information.[78] Thus, relevant research from this field has focused at least in part on the search for a ‘core deficit’ that underlies later difficulties in numeracy.

It has long been established that what we refer to as ‘learning’ is the result of complex interactions between internal characteristics and environmental conditions. Although we all learn with the same cognitive architecture, there are significant differences between individuals in the functioning of this architecture. These differences can affect the ease with which we acquire the knowledge and skills that schools are tasked with teaching to children. When such a difference has a significant impact on the learning of a core body of knowledge such as reading or mathematics, it can be described as a ‘core deficit.’

In early reading assessment, a significant body of research and practice has concluded that phonological processing (proficiency in processing the sound structure of language) represents a ‘core deficit’ because this capability enables the acquisition of a cascade of skills needed for proficient reading. However, there is unlikely to be one single ‘core deficit’ in mathematics that is responsible for poor mathematics achievement. The core skills and knowledge for mathematics change as the demands and nature of mathematics change throughout schooling.

In contrast to the cognitive psychologists’ approach, behavioural psychology focuses on learning as an observable change in behaviour in response to environmental stimuli.[79] Hence, this research focuses on the measurement of learning at different stages of schooling and on measuring growth in response to instructional conditions.

In mathematics, unlike reading, it is unlikely there is a single General Outcome Measure — a quick and reliable indicator of overall competency — that is valid in measuring mathematics achievement across time. The skills most representative of overall maths achievement, and most predictive of future maths achievement, differ depending on stage of learning and therefore schooling.

Figure 5: Schematic representation of the approaches of cognitive and behavioural psychology fields to screening research in mathematics

Cognitive psychology: The search for a core deficit

The search for a core deficit in numeracy parallels the work done in literacy which has revealed that phonological processing deficits (sensitivity to and use of the sound structure of spoken language, which includes the ability to isolate individual sounds in speech) appear to be at the root of most reading difficulties.[80] Research on early literacy learning has consistently revealed the importance of skills related to the mapping of letters and letter combinations onto these sounds for early literacy instruction.[81] [82] [83] These mapping skills are reliable predictors of early reading development, which has aided the development of tools for identifying students at risk of difficulties such as the Year 1 Phonics Screening Check.

Figure 6: Sample of symbolic vs non-symbolic comparison tasks from the Numeracy Screener (Numerical Cognition Laboratory, 2024)

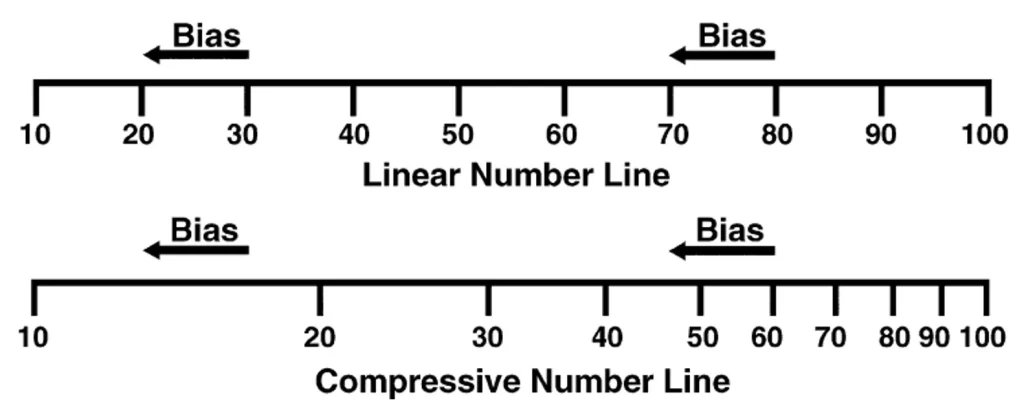

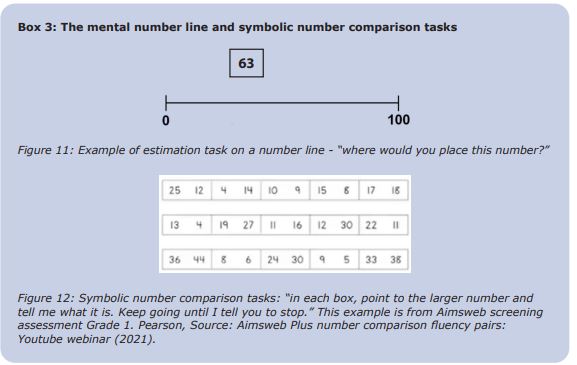

Cognitive psychologists have attempted to learn about the core components that enable skill with numbers, largely using individual interview techniques. Symbolic number processing and the Mental Number Line, both thought to enable children to understand abstract mathematics, have been investigated as possible candidates for a core deficit in numeracy. The speed at which children process (compare) numerals, rather than quantities shown non-symbolically (e.g. with dots),[84] is predictive of their future success with mathematics. This ability is thought to rely on a mental structure called a Mental Number Line.

The human mind has been shown to develop a spatial representation of numbers, on a line with smaller numbers on the left and larger numbers on the right.[85] While humans are born with certain quantitative abilities, the development of an increasingly accurate mental number line occurs in response to formal mathematics instruction and is thought to enable students to learn arithmetic[86] and even underlie later success with fraction concepts and procedures.[87] This spatial representation of number magnitude is at least partly responsible for the strong relationship between early spatial abilities and mathematics development.[88] [89]

In the early years of schooling, students’ mental representation of the number line overestimates the distance between smaller numbers and compresses the distance between larger numbers. The development of a more linear mental number line where values are equally spaced has been shown to be reliably related to mathematics achievement in the early years of school (see Figure 7).[90] [91]

Figure 7: Linear vs non-linear representation of a number line
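One way to quantify how linear a child's number line estimates are is to compare how well a straight-line model and a logarithmic model fit their placements. The sketch below assumes that approach; the placements are invented for illustration and the function names are hypothetical.

```python
import math


def r_squared(xs, ys):
    """Share of variance in ys explained by a straight-line fit on xs."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov ** 2 / (var_x * var_y)


def best_fitting_shape(targets, placements):
    """Compare a linear model with a logarithmic model of one child's placements."""
    linear_fit = r_squared(targets, placements)
    log_fit = r_squared([math.log(t) for t in targets], placements)
    shape = "linear" if linear_fit >= log_fit else "logarithmic"
    return shape, linear_fit, log_fit


# Hypothetical placements on a 0-100 number line.
targets = [1, 5, 10, 25, 50, 75, 100]
younger_child = [2, 35, 50, 70, 85, 93, 100]  # small numbers spread out, large numbers bunched
older_child = [1, 6, 9, 27, 48, 77, 99]       # values roughly evenly spaced

print(best_fitting_shape(targets, younger_child)[0])
print(best_fitting_shape(targets, older_child)[0])
```

With these invented data, the younger child's placements are better described by the logarithmic model and the older child's by the linear model.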

There is conflicting evidence as to whether a deficit in number magnitude processing is unique to students with persistent mathematical difficulties, diagnosed as Mathematical Learning Disabilities or a Specific Learning Disorder in Mathematics.[92] [93] Although number line representations remain important, these same skills have very little influence on students’ mathematics achievement by the time they reach upper primary school.[94] While whole number knowledge and arithmetic are most important in the early grades, fraction knowledge is a key predictor in later grades.[95]

Behavioural psychology: The search for a General Outcome Measure

Behavioural psychology has largely focused on the development of tools known as Curriculum-Based Measurement (CBM). Due to the need for measures to detect growth in response to instruction, CBMs have a heavy focus on measuring fluency as indicated by rate of correct responding (measures are timed). The fluency with which a skill is performed is related to the student’s stage of learning within an instructional hierarchy which begins with acquisition and progresses to fluency building and finally mastery – enabling retention and application of the learned skill.[96] Therefore, measuring the rate at which a skill is performed can give insight into whether the student is still acquiring the skill, is ready for independent practice, or has mastered it and is capable of applying it across contexts.

In reading, acquisition and mastery of the alphabetic code knowledge for reading and spelling is the gateway to students engaging productively with text. It facilitates the application of higher level skills such as reading comprehension and written composition. This means that, across a broad range of ages, a single measure of oral reading fluency (ORF: measured as words read correctly per minute on a grade level text) can be used as a General Outcome Measure (GOM) — a quick and reliable indicator of overall reading achievement. Such measures are very useful for screening for early difficulties, and efficiently monitoring progress over time including all the grade levels during which children are learning to read. In reading, educators can use a brief oral reading fluency measure to gain reliable information about students’ overall reading progress, in the same way a doctor might take your temperature to see if you have an infection (but without revealing the source or nature of the infection).

However, in mathematics education, the search for a single GOM has not been successful. While some researchers have developed measures that sample across the different skills and concepts taught in each year-level curriculum to attempt to monitor progress,[97] concerns have been raised about the measures’ sensitivity in detecting early difficulties.[98] In order to measure growth across the year, these measures must necessarily include a large amount of content that has not yet been taught, meaning many children are expected to score poorly in the first half of the year. This creates a ‘floor effect’ in the data, or a restricted score distribution, that weakens the sensitivity of the scores to reflect risk. This is especially problematic because the first half of the year is when the risk decision is most critical, while there is still time to deliver intervention. Further, because the measures include such a broad range of skills, they are incapable of detecting short-term growth from instructional interventions, which may focus on only a very small subset of what is tested on a GOM.

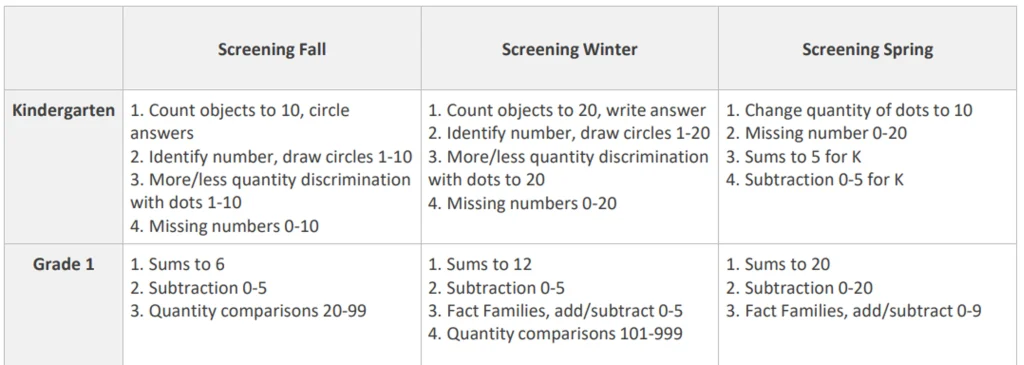

One solution to this challenge has been the use of Mastery Measurement to measure the acquisition of more specific sets of skills.[99] Such measures have been referred to as ‘Goldilocks measures’ and have been shown to be highly reliable for identifying more global mathematics risk.[100] Further, such measures are highly sensitive when used for screening[101] and intervention progress monitoring.[102] Mastery measurement requires that skills targeted for assessment must be closely related to the skills expected to be mastered during the course of the instructional year. When a student reaches mastery on a given assessment, then the assessment should progress to the next logical benchmark skill.
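The progression rule described above can be sketched as a simple lookup. The skill sequence and mastery criterion below are invented for illustration; they are not taken from SpringMath or any other published program.

```python
# Hypothetical skill sequence, ordered from earlier to later benchmark skills.
SKILL_SEQUENCE = [
    "count objects to 20",
    "identify numerals to 20",
    "add within 10",
    "subtract within 10",
]

# Hypothetical mastery criterion: correct responses per minute.
MASTERY_RATE = 20


def next_assessment(current_skill, correct_per_minute):
    """Return the skill to assess next under a mastery measurement rule."""
    position = SKILL_SEQUENCE.index(current_skill)
    mastered = correct_per_minute >= MASTERY_RATE
    if mastered and position + 1 < len(SKILL_SEQUENCE):
        return SKILL_SEQUENCE[position + 1]  # progress to the next benchmark skill
    return current_skill                     # keep assessing (and teaching) this skill


print(next_assessment("add within 10", correct_per_minute=25))
print(next_assessment("add within 10", correct_per_minute=12))
```

A student who meets the criterion moves on to the next skill in the sequence, while a student who does not continues to be assessed on the current skill.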

An example of skills targeted for Mastery Measurement in early levels of the SpringMath program is shown in Figure 8.

Figure 8: Skills targeted for screening in SpringMath (from www.springmath.com)

Measuring aspects of ‘number sense’ in the early years of primary school can effectively guide early maths screening

Despite different methodologies, both cognitive and behavioural psychology fields have come to remarkably similar conclusions regarding the maths skills and knowledge that predict later achievement. In particular, a number of recent systematic reviews and meta-analyses bring together the conclusions of hundreds of studies involving thousands of children.

Although the measures used by different researchers are varied, they are consistent in their attention to three interrelated bodies of knowledge about number. These same ‘subdomains’ reflect both what children know and understand and what they are able to demonstrate to inform decision making. These key aspects of core number knowledge and skill were identified by the National Research Council (NRC) in their comprehensive report Mathematics Learning in Early Childhood: Paths towards excellence and equity.[103] They are number, number relations and number operations, all of which contribute both independently and collectively to predicting future mathematical success.[104] [105] They have collectively been termed ‘number sense.’

‘Number sense’ is generally acknowledged to be essential for success in early mathematics. The term, however, means different things to different people in different fields. While the report of the Expert Advisory Panel on the National Year 1 Literacy and Numeracy Check[106] recommended ‘number sense’ as a focus for screening and emphasised its importance, they did not define it in any operational way. This review of the literature therefore builds on that earlier recommendation by defining number sense and the components which predict mathematical achievement.

‘Number sense’ is a popular but somewhat ‘muddy’ term. Some use it to describe the intuitive knowledge about number we have from infancy, termed ‘biologically primary’ knowledge, and others as a more complex set of competencies involving numerical reasoning and reliant on instruction, known as ‘biologically secondary’ knowledge.[107] Nevertheless, it is a useful construct due to the large amount of research that exists about its components and its high visibility as an essential component for screening and instruction. For the purposes of this report, ‘number sense’ describes the three domains of number, number relations and number operations discussed above and represented in Figure 9.

Figure 9: Components of Number Sense as visualised by Jordan, Devlin and Botello. Source: Core foundations of early mathematics: refining the number sense framework. Current Opinion in Behavioral Sciences (2022)

The National Research Council’s three domains of number, number relations and number operations are a useful framework through which we can examine measures which effectively predict mathematics achievement.

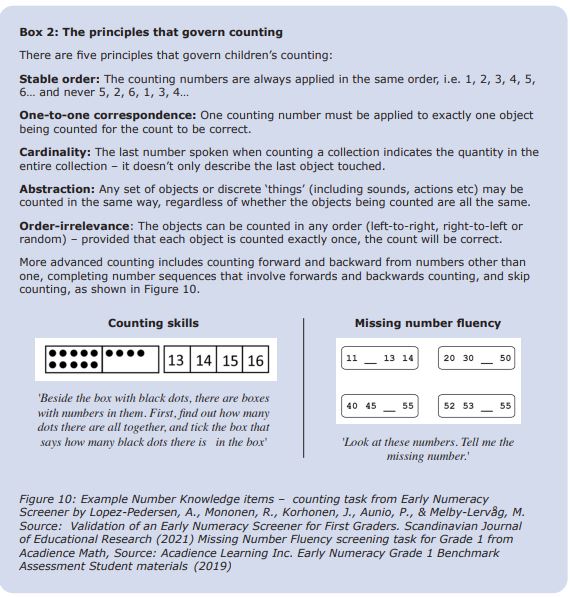

Number

According to the NRC, ‘number core’ knowledge includes the ability to recognise and write number symbols, match these to sets of objects and understand principles that govern counting. Key skills are having fluency with the number word list, one-to-one correspondence and cardinality (see Box 3 for explanations). A number of these aspects of number knowledge are reliable predictors of later achievement. Children’s counting skills including reciting number sequences, filling in missing numbers from sequences of numbers, counting sets and showing awareness of the principles which govern counting, predict later achievement,[108] [109] [110] with progression through a skill hierarchy reflecting more sophisticated strategies (e.g. counting from a number other than one) more predictive in the long term than simple counting.[111]

The ability to accurately identify and write numbers, usually single digit numbers, is a useful measure particularly in the early years.[112] [113] [114] Subitising – the ability to recognise small sets of objects without counting – has also been identified as a useful predictor in studies focused on the preschool years.[115]

Number relations

The number relations subdomain concerns the comparison of quantities and numbers, and reasoning in terms of more, less and the same. Cognitive scientists have focused significantly on measuring both informal, approximate systems of number and more accurate representations of a mental number line. In measuring the accuracy of the informal abilities, known as the Approximate Number System (ANS), children are asked to judge the greater of two collections of dots, with the task becoming harder as the ratio of the values decreases. The ANS has shown value in predicting mathematics achievement, but not to the same extent as more formal, exact and symbolic systems for comparing number such as comparing numerals to say which is more, or plotting a number accurately on a ‘bounded’ (marked at both ends) number line.[116] [117] [118] [119] Examples of these tasks are shown in Box 4.

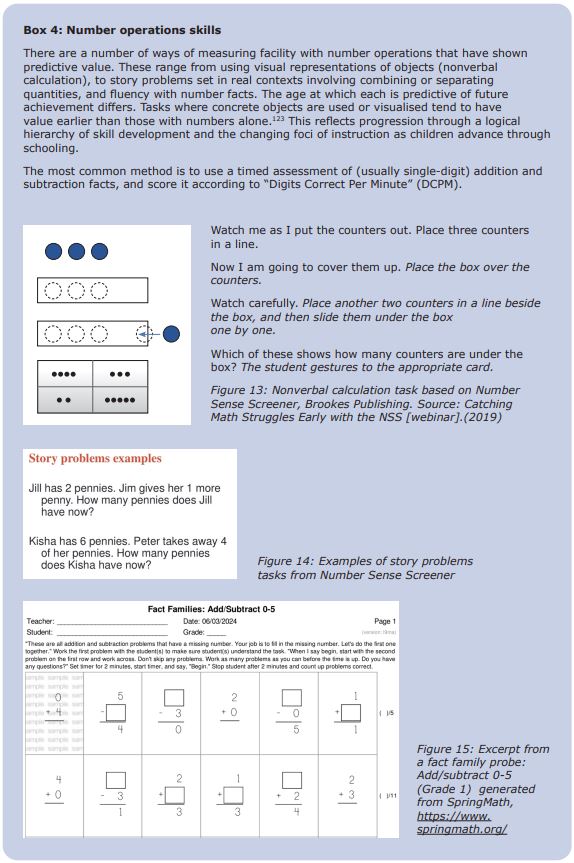

Number operations

The subdomain of number operations includes working with physical objects as well as mental representations of quantities and working solely with number symbols. Some studies have shown that even before students are able to work with number symbols, their nonverbal calculation abilities are related to future mathematical success.[120] [121] [122] For example, shown a collection of three counters which is then covered by a box, the child observes the interviewer putting another counter underneath the box and then is asked how many will be under the box now. Students can respond either verbally or by making/identifying a collection the same size as the one that is under the box. This minimises the influence of language skills on performance. Following the start of formal schooling, the utility of timed written measures of addition and subtraction with single digits to predict maths achievement has a strong research base.[123] [124] Box 5 contains examples of tasks that have been used successfully in screening to measure number operations skills.

General considerations for universal maths screening

Composite measures or single skill measures

The research reviewed shows that a broad range of skills and competencies can be used to predict maths achievement. Surprisingly, screeners that sample a larger number or broader range of measures are not necessarily more accurate in classifying students’ at-risk status. Especially in the primary school grades, it is possible to predict maths achievement with reasonable accuracy with carefully targeted single-skill mastery measures,[126] although some research cautions that single skill measures have a tendency to over-identify risk.[127]

The brevity of such an approach would certainly be appealing from an efficiency point of view. In fact, instructional programs based on sequences of carefully planned brief measures have been highly successful in identifying struggling students and raising achievement.[128] However, in the absence of such a systematic approach in Australia, overly brief measures may provide insufficient information to inform teaching in productive ways. Selecting a screening approach for Australian schools that will positively influence instructional emphases in addition to identifying at-risk students is a high priority. Multiple-skill measures structured around a teachable construct such as ‘number sense’ are likely to have a greater positive impact on the instructional practices of Australian teachers.

Different skills are predictive at different times

Although the measurement of skills and knowledge in the three domains referred to above as ‘number sense’ is predictive across the early years of school, the relative value of each changes depending on schooling level/age. While simpler skills such as knowledge of the counting sequence, number magnitude and number identification are strong predictors in the early years of school, calculation and word problem-solving are stronger predictors in the Primary School grades.[129]

Similarly, the predictive value of different skills is dependent on student expertise, with lower achievers in the early years more influenced by number knowledge and relations, and higher achievers by skills in number operations.[130] This reflects the fact that learning in mathematics is hierarchical, with the development and application of more complex skills dependent on a solid foundation of number knowledge.

The impact of domain-general skills also reduces in comparison to domain-specific skills (i.e. maths-related skills) as students progress in their schooling. Working memory and, in particular, spatial abilities, while predictive of maths success in the early years, have less impact in later primary school.[131] This means prior knowledge in mathematics becomes increasingly important as the demands of the maths curriculum increase. However, cognitive traits continue to play a role in the rate of mathematical learning throughout primary school.[132] [133]

Different number ranges and representations are predictive at different times

While all three subdomains of number sense are significant across the early years of development, research has demonstrated there is a developmental sequence in which children are able to apply knowledge in these domains — both with respect to number range and representation.[134] In the preschool years, children’s number sense is initially heavily influenced by working with non-symbolic representations of quantities within their subitising range (1-4). Because children are able to understand the cardinality (how many) of these sets through subitising, they are capable of demonstrating knowledge with number relations and simple operations with concrete materials in this range prior to extrapolating that knowledge to progressively larger sets.

At the Foundation and Year 1 levels, facility with symbolic number in these domains becomes increasingly important in predicting future success. Hence, screeners feature an increasing weighting towards tasks with numerals as students progress through school. In addition, predictive tasks reflect a progressive increase in the number range to which students can apply their number sense (specifically the number and number relations subdomains): from numbers to 20 in Foundation to numbers to 100 in Year 1.

Different skills/competencies are predictive of short-term vs longer term achievement

Research has revealed different aspects of number competence measured in the early years predict short- versus long-term achievement. More intuitive number skills — such as the Approximate Number System (ANS), measured by comparing two significantly different quantities of dots — are more predictive of maths achievement in the early years of schooling. In contrast, success in later primary school is more dependent on arithmetic knowledge such as calculations and using arithmetical operations.[135] The implication is that while intuitive skills lay the groundwork for initial learning about number, they are insufficient for longer-term success with school mathematics which relies more on facility with precise arithmetic.

Therefore, screening assessments at different year levels should be customised to the stage of schooling: measures should focus on skills that are both most predictive and most relevant to educational decision making at that stage.

Fluency is more sensitive than accuracy

Having established what to measure, we then need to consider how. Is it enough to be accurate with skills, or do children also need to be fluent, in order to succeed in later mathematics? How do we ensure we are measuring these skills efficiently, in ways that lead to accurate decisions about who is at-risk?

Most research studies which have confirmed the utility of early maths screening measures have used timed delivery, collecting data about the rate of correct responding. Some researchers have investigated the relative value of children’s accuracy in responding to these tasks as opposed to their fluency. Fluency research has a long history in education and refers to the efficiency and flexibility with which children can access information that has been learned and find solutions to problems. In mathematics assessment, fluency is most often measured through timed tasks that give a value of how many correct responses have been given in a set timeframe. If children respond more quickly, it is assumed they are therefore using more efficient strategies that demand fewer mental resources.

In timed testing, it is possible for students with the same score to have different profiles of responses. For example, one student might be slow but accurate while another student with the same score responds quickly but makes a large number of errors. Adding an accuracy criterion to the decision-making formula would distinguish between these two profiles.
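The two profiles described above can be illustrated with a minimal sketch. The class name `TimedResult`, the one-minute task length and all item counts are hypothetical values chosen for illustration; they are not drawn from any of the studies cited.

```python
from dataclasses import dataclass


@dataclass
class TimedResult:
    """One student's result on a timed task (all values hypothetical)."""
    attempted: int   # items attempted within the time limit
    correct: int     # items answered correctly
    minutes: float   # length of the timed task

    @property
    def fluency(self) -> float:
        """Rate score: correct responses per minute."""
        return self.correct / self.minutes

    @property
    def accuracy(self) -> float:
        """Proportion of attempted items answered correctly."""
        return self.correct / self.attempted if self.attempted else 0.0


# Two students with the same rate score but different response profiles.
slow_accurate = TimedResult(attempted=20, correct=20, minutes=1.0)
fast_error_prone = TimedResult(attempted=40, correct=20, minutes=1.0)

for label, result in [("slow but accurate", slow_accurate),
                      ("fast but error-prone", fast_error_prone)]:
    print(f"{label}: {result.fluency:.0f} correct/min, "
          f"{result.accuracy:.0%} accurate")
```

Both students receive the same fluency score, and only the accuracy value separates them. Whether that extra value improves screening decisions is an empirical question.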

Amanda VanDerHeyden and colleagues recently reviewed the evidence about the value of accuracy and fluency criteria in decision making.[136] Adding an accuracy criterion (percentage correct responses) to the fluency criterion added nothing to the accuracy of screening measures in mathematics. Students who were fast tended to be accurate, and those who were inaccurate tended to be dysfluent. Therefore, students who were dysfluent were at risk.[137] A more detailed diagnostic assessment could then be administered to shed light on the particular strategies and patterns of error being shown by individual children identified as dysfluent, to inform support.

Measuring fluency makes sense as research shows the slow speed of processing numerical information and executing numerical procedures is a hallmark of children with mathematical difficulties (MD). Students with MD are slower to make numerical comparisons (which number is bigger/more)[138] and tend to use slower and more effortful counting procedures for a longer period of time — not switching to retrieval from memory.[139]

Fluency also has the added benefit of being sensitive to changes over time. When measuring accuracy alone, children may reach 100% accuracy in responding, at which point no further growth can be measured. This is known as a ‘ceiling effect’. The highly accurate student may still be very slow and effortful in completing the maths task, which will reduce both their ability to apply that skill in other contexts effectively, and their likelihood of retaining that skill over time.

In contrast, a fluency measure continues to show growth after students have acquired skills (achieved accuracy) and are becoming more efficient in applying them (increasing fluency). This means well-designed screening tools can be used or adapted to measure students’ progress over time and inform decisions about whether students are responding sufficiently to instruction, or whether a change needs to be made.
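The contrast between a ceiling on accuracy and continued growth in fluency can be shown with a short sketch. The four screening occasions and their item counts below are invented for illustration only.

```python
# Hypothetical results for one student across four screening occasions:
# (items attempted, items correct) on a one-minute timed task.
occasions = [(10, 8), (12, 12), (18, 18), (25, 25)]
MINUTES = 1.0

# Accuracy: proportion of attempted items that were correct.
accuracy = [correct / attempted for attempted, correct in occasions]
# Fluency: correct responses per minute.
fluency = [correct / MINUTES for _, correct in occasions]

# Growth between consecutive occasions on each measure.
accuracy_growth = [later - earlier for earlier, later in zip(accuracy, accuracy[1:])]
fluency_growth = [later - earlier for earlier, later in zip(fluency, fluency[1:])]

print("accuracy:", accuracy)
print("fluency: ", fluency)
```

In this invented series, accuracy reaches 100% at the second occasion and shows no further change, while the rate score keeps rising at every occasion.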

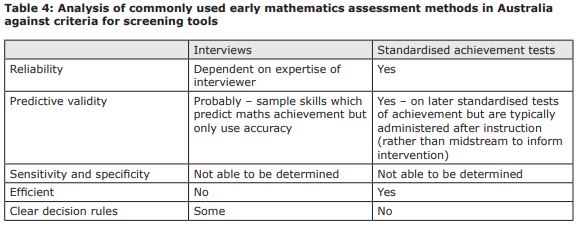

How well do current tools fit criteria as screening measures?

It has been established that effective screening tools have the following characteristics:

- They yield reliable scores

- They generate scores that predict future mathematics difficulty

- Their scores lead to accurate decisions and have sensitivity (don’t miss children at-risk) and specificity (don’t identify children who would catch up anyway). Ideally, screening measures should have the capacity to be repeated or triangulated with other tools to make identification more accurate (gated screening).

- They are easy and efficient to administer (in both time and money)

- They have clear decision rules (classify children as ‘at-risk’ or ‘not at-risk’) and have direct implications for instruction
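As a minimal sketch of how sensitivity and specificity are calculated, the function below compares screening flags with later outcomes. The function name `screening_accuracy` and the ten-student data set are hypothetical and serve only to show the arithmetic.

```python
def screening_accuracy(flagged, later_difficulty):
    """Return (sensitivity, specificity) for paired lists of booleans.

    flagged[i]          -- the screener classified student i as at-risk
    later_difficulty[i] -- student i later experienced maths difficulty
    """
    true_pos = false_neg = true_neg = false_pos = 0
    for was_flagged, had_difficulty in zip(flagged, later_difficulty):
        if had_difficulty:
            if was_flagged:
                true_pos += 1
            else:
                false_neg += 1   # an at-risk child the screener missed
        else:
            if was_flagged:
                false_pos += 1   # flagged, but would have been fine anyway
            else:
                true_neg += 1
    if true_pos + false_neg == 0 or true_neg + false_pos == 0:
        raise ValueError("need at least one student in each outcome group")
    sensitivity = true_pos / (true_pos + false_neg)
    specificity = true_neg / (true_neg + false_pos)
    return sensitivity, specificity


# Hypothetical data for ten students.
flagged = [True, True, True, False, True, False, False, False, False, False]
later_difficulty = [True, True, True, True, False, False, False, False, False, False]

sensitivity, specificity = screening_accuracy(flagged, later_difficulty)
print(f"sensitivity = {sensitivity:.2f}, specificity = {specificity:.2f}")
```

Here the screener catches three of the four students who later struggled and correctly clears five of the six who did not.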

The conceptualisation of a multi-tiered approach to support, which encompasses universal screening measures, is fairly new to the education landscape in Australia. The mathematics assessment tools currently in use in Australia were therefore not designed with the specific intention of screening children for mathematical difficulties, so mapping them against the criteria for such tools may be considered unfair. However, as they are the dominant tools available to schools for early maths assessment purposes, it is useful to consider to what extent they meet the need for screening.

Are they reliable?

Herbert Ginsburg, a leading researcher in understanding children’s thinking about mathematics, described the clinical interview method this way: [140]

“At the heart of the clinical interview method is a particular kind of flexibility involving the interviewer as measuring instrument. Although usually beginning with some standard problems, often involving concrete objects, the interviewer, observing carefully and interpreting what is observed, has the freedom to alter tasks to promote the child’s understanding and probe his or her reactions; the interviewer is permitted to devise new problems, on the spot, in order to test hypotheses; the interviewer attempts to uncover the thought and concepts underlying the child’s verbalizations. The clinical interview seems to provide rich data that could not be obtained by other means.”

Fundamental to a successful interview is a skilled and well-informed practitioner who can interpret and respond to children’s thinking in real time to gain an accurate picture of their current thinking strategies and misconceptions. This knowledge then enables the educator to give appropriate feedback and support.[141]

On the other hand, a poorly administered diagnostic interview can, at best, give unreliable results; and at worst, distort children’s thinking.[142] Therefore, it is unsurprising that the clinical interview method has become central to research projects intending to develop teacher knowledge about the learning and teaching of mathematics, termed ‘pedagogical content knowledge’. The utility of individual interviews in concert with professional learning for developing teacher knowledge is well established in maths education literature.[143] [144] [145]

Approaches that incorporate the use of individual interviews, such as the Early Numeracy Research Project and Mathematics Recovery, have also recognised the need for significant professional development to ensure teachers are able to use and interpret the interview tools successfully. Such training requires a further investment, which is rarely funded by school systems.

In sum, individual diagnostic interviews can be reliable measures of students’ mathematics abilities, but this is very much dependent on the expertise of the interviewer.

Do they have sufficient predictive validity? Sensitivity and specificity?

Measures that produce scores with good predictive validity are good predictors of later maths achievement. Central to this idea is, of course, the collection of data mid-stream while the opportunity still exists for intervention to be delivered. As stated earlier in this paper, the design of standardised achievement tests as infrequent measures typically administered at the conclusion of an instructional period (e.g. to inform end-of-year reporting) is at odds with the purpose of a screener as ‘predictive’.

Having ‘predictive validity’ may mean the test scores themselves have been shown to predict later maths achievement, or mastery of the skills measured is predictive of later achievement.

According to the research described earlier in this paper, such tools should measure the construct of ‘number sense’: number (including counting), number relations and number operations (including addition and subtraction combinations). However, the complexity of skills within those domains, and the relative value of them, changes as students gain proficiency in maths.[146] Screening measures focused on number knowledge and number relations have a higher predictive validity in the Foundation Year, whereas operations and calculation gain importance in Year 1. Measurement under timed conditions is valuable to indicate fluency.

A number of the tools in use in Australian schools do measure aspects of number sense, particularly interviews.

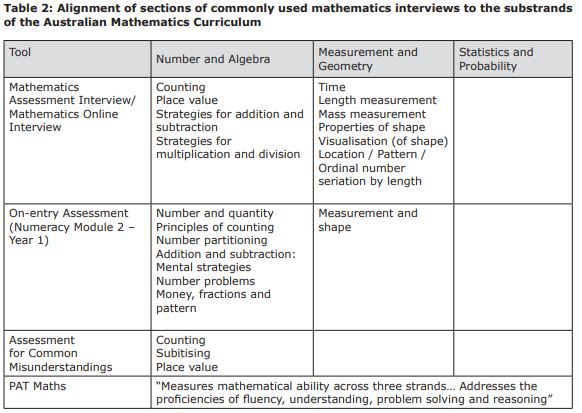

Both interviews and standardised assessments typically collect information across a range of mathematical areas. While many of the interview tools focus on early number skills and number sense, most also gather information across multiple domains of mathematical knowledge including time, measurement and shape (see Table 2).

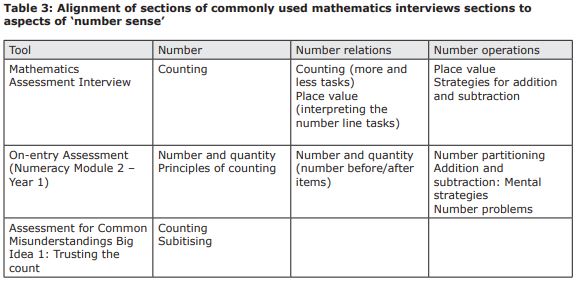

Within the domain of number, current interviews sample some of the skills and knowledge shown to predict mathematical achievement. These include counting, number recognition and addition/subtraction strategies (see Table 3).

Table 2 shows that both the MAI/MOI and On-entry Assessment (Numeracy Module 2) measure skills related to number sense, while the Assessment for Common Misunderstandings measures a subset of these. While the testing of number sequence is fairly common, only the MAI also includes an item targeting number magnitude (plotting a number on a bounded number line), which has been shown to be related to future success with mathematics.

Due to the interview format, none of the tasks in these tools are presented in ways that could measure fluency, which has been identified as a key difference between students with and without mathematical difficulties. Many also include geometry and measurement items that — although undoubtedly useful for teaching — have not been shown to be predictive of future achievement in mathematics.

The Progressive Achievement Tests, when administered at the beginning of high school, have been shown to predict mathematics grades later in high school.[147] Such studies have not been conducted in primary school. The PAT-Maths is not focused on number sense; it samples across all content and proficiency strands, as might be expected of a test primarily designed to measure achievement (rather than risk).

Tools designed for screening assist educators to make decisions about who is at-risk and needs additional support. The tools currently in use in Australian schools were not designed for this purpose, but to gather diagnostic information about children’s current strategies and knowledge (interviews) and maths achievement (standardised achievement tests). While they do include tasks that can reasonably be expected to predict maths achievement, most do not attempt to classify children as at-risk/not at-risk. Those that do have not been studied in ways that enable analysis of decision accuracy.

Despite this limitation, existing tools could still inform an effective screening process if used strategically. In an Australian context where systematic screening processes are still being established, an acceptable second ‘gate’ may be to use existing mathematics assessment tools with students identified as at-risk by the universal screener focused on number sense. Using existing tools in this way would satisfy a core underlying principle of gated approaches to screening: to triangulate data from multiple sources. In this model, such tools would require decision rules so teachers could clearly identify who was at-risk according to the second gate. This process could both increase decision accuracy and reduce the number of students needing more time-intensive assessment methods.
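The two-gate process described above can be sketched as follows. The cut scores, student labels and scores are all hypothetical. A real second gate would need decision rules established for the specific tool used.

```python
GATE1_CUT = 15  # hypothetical cut score on the universal number sense screener
GATE2_CUT = 10  # hypothetical decision rule for the existing assessment tool


def gated_screen(gate1_scores, administer_gate2):
    """Apply two gates; return (flagged at gate 1, at-risk after gate 2).

    gate1_scores     -- dict of student -> universal screener score
    administer_gate2 -- callable returning a student's second-gate score;
                        it is only called for students flagged at gate 1
    """
    flagged = [s for s, score in gate1_scores.items() if score < GATE1_CUT]
    at_risk = [s for s in flagged if administer_gate2(s) < GATE2_CUT]
    return flagged, at_risk


# Every student sits the universal screener (gate 1).
gate1_scores = {"A": 22, "B": 12, "C": 9, "D": 18, "E": 14}
# Only flagged students sit the more time-intensive second assessment.
gate2_results = {"B": 13, "C": 6, "E": 8}

flagged, at_risk = gated_screen(gate1_scores, gate2_results.__getitem__)
print("flagged at gate 1:", flagged)
print("at-risk after gate 2:", at_risk)
```

In this example, three of five students go on to the second gate, and two are confirmed as at-risk by both sources of data.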

Are they efficient?

According to internationally renowned mathematics and reading instruction expert Dr Russell Gersten,[148] “we understand a good deal more about what comprises a comprehensive assessment battery, but are less certain of the elements of an efficient assessment battery” (p.441). The comprehensive nature of the maths assessment techniques currently in use in Australia and outlined above is a reflection of this challenge.

Using individual interviews to determine mathematical strategies has practical disadvantages, chief among them the considerable investment of time (and therefore money). The individual interviews commonly used for early mathematics assessment in Australia take at least 30 minutes per student to administer. Across a class of 24 children, this translates to a loss of around 12-16 instructional hours, at a cost of around $1300-$1600 per classroom (relief teacher cost only). Further time is then required to interpret the interview results and incorporate the implications into classroom planning.
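The time estimate above follows from simple arithmetic, sketched below. The class size of 24 and the 30-minute minimum come from the text. The 40-minute upper figure is an assumption, chosen because it reproduces the 16-hour end of the stated range.

```python
CLASS_SIZE = 24  # class size used in the text


def interview_hours(minutes_per_student, class_size=CLASS_SIZE):
    """Total hours needed to interview every student in a class individually."""
    return minutes_per_student * class_size / 60


low_estimate = interview_hours(30)   # the stated minimum of 30 minutes each
high_estimate = interview_hours(40)  # assumed upper bound of 40 minutes each

print(f"{low_estimate:.0f} to {high_estimate:.0f} hours per class")
```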

Standardised achievement tests are undoubtedly more efficient to administer, typically requiring a single classroom period. However, further time is then required to train teachers to analyse and interpret results, and to give them the time to do so, so that the data gathered can be applied to classroom planning.

Do current tools have clear decision rules that lead to instructional actions?

Interview tools differ in the extent to which they supply decision rules. In some systems, a criterion of minimum ‘growth points’ on the MAI is used to determine which children are at risk. If an intervention program (e.g. Extending Mathematical Understanding [EMU]) is offered at the school, students are then recommended for inclusion. In contrast, teachers from WA receive a spreadsheet of On-entry Assessment results without specific guidance on how the information should be used to identify at-risk students or provide support. These decisions are left to the teacher’s discretion and depend on the teacher’s expertise and resources.

Similarly, standardised achievement tests provide standardised scores that rank students against the scores expected of their age peers. Teachers can broadly see which students are performing above, below and at average levels for their age. Some guidance may be provided as to the sorts of skills that typically benefit students at particular levels of achievement. However, due to the large amount of information provided across such a broad range of concepts and skills, it can be challenging for teachers to know where to start in responding to this data, and no ‘decision rules’ are provided.

Effective screening tools must not only identify who is at risk, but also specify an appropriate educational response. In addition to accurately predicting mathematics difficulty, ‘number sense’ is a useful construct for teachers because the skills and knowledge it comprises are teachable and have direct implications for classroom teaching and intervention programs. The components of ‘number sense’ that predict mathematics achievement in formal schooling are not those we would consider ‘biologically primary’ (or innate) skills. Knowing the sequence of counting words, counting collections in accordance with the principles of counting, representing collections with numerals and representing/comparing the magnitude of numerals are ‘biologically secondary’ skills which require instruction, as are solving mathematical number problems and completing precise calculations.

Although such learning is usually ‘playful’, children do not learn the skills listed above in societies without formal educational systems. Even where children enter formal schooling already having some of those skills, it is due to the presence of some sort of explicit informal instruction in the home which resembles experiences provided in school settings.[149]