Executive summary

- Every Australian classroom and school relies on instructional materials to deliver lessons, assign tasks to students, assess students' progress and much more. Over recent years, the volume of materials available to teachers, especially digital content, has increased markedly.

- Australian teachers face a heavy workload, and part of that burden is finding usable instructional materials that they can be confident using effectively. However, most teachers and schools decide individually which materials to use, often without access to centralised and trusted guidance. This adds to teacher workload and to the variability in practice within[1] and between schools.

- Though all Australian schools and teachers endeavour to use high-quality instructional materials (HQIM), there are few markers or indicators of quality that can help them make evidence-based instructional decisions.

- As other industries demonstrate, standards guide consumer understanding and product quality, ensuring safety and effectiveness. To the extent that such standards exist in Australian education systems, they target teaching practices and student learning expectations, but not the materials used to deliver teaching, assessment, intervention and so on.

- Certifying instructional materials could boost resource quality and decision-making confidence in schools.

- Quality evaluation of HQIM should be systematic and comprehensive, focusing on evidence-based criteria and peer review to ensure alignment with educational standards and outcomes.

- Policies from educational authorities that support the structured evaluation and implementation of HQIM show potential for improving educational outcomes and aiding school decisions.

- Over recent years, international education systems, such as those of the United States and United Kingdom, have developed various frameworks for the quality assurance of instructional materials. In Australia, the establishment of the Australian Education Research Organisation (AERO) has done much to disseminate evidence-based practices to educators and policymakers, but AERO has not yet provided guidance on the use of HQIM.

- Establishing an Australian Standard for HQIM could not only elevate the quality of educational resources but also refine schools’ decision-making processes regarding their selection.

- An Australian model for an HQIM eco-system would rest on three pillars:

- An Australian Standard for HQIM that focuses on evidence, alignment with achievement standards, multi-tiered systems of support, assessment, professional development and support, and digital efficiency and safety;

- An online HQIM Hub, modelled on the US EdReports platform, to publish independent and transparent reviews of HQIM against the Australian Standard;

- Incentives for researchers to focus on scalable, broad-reaching initiatives in HQIM.

- Together, these pillars would improve the quality of evidence-based instructional materials available to schools and teachers, reduce excess workload and variability in practice, and support a research-to-practice culture that will ultimately benefit student outcomes.

Introduction

Recent education reforms in Australia have justifiably concentrated on broad issues such as a national curriculum, accountability measures including NAPLAN, and the establishment of the Australian Education Research Organisation.[2]

Considerably less attention, however, has been paid to instructional materials. The market for these materials has been dramatically transformed by digital disruption and the surge in open access resources, challenging policymakers and schools to adapt their practices quickly and effectively.

The Australian Curriculum and its state-based derivatives set out overarching standards detailing the knowledge and skills expected of students at particular stages of schooling.[3] However, the classroom instructional resources used to achieve these outcomes remain largely unregulated, leaving educators to navigate a landscape of unvetted online resources, contemporary educational theories and unproven materials.

For example, the literacy intervention program Reading Recovery received widespread adoption and investment despite lacking a solid evidence base and relying on the non-explicit Whole Language approach to literacy.[4] This investment did not stem from a shortfall of good intentions among educational authorities, school leaders and teachers; rather, it reflects the absence of quality information and policy structures that would have allowed more informed decisions at all levels.

Further illustrating this challenge, a recent Grattan Institute survey indicated that fewer than 30% of Australian teachers were provided with instructional materials by school leaders, or given assistance in selecting them, while fewer than half of respondents indicated that teachers were expected to consistently use the same instructional materials across a learning area.

About a third of teachers were not provided with any instructional materials by their school.[5] With evidence suggesting that more than 90% of Australian teachers do not feel they have adequate time to prepare lessons,[6] it is hardly surprising that teachers often resort to unregulated or unvetted sources.

A study from the United States found that when implementing state-based standards, Google searches were the primary resource for almost all teachers surveyed, with many also routinely using unregulated online marketplaces such as TeachersPayTeachers.com for curriculum planning.[7]

Similarly, survey findings from Australian teachers highlight significant gaps in system or departmental support for adopting evidence-based curriculum resources,[8] [9] underscoring a global trend towards unvetted and unregulated resources.

High-Quality Instructional Materials (HQIM) are defined as educational resources that effectively support student learning by aligning with curriculum standards and applying evidence-based teaching and learning approaches. For students to meet, or exceed, Australian Curriculum achievement standards, teachers must have access to HQIM that align with these standards.

Furthermore, it would be reasonable to expect these instructional materials, including e-textbooks, digital content, lesson plans and assessments, to be grounded in a solid evidence base that enhances teaching practice and maximises student learning. Yet despite their importance and the substantial resources provided to Australian schools, classroom instructional materials are not required to meet a defined standard, nor are they vetted by relevant educational authorities. Identifying and addressing this gap in the quality of instructional materials would be an important step forward for the Australian education sector.

This paper seeks, in part, to address this disconnect by drawing on international policy examples for curriculum-aligned HQIM. It will explore initiatives to establish an HQIM eco-system in Australia that translates evidence-based practice into practical, school-ready instructional resources at scale. These include the creation of an Australian Standard for HQIM and the independent review of published resources against this standard. Vetting materials against evidence-based criteria is intended to build consumer awareness and confidence, while also encouraging researchers and publishers to align their materials with the new standard.

In doing so, the paper will explore the potential for collaboration among education authorities, publishers, researchers and agencies to build an eco-system that supports educational advancement.

Why Standards Matter

Would standards, through increased scrutiny, enhance the quality of instructional materials, influence schools' choices and affect what publishers decide to produce? Standardising classroom materials may appear to be a significant reform, but a broader view beyond education shows that many products and services are designed, produced and certified to standards. Standards guide consumers in what they are purchasing, while steering producers towards effective and safe products.[10]

Consider sunscreen: in Australia, manufacturers adhere to established criteria for characteristics such as the Sun Protection Factor (SPF), ultraviolet protection and water resistance. Sunscreen manufacturers are required to use certain active ingredients, perform human trials and submit their products to an approval process run by the Therapeutic Goods Administration.[11]

In the education sector, standards are also valued. The Australian Institute for Teaching and School Leadership (AITSL) has established the Australian Professional Standards for Teachers with a focus on Professional Knowledge, Practice and Engagement.

For example, Standard 1: Know students and how they learn, specifies an expectation that teachers will "structure teaching programs using research and collegial advice about how students learn", while also using "teaching strategies based on knowledge of students' physical, social and intellectual development and characteristics".[12] These standards guide professional development, career progression and performance appraisal, and they underpin the teacher registration process in each state and territory.

Additionally, the previously mentioned Australian Curriculum provides a national framework of student Achievement Standards from Foundation to Year 10 across eight key Learning Areas. Consider this Achievement Standard from the Year 2 Mathematics curriculum: "By the end of Year 2, students order and represent numbers to at least 1000, apply knowledge of place value to partition, rearrange and rename two- and three-digit numbers in terms of their parts and regroup partitioned numbers to assist in calculations".[13]

To draw these two sets of standards together, the Australian Curriculum Achievement Standards articulate the knowledge and skills a student should demonstrate, such as representing and manipulating three-digit numbers by the end of Year 2. Meanwhile, the Professional Standards for Teachers define the teacher practices expected to achieve these student outcomes, such as structuring teaching programs based on how students learn, though without detailing the precise structure of these programs.

These standards give Australian school leaders and other bodies frameworks and guidelines for evaluating student outcomes and teaching practice. Within schools, they influence activities such as curriculum planning and professional development. They may even guide the selection of HQIM, whether an early years literacy program, a secondary maths learning resource or a science assessment tool. However, because educational standards do not extend to the publishers of instructional materials, that selection is largely left to chance.

Establishing an Australian Standard for HQIM could not only elevate the quality of educational resources but also refine schools’ decision-making processes regarding their selection.

Materials Matter: International HQIM Examples

Examining education systems such as those of the UK and the United States shows how HQIM can be reviewed against criteria-based standards, while also offering direction for a broader Australian HQIM strategy. These countries have comparable structured curriculum frameworks but take different approaches to implementation.

For instance, the US Common Core State Standards are optional for states and cover only two subjects, English language arts/literacy and mathematics, while the UK has a mandated, broader curriculum. Both countries provide several examples of organisations promoting or reviewing HQIM or broader evidence-based teaching resources, including the UK's Evidence 4 Impact and the Education Endowment Foundation, and the US's National Center on Intensive Intervention.

Two notable examples, the government-initiated phonics validation process in the UK and the not-for-profit EdReports in the US, are explored here because of their distinctive approaches to expanding the use of HQIM, both at scale and through criteria-based evaluations.

The UK has adopted standardised assessments in literacy and numeracy, similar to Australia's NAPLAN program.[14] These include a Year 1 Phonics Check, which has influenced Australian states such as South Australia and New South Wales to adopt similar approaches. To help UK schools select HQIM for teaching phonics, the Department for Education actively invites publishers of phonics teaching programs to have their resources vetted and approved through a validation process.

For a program to be approved as a systematic synthetic phonics (SSP) teaching program, its publisher must have it evaluated against specific criteria. Publishers must demonstrate that their instructional resources fulfil 16 criteria, including that the program:

- constitutes a complete SSP teaching program;

- establishes synthetic phonics as the primary approach to decoding printed words, rather than presenting it alongside other strategies in a balanced approach;

- provides frequent and ongoing assessment of student progress with accommodations to meet the needs of students at risk;

- includes resources for teachers to effectively deliver content, as well as access to program specific training.[15]

The validation process relies largely on a self-assessment completed by publishers, which a Department for Education panel then examines. The outcomes of the review are not made public, which prevents school leaders from comparing the performance of one product against another on the 16 criteria.

This approach to vetting HQIM guarantees compliance with a minimum standard but does not provide the detailed information that could help school leaders make more informed choices. To return to the sunscreen example, this is equivalent to a manufacturer stating that a product meets a minimum SPF requirement without specifying whether it is rated SPF 15+, 30+ or 50+, and placing the onus on a government panel to disagree.

Publishers can brand their products as approved through the department's validation process, signalling to school leaders that these materials have undergone evaluation. This scenario echoes George A. Akerlof's economic theory, set out in "The Market for 'Lemons'", which shows how asymmetric information between sellers and buyers can lead to market inefficiencies.

In this context, phonics validation acts as a quality signal that reduces the information gap. However, the absence of detailed performance data against the 16 criteria could still lead to adverse selection, where schools cannot distinguish merely adequate programs from those of superior quality.[16] With 45 phonics programs now approved,[17] detailed performance information would better assist schools in selecting programs.

Image 1: Publisher’s website with a Department for Education approval label. Source: West Berkshire Education, 2024.

Smaller-scale examples of this approach exist in Australia, such as The Phonics Initiative in Western Australia, which complements funding for professional learning and resource delivery[18] with a list of approved phonics programs for schools. However, its limitation to one jurisdiction highlights the need for national strategies that can support and scale similar initiatives in all states.

The HQIM landscape in the United States offers insights into how a similar eco-system could be implemented in Australia. Because adoption of the Common Core State Standards (CCSS) is optional for US states, researchers have been able to compare different approaches, including the different ways jurisdictions review and recommend HQIM.

Additionally, education departments and not-for-profits have created structures for the independent and transparent review of materials aligned to the CCSS, creating valuable tools for schools and education authorities. Studies by the RAND Corporation have concluded that, with explicit policy, states can help schools select appropriate HQIM to meet curriculum targets through rigorous reviews, incentives and dedicated professional development.[19]

For example, the State of Louisiana implemented rigorous, process-driven approaches to the review and selection of HQIM in schools. Reviews were posted online with reference to the materials' alignment with the CCSS. School decision-making was further influenced by financial incentives, through discounts on vetted materials, and by state-provided professional learning for HQIM that met expectations.[20]

Louisiana was at the forefront of creating the Instructional Materials and Professional Development (IMPD) Network, a group of states dedicated to fostering HQIM in their schools as a mechanism for meeting CCSS objectives. Other states, including Delaware, Massachusetts, Mississippi, Nebraska, New Mexico, Rhode Island, Tennessee and Wisconsin, went on to create policies to identify and promote the use of HQIM.

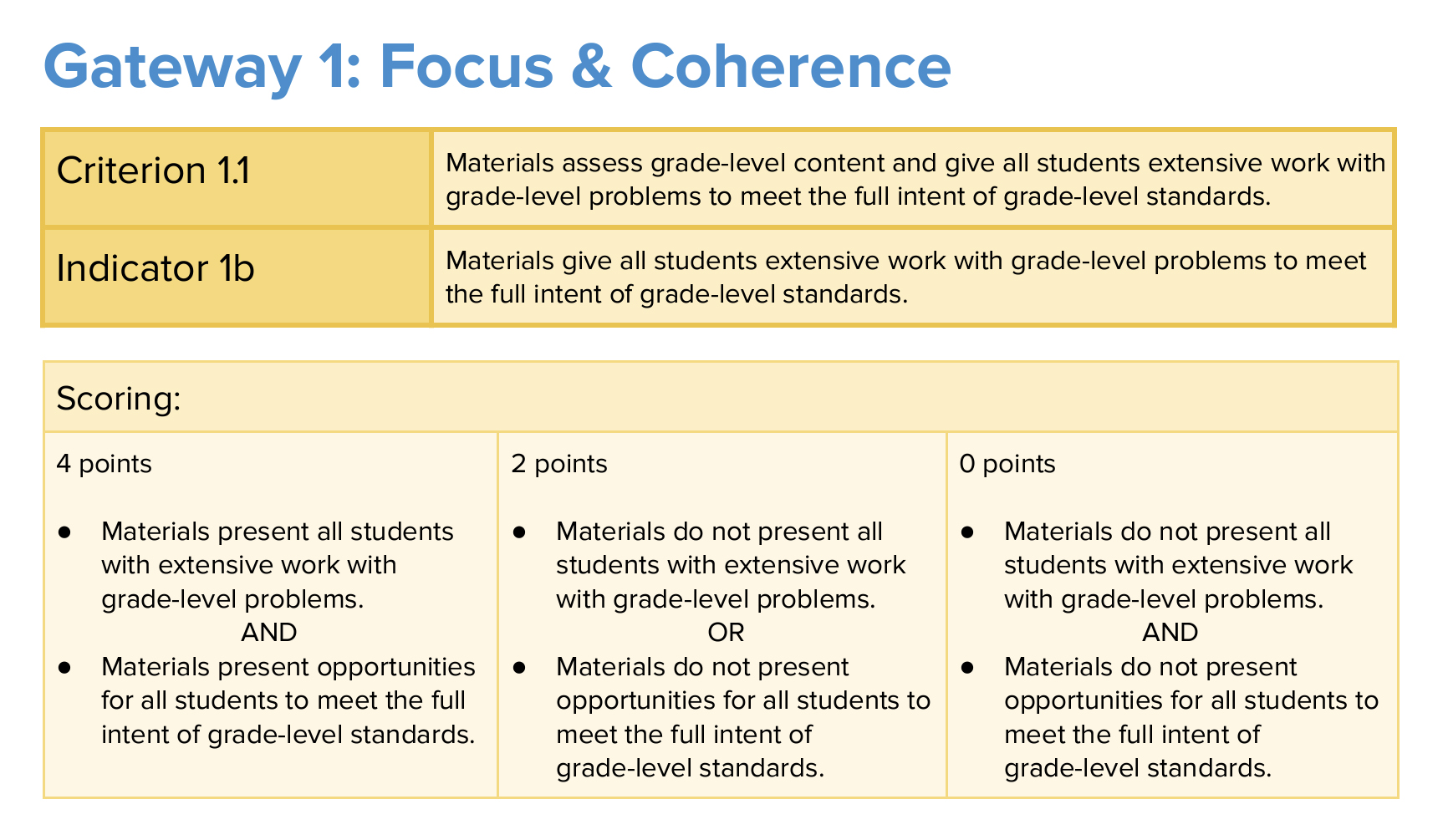

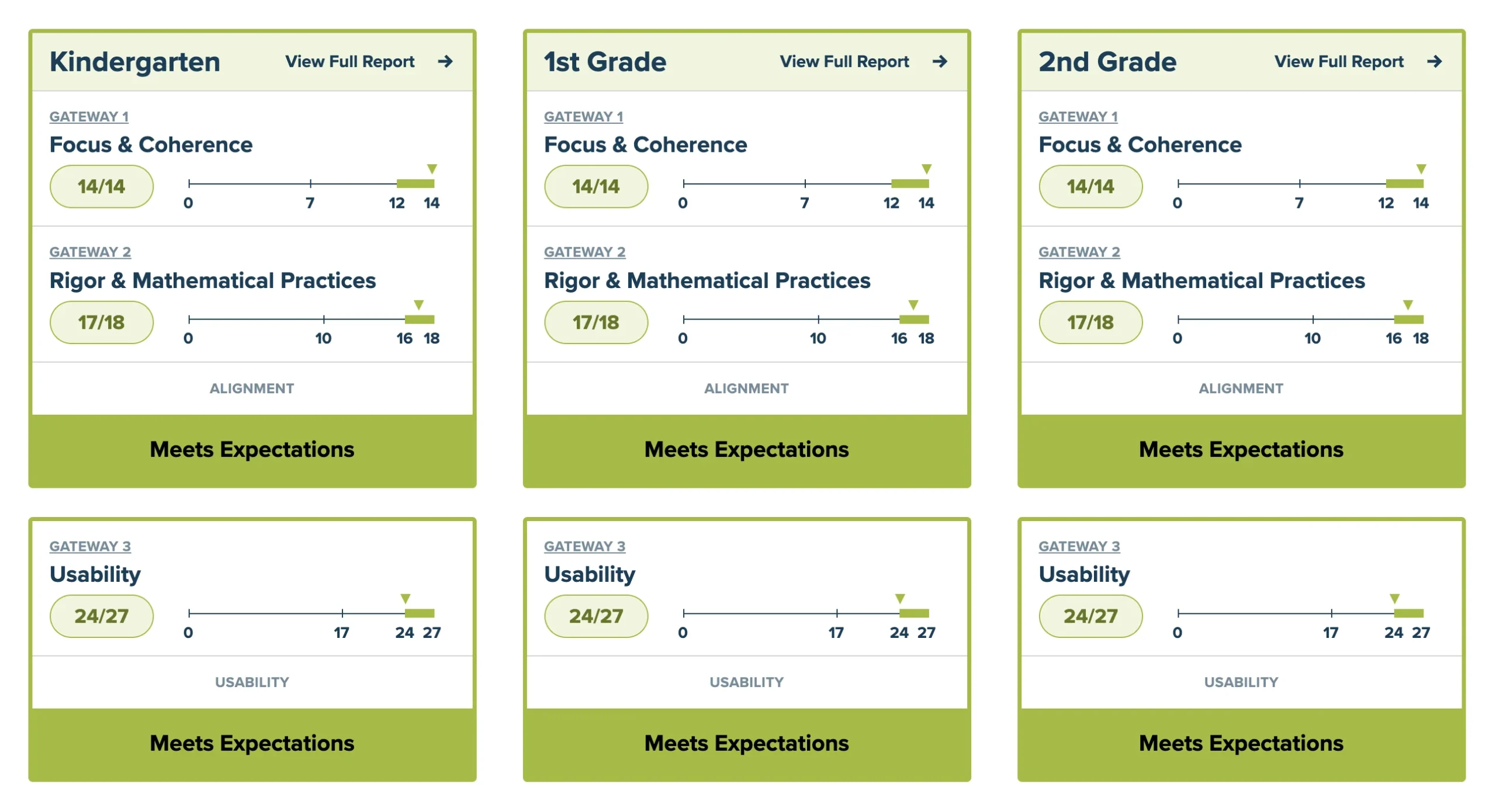

Many of these IMPD member states draw on detailed evaluations of HQIM by the organisation EdReports. As an independent, not-for-profit organisation, EdReports aims to increase access to HQIM by independently reviewing published materials against specific criteria, including Focus and Coherence, Rigour and Usability.[21] Unlike the UK's phonics teaching program validation process, which relies heavily on self-assessments from publishers, EdReports publishes full reviews online, based on evaluations by teams of four to five trained educator reviewers.

To achieve this, EdReports employs a gateway system for evaluating all materials, beginning with Focus and Coherence to assess alignment with the CCSS. As demonstrated in Image 2, materials are scored against a points system for both alignment with grade-level standards and opportunities for student engagement. The process produces detailed reports available online, which users can search and filter by domain, grade level and review performance. Unlike the UK phonics process, which depends on publisher submissions, EdReports proactively identifies materials for evaluation through market research and recommendations, with the aim of ensuring comprehensive and unbiased reviews.

Image 2: Example of an EdReports criteria for Focus and Coherence. Source: EdReports, 2023a.

Image 3: Example of a Mathematics Grades K-2 product review on EdReports. Source: EdReports, 2023b.

EdReports has been embedded into education policy for HQIM selection in states such as Ohio and Rhode Island. Other states have partnered with EdReports to create their own state-specific tools, such as Massachusetts' CURATE[22] website of HQIM curated for the state's context, or a rubric for schools in Mississippi to review their own materials.[23] EdReports' influence extends widely, regardless of whether a state has formally implemented the CCSS or adopted a strategic focus on vetted HQIM. According to its 2022 annual report, more than 1,400 school districts, representing almost 16 million US students, have used EdReports reviews to inform their school planning decisions.[24]

EdReports takes an average of 100 hours to review a published resource.[25] With more than one thousand reviewed products in its database, representing over 100,000 hours of review effort, this highlights how unrealistic it is to expect school leaders to make informed decisions without impartial, high-quality guidance.

EdReports is not without detractors. Critics argue that its reviews carry too much influence in the states that have adopted them, that large publishers can influence its review process by bloating their resources to cover curriculum content at the expense of quality, and that alignment with the CCSS is weighted disproportionately against empirical evidence of student learning.[26]

RAND Corporation research found a relationship between teachers' use of vetted materials and their knowledge of the Common Core State Standards.[27] This supports the case that a quality, process-driven approach to HQIM selection in Australian schools could help teachers meet Standard 2 of the Professional Standards for Teachers: Know the content and how to teach it.

A model for an Australian HQIM eco-system

While HQIM strategies have proven beneficial overseas, simply disseminating knowledge about them is insufficient to achieve widespread, effective implementation in Australian educational settings. The complexity of Australian education systems calls for a systematic approach to knowledge transfer, ensuring that evidence-based practices are not only understood but also applied at scale. This requires a framework that supports continuous, coordinated effort across educational stakeholders to turn knowledge into actionable strategies.[28]

This raises critical questions: how can policies and strategies shape a sustainable, flourishing HQIM eco-system in Australia, and which existing structures and organisations could influence publishers and guide educators in creating and selecting instructional materials?

An effective Australian HQIM eco-system should prioritise the establishment of an Australian Standard for instructional resources, developed by existing Australian research organisations. Materials should then be assessed against its requirements through accessible, independent evaluations, improving schools' decision-making and supporting the policy efforts of authorities. Finally, the standard should also help set the agenda for educational research priorities in Australia.

Create an Australian Standard for HQIM

Overseas examples have demonstrated that the selection of instructional resources is improved by policies that advocate a criteria-based review process, covering characteristics such as evidence-based instruction, curriculum alignment and ongoing student assessment.

The creation of an Australian Standard for HQIM would provide a criteria framework for instructional material quality. To ensure the integrity of this framework, a body such as the Australian Education Research Organisation (AERO) could lead a national process for establishing the standard, similar to AITSL's role in leading consultation on the Professional Standards for Teachers.[29]

An Australian standard for reviewing HQIM should encompass the following six criteria: Evidence, Curriculum Alignment, Multi-tiered Systems of Support, Assessment for Impact, Professional Development and Support, and Digital Efficiency.

Evidence: An evidence base in HQIM refers to research findings demonstrating the effectiveness of the materials, the practical application of existing research in their design, or both. Promoting HQIM grounded in evidence can create the infrastructure and mechanisms needed to mobilise knowledge and best practice.[30] Interrogating HQIM for evidence requires a framework focused on evidence quality, relevance and methodological rigour.[31]

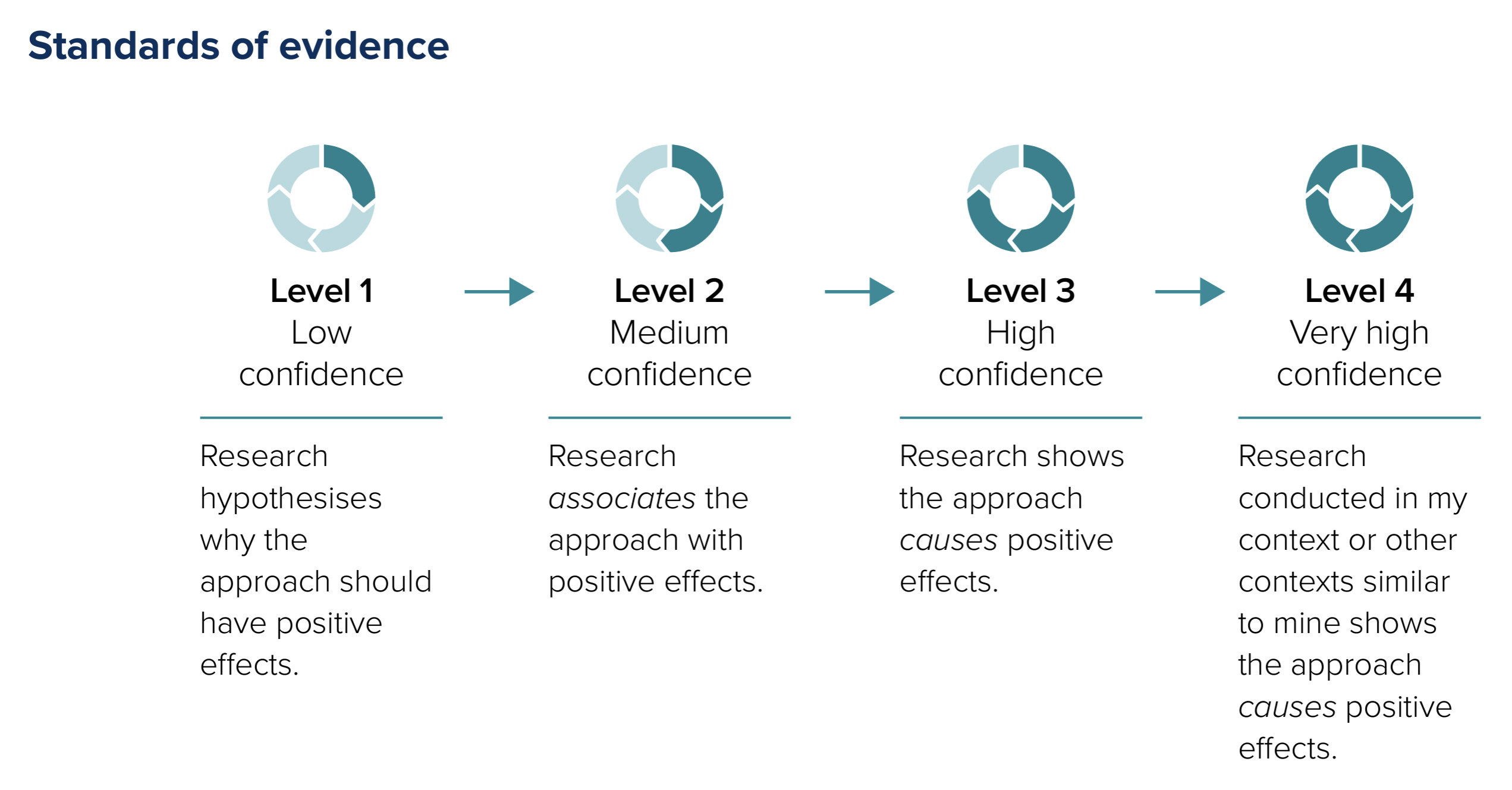

To assist in this process, AERO's Standards of Evidence offer an existing framework for assessing the strength of the evidence behind instructional materials, including both quality research and evidence-based design practices.

Image 4: AERO’s standards of evidence. Source: AERO, 2021.

Applying these standards of evidence could help determine whether instructional resources are founded on quality research that enhances student learning. As a practical example, many school leaders must select a literacy intervention program for early years students who have been identified as not making expected progress.

Intervention programs that have demonstrated statistically significant improvements in pre- and post-test assessments of key literacy skills, across a large sample of diverse Australian schools, would warrant a high level of confidence. Independent, transparent reviews of the most common literacy intervention programs against this evidence scale could empower school leaders to make more informed choices and reduce the extent to which resources are selected by chance or on the basis of ideological belief.

Furthermore, the aforementioned Reading Recovery program, if reviewed against these standards of evidence, may have been rated at a lower level of confidence, given the limited scale and scope of research into its efficacy in improving literacy outcomes.[32]

Without such a burden of proof, sunscreen is held to a higher threshold of evidence than the instructional resources used to teach literacy. Sun protection is important, but so is literacy instruction.

Curriculum Alignment: Notably, the UK requires approved phonics teaching programs to provide a comprehensive approach to instruction and assessment that meets or exceeds the expectations set by its National Curriculum.[33] Similarly, Australian schools are required to teach and assess to the Australian Curriculum or the various state-based equivalents.

Therefore, to qualify as HQIM, resources should be evaluated on how well they help teachers teach and assess students against these specific curriculum achievement standards. This is an important check that materials fulfil the regulatory requirement of alignment with local curriculum standards. The criterion could also include a provision for users who may wish to consider materials that set a standard exceeding local curriculum levels.

Teaching phonics is a narrower topic than teaching an entire curriculum domain, and the scope of this criterion would need careful consideration. For example, would the standard require publishers to cover every element of a broad domain, or to address aspects such as the cross-curriculum priorities?

While the Australian Curriculum and the CCSS differ in design, further justification for this criterion can be drawn from the EdReports review methodology, which uses a pivotal Gateway 1 to evaluate materials for their alignment with academic standards. If a product fails this initial gateway, the evaluation stops, making standards alignment a threshold characteristic. This approach would ensure that instructional resources support, and may even surpass, the teaching and assessment benchmarks outlined in our curriculum frameworks.

Multi-tiered systems of support: NAPLAN results from 2023 reveal that more than a quarter of Australian students did not reach proficiency across all five domains of Reading, Writing, Spelling, Grammar and Punctuation, and Numeracy. Specifically, more than a third of Year 3 students did not meet proficiency levels in Reading, Spelling and Numeracy,[34] which could represent around nine students in an average Australian classroom. Research indicates that some of these Year 3 students are as much as two years and five months behind their peers, a gap that may widen to five years by Year 9.[35]

Multi-tiered systems of support (MTSS) is a framework for delivering classroom learning that integrates academic supports through tiered levels of intervention, tailored to the diverse learning needs of a classroom, including those of students with the achievement gaps described above.

Within an MTSS framework, students receive learning support based on their specific needs, with systematic assessment guiding the provision of targeted interventions. Strategies range from small-group instruction to one-on-one support to ensure individual student success.[36]

HQIM may be appropriate for one or more tiers of instruction within an MTSS framework, and publishers may elect to target materials to particular tiers. Under this criterion, reviews should specify the tier(s) of instruction for which the HQIM is suitable.

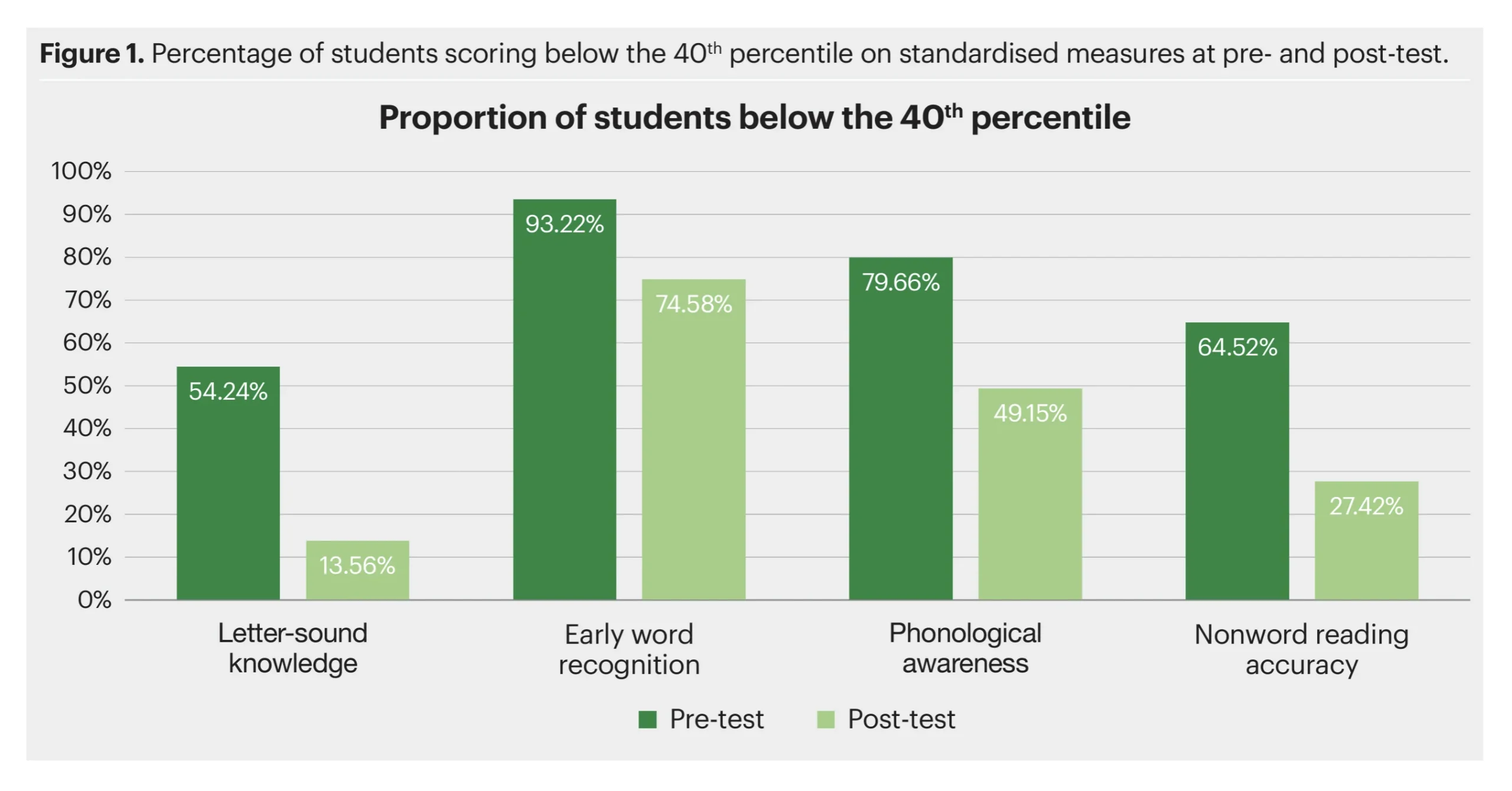

Research on implementing HQIM with small groups of students identified as below literacy benchmarks has demonstrated improved outcomes, with some students advancing up to two grade levels within a single academic year.[37] These studies suggest that HQIM share certain characteristics whether or not an MTSS framework is in use: they are systematic and explicit in their instruction, adaptable to different levels of learner ability, and provide opportunities for ongoing assessment.[38]

This approach mirrors a criterion set by the UK for phonics programs, which requires publishers to demonstrate how their instructional materials, including formative assessment, support the needs of students in the lowest 20% of achievement.[39]

Image 5: Proportion of students below the 40th percentile for key literacy measures pre and post small group intervention using a published HQIM product. Source: MultiLit, 2021.

Assessment for Impact: Assessment serves first to establish where students are in their learning at specific times, enabling teachers to tailor future instruction, set objectives or justify additional support. Assessment data is also a tool for judging the impact of school policies and strategies,[40] such as the choice of instructional materials. The pre- and post-test results for intensive literacy support (Image 5) illustrate this kind of evaluation.

Linking assessment with instructional materials aligns teaching with evaluation, fostering precise feedback loops between teachers and students. This alignment ensures that instruction is directly informed by ongoing formative assessment, and that assessment data guides both instructional strategies and the identification of students who need greater instructional intensity.[41]

This value of assessment is mirrored in the UK's validation criteria for phonics programs, which include frequent and ongoing assessment to map student progress, identify students above or below expected levels and provide appropriate support where required.[42]

Professional Development and Support: For an Australian HQIM Standard, it is crucial to assess the availability of professional learning and support for a program, including, but not limited to, what the publisher itself can offer. This includes the ability to scale that support across diverse educational settings, such as regional and remote schools, so that all educators have the support and knowledge needed for successful program implementation.

The emphasis of the Australian Professional Standards for Teachers on engagement in professional learning[43] reinforces the need to train and support teachers in their use of HQIM. Additionally, research from the RAND Corporation has shown that teachers who undergo professional development for HQIM specifically aligned with the US's CCSS report greater confidence in their ability to teach these standards.[44]

Finally, the criteria for the UK's phonics programs also require essential guidance and targeted training for teachers, delivered by highly skilled professionals across different modes.[45]

This criterion raises the question of what a HQIM quality framework should cover: the quality of the resource in isolation, or broader factors within the education eco-system? It may also disadvantage smaller providers or publishers who outsource professional learning or do not provide it at all. It also raises the question of how the criterion would be evaluated; for example, would the standard address the design, mode or efficacy of professional learning? Nevertheless, it underlines the value of giving educators clearer information on which to base decisions, and it reflects the importance of professional learning and support in school leaders' choices of instructional materials.

Digital Efficiency in Education: Connected and Secure: To improve educational outcomes and teacher efficiency, schools and educational authorities are embracing technology to refine their assessment strategies. According to van den Bosch et al.,[46] technology serves as a pivotal instrument for continuous data collection and analysis. It is therefore critical that the digital infrastructure embedded in HQIM, such as online monitoring and feedback tools or the collection and graphing of assessment outcomes, is carefully evaluated.

Furthermore, schools are increasingly required to conduct risk assessments of digital tools against privacy and security standards,[47] a task requiring skills that frequently fall outside their expertise. A set of criteria for evaluating HQIM within the Australian education context should address both of these issues, taking into account a product’s capacity to:

- facilitate the efficient collection and analysis of student assessment data. This would apply to any HQIM that has already met the preceding criterion on assessment of impact. Digital tools can enhance the value of assessment data through effective graphing and benchmarking, and this support can range from online data dashboards to simple (yet effective) spreadsheet templates. Such tools not only reduce the administrative load on teachers but also offer meaningful opportunities to analyse assessment data;

- enhance the distribution and use of instructional materials through digital platforms, as appropriate. For instance, an e-textbook for secondary students might include tutorial videos, whereas an early years literacy program might provide only the essential PowerPoint slides and teaching notes for lessons. Both approaches help the previously mentioned 90% of teachers who report having inadequate planning time;

- evaluate the privacy and security measures that products use to manage student, teacher and school information. This aspect could leverage existing frameworks, for example by requiring an assessment from the Safer Technologies for Schools (ST4S) program, which already assesses whether digital technologies are safe for schools.[48]

Additionally, including digital assessment data in instructional materials could significantly advance national objectives by aligning with key Australian educational priorities, such as the AERO Data Linkage initiative.[49]

This initiative aims to enhance research and national policy development by integrating various sets of student data that would typically remain separate.

For instance, with appropriate structures and uptake, this could allow data from a literacy intervention program to be evaluated against Year 1 Phonics Screening Check results. In a secondary education context, progress data from an accredited maths resource could be linked with Year 9 NAPLAN numeracy test scores. Such analysis would not only offer solid benchmarks and clear evidence of impact but might also lower the substantial hurdles that researchers and publishers face when conducting effective school-based research.

This final point underscores a critical gap in the HQIM eco-system: measuring true impact. Digital infrastructure that tracks student progress could make a modest contribution to our understanding of the real-world effects of instructional materials, while acknowledging that HQIM is just one variable in a complex equation.

Such infrastructure would also make it easier for school leaders and researchers to analyse the connections between assessments embedded in HQIM and broader summative measures such as academic grades and NAPLAN. Digital infrastructure is an integral part of classroom assessment, offering valuable insights that can inform educational strategies and outcomes.

Create an online HQIM Hub

A 2023 OECD report highlights that a significant barrier to engaging with research in educational practice is a lack of time or of convenient access to research. Establishing an online HQIM Hub would help reduce these barriers and contribute to a wider eco-system involving educators, publishers and researchers. This digital HQIM Hub would follow the example set by the successful US EdReports, featuring transparent evaluations of published materials against the Australian HQIM Standard. To be truly independent, materials would need to be vetted by a body free of any bias towards government or publishers.

The technical and service delivery of such a large digital infrastructure could be managed by an established body such as Education Services Australia (ESA). As a national, non-profit organisation owned by the Australian education ministers, ESA could draw on technical expertise it already has. That expertise comes from managing the existing Literacy and Mathematics Hubs and online assessments such as NAPLAN, the Phonics Screening Check and the Year 1 Number Check. ESA also administers the aforementioned Safer Technologies for Schools program.

The HQIM Hub would feature detailed reviews of each product, evaluating its performance against the specific criteria set by the Australian HQIM Standard. These evaluations would not only verify whether products meet essential requirements but would also offer insight into how they performed on each criterion. If the Australian HQIM Standard were enacted through the digital hub, states and other educational authorities could incorporate this national resource into their local policies and strategies. This approach mirrors the role of EdReports, which has been instrumental in helping US states adopt the CCSS effectively.

Image 6: Concept of an HQIM Hub review.

Building capacity within educational systems to identify well-designed and evidence-informed resources is a clear priority. Australian states or authorities that already maintain approved lists of HQIM could validate their endorsed or mandated products against independent reviews conducted under the standard, ensuring a high level of quality and adherence to educational goals.

For example, the National Catholic Education Commission’s (NCEC) initiative with Ochre Education to launch the Mastery in Mathematics (MiM) project showcases a targeted, system-level approach to improving teaching practice through curriculum-aligned resources.[50] Ochre is a national not-for-profit organisation dedicated to providing teachers with accessible, free instructional resources across multiple year levels and learning areas. Through an online platform, Ochre supports educators by offering teaching materials aligned and sequenced to the Australian, Victorian and NSW curricula.[51] Confidence in these resources, or in any others approved by states and educational authorities, could be validated against the Australian HQIM Standard, assisting administrators, schools and publishers alike.

To determine which instructional resources should be reviewed first, priorities should be aligned with national educational goals. An independent panel could set these priorities by weighing various factors. These might include areas of greatest need as indicated by NAPLAN results, resources for core learning areas such as English or Mathematics, or areas of critical importance such as early years literacy instruction. This approach ensures that the review process is both strategic and responsive to the most pressing educational needs.

Incentivise Researchers

Barriers to researchers having a tangible impact on policy include a perceived lack of relevance and of meaningful collaboration with the sectors concerned.[52] These barriers can be compounded by researchers favouring publication in academic journals over accessible grey literature, by limited time to collaborate with industry, and by a focus on individual studies rather than on synthesising and mobilising existing knowledge. To overcome these issues, researchers should be incentivised to understand and address the questions, problems and contexts that concern education policymakers, school leaders and teachers.[53]

To address these challenges within an Australian HQIM eco-system, it is crucial to increase relevance by incentivising research that leads to scalable and accessible instructional resources for teaching key areas of the Australian Curriculum, particularly English and Mathematics. This could be achieved through the design and targeted allocation of research grants, including those offered by the Australian Research Council.[54]

As noted by Hunter, Haywood and Parkinson,[55] much of the existing research into the impact of curriculum materials has been conducted internationally. A review of ARC grants over the past decade also reveals that only a relatively small number of education-related grants have specifically targeted teaching the English curriculum or developing instructional resources in this field.[56]

ARC Education grants often focus on specific, sometimes narrow areas, such as the development of multi-modal, technology-enabled learning spaces or how Australian STEM students engage with Asia. While these initiatives are of undoubted value, the needs of the 30% of Australian students who are not reading at the expected year-level standard suggest that a more targeted approach could be beneficial. Specifically, there is a compelling case for structuring and targeting grants to:

- focus on the six criteria of the proposed Australian Standard for HQIM, including its emphasis on key curriculum standards;

- explore scalable, broad initiatives designed to benefit numerous schools and students. For instance, research into the most effective ways to provide multi-tiered literacy support to early years learners could affect a larger number of students than studies of how STEM students engage with Asia. The point is not which topic is more worthy; it is a question of need, scale and return on investment;

- measure funded research not only by its publication in academic journals but also by its accessible dissemination to, and partnership building with, relevant educational bodies.

To build stronger research partnerships, a co-production model, in which educators, policymakers and other stakeholders work with researchers to collaborate on targeted solutions, may provide greater value.[57] Alternatively, to build a stronger research focus on HQIM within industry, programs similar to the Norwegian Public Sector PhD program could be opened to publishers, departments or industry bodies. That program promotes collaboration between academia and industry by enabling PhD candidates to remain working within their field.[58] Similar schemes exist in Australia, such as the National Industry PhD Program, but this initiative has yet to consider investing in solutions for K-12 education.[59]

This targeted investment in research could then flow to the broader HQIM eco-system, including knowledge intermediaries such as publishers, research centres, government departments and professional organisations. By synthesising evidence and facilitating the exchange of knowledge, these bodies bridge the gap between research and practice through dissemination, networking, professional learning and advocacy.[60] The Education Endowment Foundation (EEF) in the UK serves as an exemplary model of a knowledge intermediary. In Australia, AERO, education departments and jurisdictions could similarly benefit from mobilising the knowledge generated by prioritised research in HQIM.

Conclusion

This exploration has offered valuable insight not only into the importance of standards in education but also into how they can be leveraged to shape markets, such as the market for HQIM. However, international research and models have demonstrated that curriculum standards are only part of the picture in ensuring student achievement. Standards can influence the decision-making of schools and the output of publishers when frameworks are in place to ensure their implementation.

While access to HQIM is essential, it is not sufficient on its own. Successful outcomes will always depend on combining these materials with the other essential characteristics of quality teaching and learning. Australia is fortunate to have an established Australian Curriculum and Australian Professional Standards for Teachers. These constructs are pivotal to our educational landscape and call for ongoing discussion and refinement rather than complete overhaul or reinvention. This places Australia in a strong position to face future challenges in the education sector.

The next step in enhancing our education systems is for our leading educational organisations to focus policy and strategy on developing an HQIM eco-system. This eco-system would rest on three pillars:

- the establishment of an Australian Standard for HQIM;
- the creation of an HQIM Hub for independent and transparent review of instructional resources, following the successful example of EdReports in the US;
- the encouragement of research contributions to the eco-system.

With thorough consultation and collaboration, the design of an Australian HQIM eco-system may evolve from that presented here. However, by embracing this vision, Australia can learn from successful international examples while building on the strengths of our existing educational assets.

Endnotes

[1] Productivity Commission. (2023). Advancing Prosperity—5-year Productivity Inquiry report. https://www.pc.gov.au/inquiries/completed/productivity/report

[2] Steiner, D., Magee, J., & Jensen, B. (2018). What we teach matters: How quality curriculum improves student outcomes. Learning First. Retrieved from https://learningfirst.com/wp-content/uploads/2020/07/1.-What-we-teach-matters.pdf

[3] Buckingham, J. (2019, February 7). Reading Recovery: A Failed Investment. The Centre for Independent Studies. https://www.cis.org.au/publication/reading-recovery-a-failed-investment/

[4] Hunter, J., Haywood, A., & Parkinson, N. (2022). Ending the lesson lottery: Download the survey results. Retrieved March 29, 2024, from https://grattan.edu.au/report/ending-the-lesson-lottery-how-to-improve-curriculum-planning-in-schools/

[5] Hunter, J., Sonnemann, J., & Joiner, R. (2022). Making time for great teaching: How better government policy can help. Grattan Institute. https://grattan.edu.au/report/making-time-for-great-teaching-how-better-government-policy-can-help/

[6] Opfer, V. D., Kaufman, J. H., & Thompson, L. E. (2016). Implementation of K–12 State Standards for Mathematics and English Language Arts and Literacy: Findings from the American Teacher Panel. RAND Corporation. https://www.rand.org/pubs/research_reports/RR1529-1.html

[7] Jha, T. (2024). Implementing the science of learning: Teacher experiences (Research Report 47). Centre for Independent Studies.

[8] Hunter, J., Haywood, A., & Parkinson, N. (2022). Ending the lesson lottery: Download the survey results. Retrieved March 29, 2024, from https://grattan.edu.au/report/ending-the-lesson-lottery-how-to-improve-curriculum-planning-in-schools/

[9] Standards Australia. (n.d.). What we do. Retrieved February 29, 2024, from https://www.standards.org.au/about/what-we-do

[10] Therapeutic Goods Administration (TGA). (2023, June 1). Australian regulatory guidelines for sunscreens (ARGS). Therapeutic Goods Administration (TGA). https://www.tga.gov.au/resources/resource/guidance/australian-regulatory-guidelines-sunscreens-args

[11] Australian Institute for Teaching and School Leadership (AITSL). (n.d.-a). Teacher Standards. Retrieved March 1, 2024, from https://www.aitsl.edu.au/standards

[12] Australian Curriculum. (n.d.). Mathematics – Year 2. Retrieved March 1, 2024, from https://v9.australiancurriculum.edu.au/f-10-curriculum/learning-areas/mathematics/year-2

[13] Department for Education. (n.d.). The national curriculum. Retrieved March 3, 2024, from https://www.gov.uk/national-curriculum

[14] Department for Education. (2023). Guidance: Validation of systematic synthetic phonics programmes: supporting documentation. Retrieved March 3, 2024, from https://www.gov.uk/government/publications/phonics-teaching-materials-core-criteria-and-self-assessment/validation-of-systematic-synthetic-phonics-programmes-supporting-documentation#note1

[15] Akerlof, G. A. (1970). The Market for “Lemons”: Quality Uncertainty and the Market Mechanism. The Quarterly Journal of Economics, 84(3), 488–500. https://doi.org/10.2307/1879431

[16] Department for Education. (2023). Guidance: Validation of systematic synthetic phonics programmes: supporting documentation. Retrieved March 3, 2024, from https://www.gov.uk/government/publications/phonics-teaching-materials-core-criteria-and-self-assessment/validation-of-systematic-synthetic-phonics-programmes-supporting-documentation#note1

[17] Department of Education, Western Australia. (n.d.). Phonics initiative. Retrieved from https://www.education.wa.edu.au/phonics-initiative

[18] Kaufman, J. H., Tosh, K., & Mattox, T. (2020). Are U.S. teachers using high-quality instructional materials? Santa Monica, CA: RAND Corporation. Retrieved from https://www.rand.org/pubs/research_reports/RR2575z11-1.html

[19] Kaufman, J. H., Tosh, K., & Mattox, T. (2020). Are U.S. teachers using high-quality instructional materials? Santa Monica, CA: RAND Corporation. Retrieved from https://www.rand.org/pubs/research_reports/RR2575z11-1.html

[20] EdReports. (n.d.-a). About Us. Retrieved February 29, 2024, from https://edreports.org/about

[21] Massachusetts Department of Elementary and Secondary Education (DESE). (2024). Center for Instructional Support. Retrieved March 3, 2024, from https://www.doe.mass.edu/instruction/curate/default.html

[22] Mississippi Instructional Materials Matter. (2024). ELA Rubrics. Retrieved March 3, 2024, from https://msinstructionalmaterials.org/adopted-materials/ela/ela-rubrics/

[23] EdReports. (2022). 2022 Annual Report. Retrieved February 29, 2024, from https://cdn.edreports.org/media/2023/06/EdReports_2022_Annual_Report_06272023.pdf?_gl=1*4wualp*_gcl_au*NTM0MjkwMTQ0LjE3MDY0MTgzNDU

[24] EdReports. (n.d.-b). Impact. Retrieved February 29, 2024, from https://www.edreports.org/impact

[25] Wexler, N. (2024). Literacy experts say some EdReports ratings are misleading. Forbes. https://www.forbes.com/sites/nataliewexler/2024/02/22/literacy-experts-say-some-edreports-ratings-are-misleading/?sh=61c1ee4a4128

[26] Kaufman, J. H., Tosh, K., & Mattox, T. (2020). Are U.S. teachers using high-quality instructional materials? Santa Monica, CA: RAND Corporation. Retrieved from https://www.rand.org/pubs/research_reports/RR2575z11-1.html

[27] Best, A., & Holmes, B. (2010). Systems thinking, knowledge and action: towards better models and methods. Evidence & Policy, 6(2), 145–159. https://doi.org/10.1332/174426410X502284

[28] Australian Institute for Teaching and School Leadership (AITSL). (n.d.-b). How we work. Retrieved April 1, 2024, from https://www.aitsl.edu.au/about-aitsl/how-we-work

[29] Best, A., & Holmes, B. (2010). Systems thinking, knowledge and action: towards better models and methods. Evidence & Policy, 6(2), 145–159. https://doi.org/10.1332/174426410X502284

[30] Rickinson, M., Cirkony, C., Walsh, L., Gleeson, J., Cutler, B., & Salisbury, M. (2022). A framework for understanding the quality of evidence use in education. Educational Research (Windsor), 64(2), 133–158. https://doi.org/10.1080/00131881.2022.2054452

[31] Buckingham, J. (2019, February 7). Reading Recovery: A Failed Investment. The Centre for Independent Studies. https://www.cis.org.au/publication/reading-recovery-a-failed-investment/

[32] Department for Education. (2021). Self Assessment Form. https://view.officeapps.live.com/op/view.aspx?src=https%3A%2F%2Fassets.publishing.service.gov.uk%2Fmedia%2F6065d0848fa8f515aa902c44%2FSelf_Assessment_Form_SSP.odt&wdOrigin=BROWSELINK

[33] Australian Curriculum, Assessment and Reporting Authority (ACARA). (2023). NAPLAN National Results. https://www.acara.edu.au/reporting/national-report-on-schooling-in-australia/naplan-national-results

[34] Hunter, J. (2023). Our schools abound in under-achievement. Retrieved February 29, 2024, from https://grattan.edu.au/news/our-schools-abound-in-under-achievement/

[35] de Bruin, K., & Stocker, K. (2021). Multi-Tiered Systems of Support: Comparing Implementation in Primary and Secondary Schools. Learning Difficulties Australia Bulletin, 53(3), 19–23. https://search.informit.org/doi/10.3316/aeipt.230226

[36] MultiLit. (2021). MiniLit SAGE – Extended research summary. Retrieved February 29, 2024, from https://multilit-ecomm-media.s3.ap-southeast-2.amazonaws.com/wp-content/uploads/2021/11/02103850/MiniLit-Sage_Extended-Research-Summary.pdf

[37] MultiLit. (2021). MiniLit SAGE – Extended research summary. Retrieved February 29, 2024, from https://multilit-ecomm-media.s3.ap-southeast-2.amazonaws.com/wp-content/uploads/2021/11/02103850/MiniLit-Sage_Extended-Research-Summary.pdf

[38] Department for Education. (2021). Self Assessment Form. https://view.officeapps.live.com/op/view.aspx?src=https%3A%2F%2Fassets.publishing.service.gov.uk%2Fmedia%2F6065d0848fa8f515aa902c44%2FSelf_Assessment_Form_SSP.odt&wdOrigin=BROWSELINK

[39] Masters, G. (2014). Assessment: Getting to the essence. Australian Council for Educational Research. Retrieved March 1, 2024, from https://research.acer.edu.au/cgi/viewcontent.cgi?article=1018&context=ar_misc

[40] Fuchs, L. S., Fuchs, D., Hamlett, C. L., & Stecker, P. M. (2021). Bringing Data-Based Individualization to Scale: A Call for the Next-Generation Technology of Teacher Supports. Journal of Learning Disabilities, 54(5), 319–333. https://doi.org/10.1177/0022219420950654

[41] Department for Education. (2023). Guidance: Validation of systematic synthetic phonics programmes: supporting documentation. Retrieved March 3, 2024, from https://www.gov.uk/government/publications/phonics-teaching-materials-core-criteria-and-self-assessment/validation-of-systematic-synthetic-phonics-programmes-supporting-documentation#note1

[42] Australian Institute for Teaching and School Leadership (AITSL). (n.d.-a). Teacher Standards. Retrieved March 1, 2024, from https://www.aitsl.edu.au/standards

[43] Opfer, V. D., Kaufman, J. H., & Thompson, L. E. (2016). Implementation of K–12 State Standards for Mathematics and English Language Arts and Literacy: Findings from the American Teacher Panel. RAND Corporation. https://www.rand.org/pubs/research_reports/RR1529-1.html

[44] Department for Education. (2021). Self Assessment Form. https://view.officeapps.live.com/op/view.aspx?src=https%3A%2F%2Fassets.publishing.service.gov.uk%2Fmedia%2F6065d0848fa8f515aa902c44%2FSelf_Assessment_Form_SSP.odt&wdOrigin=BROWSELINK

[45] van den Bosch, R. M., Espin, C. A., Pat-El, R. J., & Saab, N. (2019). Improving Teachers’ Comprehension of Curriculum-Based Measurement Progress-Monitoring Graphs. Journal of Learning Disabilities, 52(5), 413–427. https://doi.org/10.1177/0022219419856013

[46] eSafety Commissioner. (2020). Prepare 3 – New technologies risk-assessment tool. Retrieved March 5, 2024, from https://www.esafety.gov.au/sites/default/files/2020-03/Prepare%203%20-%20New%20technologies%20risk-assessment%20tool.pdf

[47] Education Services Australia. (n.d.). A National Privacy and Security Initiative for Digital Products in K-12 Education. Retrieved from https://st4s.edu.au/

[48] Australian Education Research Organisation (AERO). (2023). AERO’s submission to the review to inform a better and fairer education system. Retrieved March 5, 2024, from https://www.edresearch.edu.au/sites/default/files/2023-08/aero-submission-review-to-inform-better-and-fairer-education-system.pdf

[49] National Catholic Education Commission (NCEC). (2023). Mastery in Mathematics Project. https://ncec.catholic.edu.au/mastery-in-mathematics-project/

[50] Ochre Education. (2024). Adaptable resources created for teachers, by teachers. https://ochre.org.au/

[51] Boswell, C., Smith, K., & Davies, C. (2022). Promoting Ethical and Effective Policy Engagement in the Higher Education Sector. The Royal Society of Edinburgh. https://rse.org.uk/

[52] OECD. (2023). Who really cares about using education research in policy and practice?: Developing a culture of research engagement. OECD Publishing. https://doi.org/10.1787/bc641427-en

[53] Australian Research Council. (2024). Grants Search – Grants Data Portal. Retrieved March 5, 2024, from https://dataportal.arc.gov.au/NCGP/Web/Grant/Grants#/20/1/-money//(year-from%3D%222014%22)%20AND(two-digit-for%3D%2213%22)

[54] Hunter, J., Haywood, A., & Parkinson, N. (2022). Ending the lesson lottery: Download the survey results. Retrieved March 29, 2024, from https://grattan.edu.au/report/ending-the-lesson-lottery-how-to-improve-curriculum-planning-in-schools/

[55] Australian Research Council. (2024). Grants Search – Grants Data Portal. Retrieved March 5, 2024, from https://dataportal.arc.gov.au/NCGP/Web/Grant/Grants#/20/1/-money//(year-from%3D%222014%22)%20AND(two-digit-for%3D%2213%22)

[56] Boaz, A. (2021). Lost in co-production: To enable true collaboration we need to nurture different academic identities. LSE Impact of Social Sciences. https://blogs.lse.ac.uk/impactofsocialsciences/2021/06/25/lost-in-co-production-to-enable-true-collaboration-we-need-to-nurture-different-academic-identities/

[57] OECD. (2023). Who really cares about using education research in policy and practice?: Developing a culture of research engagement. OECD Publishing. https://doi.org/10.1787/bc641427-en

[58] Department of Education, Skills and Employment. (2024). National Industry PhD Program. Australian Government. https://www.education.gov.au/resources/national-industry-phd-program

[59] Torres, J. M., & Steponavicius, M. (2022). More than just a go-between: The role of intermediaries in knowledge mobilisation. OECD Education Working Papers, No. 285. OECD Publishing. https://doi.org/10.1787/aa29cfd3-en