Executive Summary

Although it was initially envisaged as a temporary program in response to COVID-19 pandemic school closures, small-group tutoring has attracted a great deal of attention from policymakers as a potential solution to Australia’s student achievement problem.

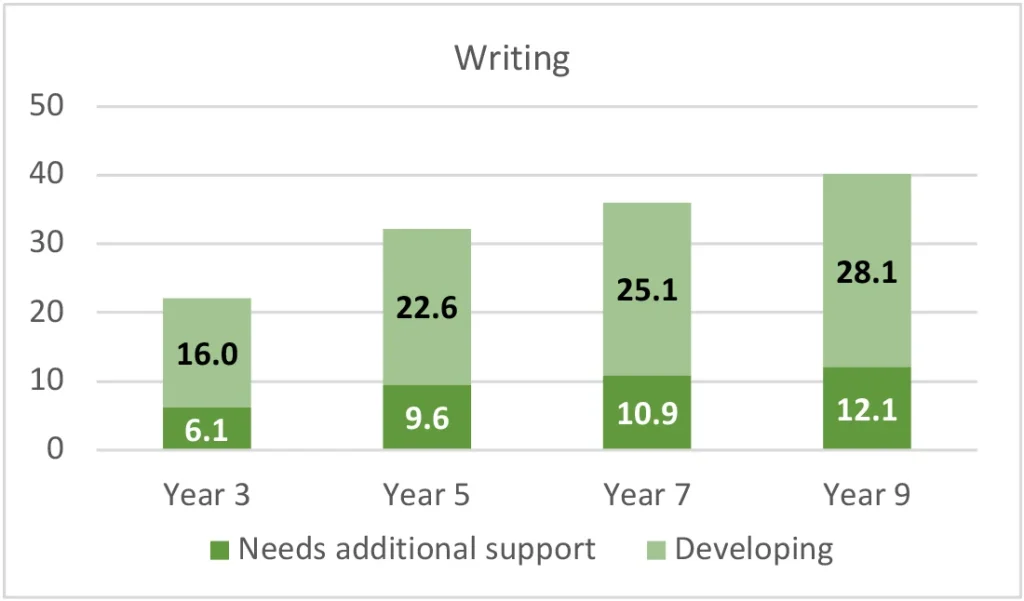

Concern about student achievement is warranted: the latest NAPLAN data, from 2023, show roughly a third of students across all year levels and domains lack proficiency (that is, they are in the Needs Additional Support or Developing categories). Analysis by the Australian Education Research Organisation (AERO) and the Productivity Commission also shows that once students fall behind, they are unlikely to catch up.

While the appeal of small-group tutoring as a permanent feature of Australian education is understandable, policymakers should consider the evidence carefully to guide decision-making.

COVID-response small-group tutoring was funded by state governments in New South Wales and Victoria. While schools were given some advice about how to implement the program in an evidence-based way, they were ultimately free to implement it however suited them. Consequently, evaluations in both states found that students in the program made no more progress than their peers.

Tutoring works best when it is implemented as part of a systemic approach, such as a Multi-Tiered System of Supports (MTSS). As described by AERO, MTSS involves:

- Using proven teaching methods for all students.

- Regular testing of all students to identify gaps in learning.

- Delivering frequent small group or 1:1 interventions with a focus on these learning gaps.

- Continuous data-based tracking of student progress to ensure interventions deliver real gains.

However, the evidence suggests that, despite some exceptions, schools generally lack the capacity to implement MTSS with fidelity.

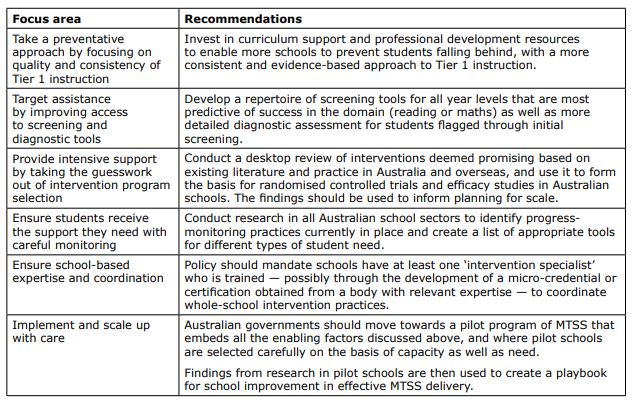

The report advocates the following:

Introduction

As school closures wore on during the COVID-19 pandemic lockdowns, policymakers turned their minds to rectifying the learning loss that was seen as inevitable after prolonged disruption to students’ learning. The solution for helping students who had fallen behind to catch up seemed obvious: small-group tutoring.

Seeking to solve the ‘COVID problem’, the Grattan Institute published a report in June 2020 advocating a national, $1 billion, six-month tutoring blitz to help students catch up. It noted that many disadvantaged students, whose academic achievement already lags behind that of their more advantaged peers, were the most educationally at risk. Specifically, the report proposed hiring 100,000 tutors to deliver three to five tutoring sessions a week to 1 million students.[1]

Governments were quick to respond. By October 2020, the Victorian government had announced an initial tranche of $250 million to fund the Tutor Learning Initiative (TLI),[2] and the New South Wales government followed suit in November 2020 with $337 million to fund the COVID Intensive Learning Support Program (ILSP).[3]

The current conversation around tutoring has moved beyond the need for a COVID-related catch-up. Instead, tutoring is increasingly posited as a broader solution to learning gaps. Interviewed by the ABC about the release of the 2023 NAPLAN data in August that year, Federal Education Minister Jason Clare said “all of the evidence shows that [small-group tutoring is] a key part of helping children who fall behind here to catch up. Those small groups with one teacher and a couple of children and the work that they do over the course of a couple of weeks can have a massive impact on a child’s education.”[4]

In addition, the Better and Fairer review, intended to underpin the next agreement on school funding between the federal government and the states and territories, recommended implementing small-group catch-up tutoring as part of an overarching Multi-Tiered System of Supports (MTSS) framework. While the case for MTSS is compelling, this paper will show that the current lack of such a systematic approach presents problems for the efficacy of tutoring interventions.

In other words, the fact that small-group tutoring can and does work in some settings does not mean that small-group tutoring at scale, potentially serving hundreds of thousands of children Australia-wide, will necessarily deliver positive impacts for students and value for money. Indeed, case studies from Australia and overseas give good reason to be sceptical that tutoring alone will address concerns about student achievement and learning. If the lessons from these policy efforts are not learned, small-group tutoring will become yet another large spending commitment yielding little benefit.

This paper will first survey data on Australian students’ academic achievement, before reviewing key evidence in support of tutoring and of MTSS frameworks for its delivery. It will then present the limited information about student impact from NSW and Victoria’s COVID-response tutoring and analyse the current feasibility of applying MTSS in the Australian context. Finally, the paper outlines implications for policymakers who seek to embed tutoring within an MTSS framework in Australian schools.

1. The student achievement problem

Box 1: Evidence of COVID-related impacts is inconsistent and mixed

Given the limited data sources, it is difficult to ascertain the impact of COVID-19 disruptions on student learning in NSW and Victoria.

November 2020 data from NSW government schools’ check-in assessments — completed by 86% of Year 3 students, 85% of Year 5 students and 62% of Year 9 students — showed students had fallen approximately 3-4 months behind in Year 3 Reading, and 2-3 months behind in Year 5 Reading and Numeracy and Year 9 Numeracy when compared to historical NAPLAN data.[5]

Conversely, education researchers from the University of Newcastle compared Progressive Achievement Test (PAT) data from 2019 and 2020 for over 4800 Year 3 and 4 students at 113 NSW government schools and found that, on average, there were no significant differences. However, the researchers observed that in 2020, Year 3 students in more disadvantaged schools achieved two months’ less growth in Mathematics, whereas their more advantaged peers achieved two months’ additional growth, highlighting a widening achievement gap between different groups of students.[6]

A review of evidence on student achievement during the COVID pandemic commissioned by AERO, which drew mostly on NSW-related studies, found the evidence of achievement decline or widening learning gaps due to remote learning to be inconclusive.[7] Furthermore, NAPLAN scores between 2021 and 2022 remained steady in both states. Unlike in other countries, where the impact of COVID disruptions on learning was starkly evident in achievement data, no clear negative impact has emerged from Australian data.

A third of Australian students aren’t proficient

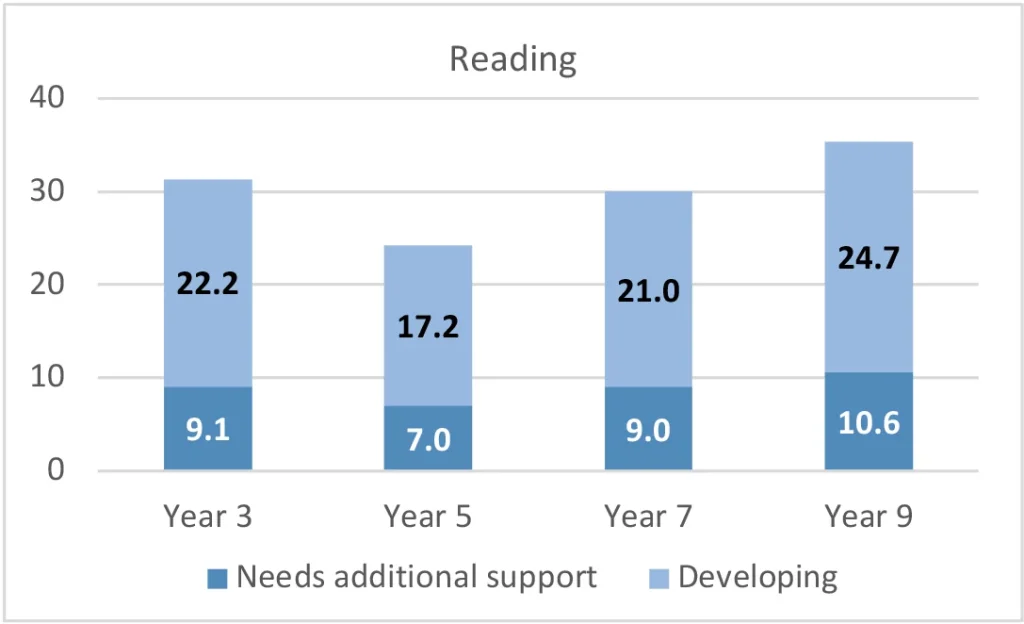

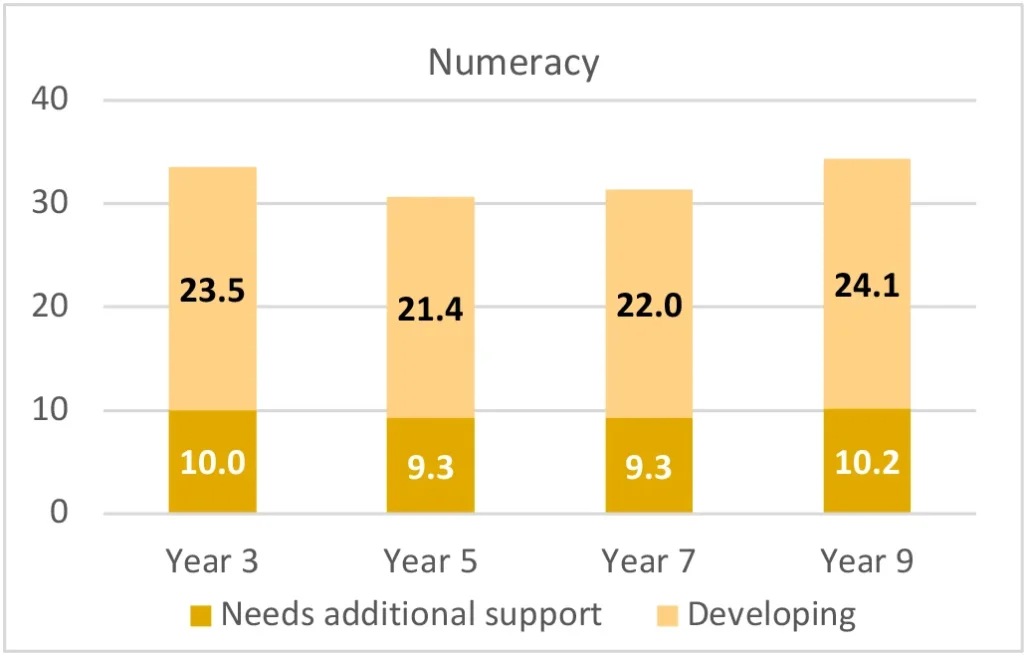

Regardless of any COVID-related impacts (see Box 1), NAPLAN 2023 shows a significant minority of students (around a third) are not meeting the proficiency standard (defined by ACARA as ‘Strong’ or above) at all year levels and across the main NAPLAN domains, as shown in Figures 1 to 3.

Figures 1-3: Share of students not meeting proficiency in Reading, Numeracy and Writing, all year levels, 2023[8]

Students who fall behind stay behind as they progress through schooling

In addition, the evidence suggests that once students fall behind, the current system is not effective at helping them catch up. Productivity Commission analysis of NAPLAN data showed that, of students who were below the national minimum standard in Year 3 numeracy, 34% remained below the standard in Year 5 and 48% achieved it. Similarly, of those below the standard in Year 7, 28% remained below it in Year 9 and 58% achieved it.[9] The Commission did not complete this analysis for Reading or other NAPLAN domains. Similar AERO analysis of students who were at or below the minimum standard in Year 3 reading and numeracy shows roughly a third (37.4% for reading, 33.6% for numeracy) remained at this level all the way through to Year 9.[10]

Teachers’ impressions of students’ core literacy and numeracy skills on entry to secondary school support the story told by NAPLAN data: overall, teachers judge about a third of students to lack foundational skills. The Australian Council for Educational Research (ACER)/AERO survey of schools (based on a non-random sample) reported that teachers perceive an average of 32% of their incoming Year 7 cohort to struggle with literacy, defined as “[students] who lack the foundational literacy skills required to engage with a secondary curriculum.” Similarly, the share of students struggling with numeracy, defined as “[students] who lack the foundational numeracy skills that are required to engage with a secondary curriculum”, was 33%.[11]

The current reality is that, rather than closing, gaps become entrenched and harder to bridge over time. There is an impetus to find more effective ways to help schools identify and support students who require additional assistance to succeed academically. It is easy to see why embedding small-group tutoring and/or intervention more broadly within Australian education policy would appeal to policymakers. However, this would be the wrong path to improving results.

Small-group tutoring is not necessarily a fit-for-purpose solution to this problem

Given the relative consistency of these data points, the high proportion of students (roughly a third) in need of specialised assistance exceeds what a supplementary program such as small-group tutoring can reasonably be expected to fix.

Not only that, students in the Needs Additional Support and Developing categories of NAPLAN testing are not evenly distributed across the nation, both in terms of the student groups most affected and in geographic area. For instance, almost 50% of Year 9s in outer regional Australia lack proficiency in Reading and Numeracy, and closer to 55% for Writing.[12]

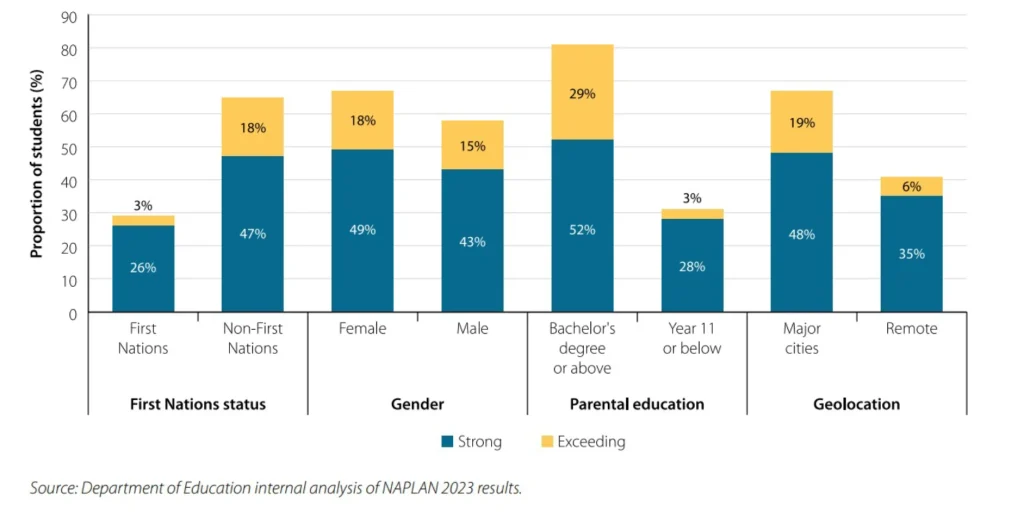

The Better and Fairer Review broke down achievement for Year 9 Reading even further, showing that whether students meet proficiency (reaching Strong or Exceeding) is influenced by several demographic characteristics.

Figure 4: Proportion of students meeting proficiency for Year 9 Reading by First Nations status, gender, parental education and geolocation, 2023

The ACER/AERO survey reveals a similar divide based on geolocation, with metropolitan schools reporting 28% of their Year 7 cohort struggling with literacy and 28% with numeracy, whereas rural schools reported 46% and 49% respectively.[13]

This means that additional educational resources (i.e. teachers or learning support staff) would need to be found and directed to areas of high need, at a time when the difficulty of recruiting teachers for hard-to-staff schools (typically rural and regional schools, and government schools in low socio-educational status communities) is already the focus of considerable policy attention.[14]

Though there is a great deal of policy enthusiasm for small-group tutoring, policymakers should proceed with caution. High levels of student need (about a third of students overall, and about half in certain high-need contexts) mean that effective implementation of small-group tutoring at scale is difficult at best and impractical at worst.
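To make the scale of that caseload concrete, the short Python sketch below estimates the weekly tutor time a single school would need if every student below proficiency were tutored. The school size (600 students), the group size of five and the three 40-minute sessions per week are hypothetical inputs, not figures from any program, and `weekly_tutoring_load` is an illustrative name. The sketch assumes one tutor per group and ignores timetabling clashes, absences and tutor preparation time.

```python
import math
from dataclasses import dataclass


@dataclass
class WeeklyLoad:
    groups: int         # tutoring groups needed to cover every student
    sessions: int       # tutoring sessions per week across all groups
    tutor_hours: float  # tutor contact hours per week (one tutor per group assumed)


def weekly_tutoring_load(students: int, group_size: int,
                         sessions_per_week: int, minutes_per_session: int) -> WeeklyLoad:
    """Estimate weekly tutor time to tutor every student who needs support."""
    groups = math.ceil(students / group_size)  # a partial group still needs a tutor
    sessions = groups * sessions_per_week
    tutor_hours = sessions * minutes_per_session / 60
    return WeeklyLoad(groups, sessions, tutor_hours)


# Hypothetical school of 600 students; groups of five, three 40-minute sessions a week.
enrolment = 600
for share in (1 / 3, 1 / 2):  # about a third overall; about half in some high-need contexts
    students = round(enrolment * share)
    load = weekly_tutoring_load(students, group_size=5,
                                sessions_per_week=3, minutes_per_session=40)
    print(f"{students} students: {load.groups} groups, "
          f"{load.sessions} sessions, {load.tutor_hours:.0f} tutor-hours per week")
```

Under these assumptions, the hypothetical school would need 80 tutor-hours a week when a third of students need support, and 120 when half do. Tutoring the same 200 students in pairs rather than groups of five would raise the requirement to 200 tutor-hours a week.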

2. Understanding small-group tutoring

The term ‘tutoring’ describes any educational activity that is intended to build student achievement in one or more areas, usually in response to assessment data or other evidence that suggests the student is not achieving at the desired level. It is done in addition to — not as a replacement for — regular instruction in the subject(s).[15]

Under this broad definition, the only distinguishing features of tutoring are that the student is considered ‘behind’ and receives assistance in addition to regular class work to remediate the gaps. This is similar to what occurred in NSW and Victoria during their COVID-era catch-up tutoring programs, discussed in depth later in this paper.

The evidence base for small-group tutoring is large and varied, showing a range of impacts on student outcomes, so considerable nuance is required in translating the findings of research to practice and policy.

Even before COVID, Evidence for Learning, the Australian partner of the UK’s Education Endowment Foundation, described small-group tuition as delivering up to four additional months’ progress on average over the course of a year, for a low to moderate cost.[16] Major studies, such as meta-analyses and systematic reviews of well-designed studies (using experimental or quasi-experimental methods), show that tutoring can and does work (see Box 2).

Box 2: Significant large-scale studies of tutoring programs

Education Endowment Foundation

The most frequently cited research on small-group tutoring comes from the Education Endowment Foundation’s meta-analysis. Its key findings are summarised below:

- The impact in primary schools (+4 months) is greater than in secondary schools (+2 months), though fewer studies have been conducted in secondary schools;

- The body of evidence skews towards reading, where the impact is greater (+4 months) than for Maths (+3 months);

- The evidence supports frequent sessions, three times a week for up to an hour, over about 10 weeks (so, about 30 hours in total); and

- Students who have low achievement are the largest beneficiaries.

Nickow et al. (2020)[17]

This systematic review and meta-analysis of randomised evaluations of K-12 tutoring programs found an overall effect size estimate of 0.37 standard deviations (a moderate effect size), with some models being more successful than others:

- Teachers and paraprofessionals were more effective as tutors than non-professionals or parents.

- The term ‘paraprofessional’ includes student teachers and education support staff, with the study noting paraprofessionals can be similarly effective yet less expensive.

- Reading-focused tutoring had larger effects in the earlier grades (preschool to Year 1) but Maths-focused tutoring had larger effects in later grades (Year 2 to Year 5).

- Programs conducted during school hours had larger impacts than those conducted after school.

- Tutoring was most effective when delivered three times a week, and programs that lasted more than 20 weeks (half the school year in Australia) were less effective.

Fryer (2016)[18]

This analysis of randomised field experiments in education examines those related to tutoring, dividing them into high-dosage and low-dosage tutoring. For the purposes of the study, high-dosage tutoring is defined as tutoring in groups of 6 or fewer, for more than 3 days per week or at a rate equating to at least 50 hours over a 36-week period. This is the form most similar to what has been used or proposed in the Australian context.

The meta-coefficient on high-dosage tutoring is 0.309σ (standard error 0.106) for maths achievement and 0.229σ (standard error 0.033) for reading achievement. In standardised terms, these effects represent a relatively large increase in average learning outcomes. The paper notes that more than half (54.3%) of the coefficients indicated statistically significant positive treatment effects.
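Because Fryer’s definition combines several conditions, it can be easier to read as a rule. The Python function below encodes one plausible reading of it: a group of six or fewer, and either more than three days per week or a weekly rate that would amount to at least 50 hours over 36 weeks. The function name and the way the rate is scaled to 36 weeks are assumptions for illustration, not code or terminology from the study.

```python
def is_high_dosage(group_size: int, days_per_week: int, hours_per_week: float) -> bool:
    """Return True if a schedule meets one reading of Fryer's (2016) high-dosage definition.

    Assumed reading: groups of six or fewer AND either more than three
    days per week OR a rate equal to at least 50 hours over 36 weeks.
    """
    if group_size > 6:
        return False
    # Scale the weekly rate to a 36-week period (assumption about how the rate is computed).
    hours_over_36_weeks = hours_per_week * 36
    return days_per_week > 3 or hours_over_36_weeks >= 50


# Hypothetical schedules, not drawn from any evaluated program.
print(is_high_dosage(group_size=4, days_per_week=3, hours_per_week=3.0))  # three 1-hour sessions
print(is_high_dosage(group_size=4, days_per_week=3, hours_per_week=1.0))  # three 20-minute sessions
```

On this reading, three one-hour sessions a week count as high-dosage through the rate condition (108 hours over 36 weeks) even though they do not exceed three days, whereas three 20-minute sessions a week (36 hours over 36 weeks) do not qualify.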

Campbell Collaboration (2020)[19]

This systematic review by Dietrichson et al. focused on school-based interventions of several kinds for students in Years 7-12 with, or deemed at risk of, academic difficulties. Of the 71 studies included, 52 were randomised controlled trials. Small-group instruction in particular had one of the largest pooled effect sizes, at 0.38 SD, regarded as a ‘moderate’ effect.

Tutoring can therefore be an effective supplemental support for students whose learning needs have not been met by regular classroom teaching. However, this does not mean it is the most efficient way of ensuring all students make adequate progress. If a third of students are not meeting proficiency standards, it is prohibitive, from a cost, scheduling and staffing perspective, for small-group tutoring to be the only way to support these students.

With such high proportions of students in need of support, and with few catching up in the ordinary course of instruction, there is a need for a preventative approach in which students are supported to keep up within ordinary whole-class instruction. All students should also be screened to identify learning needs, and those identified should be supported with appropriate intervention. Tutoring, broadly conceived, lacks this systematic approach to identifying and responding to student need.

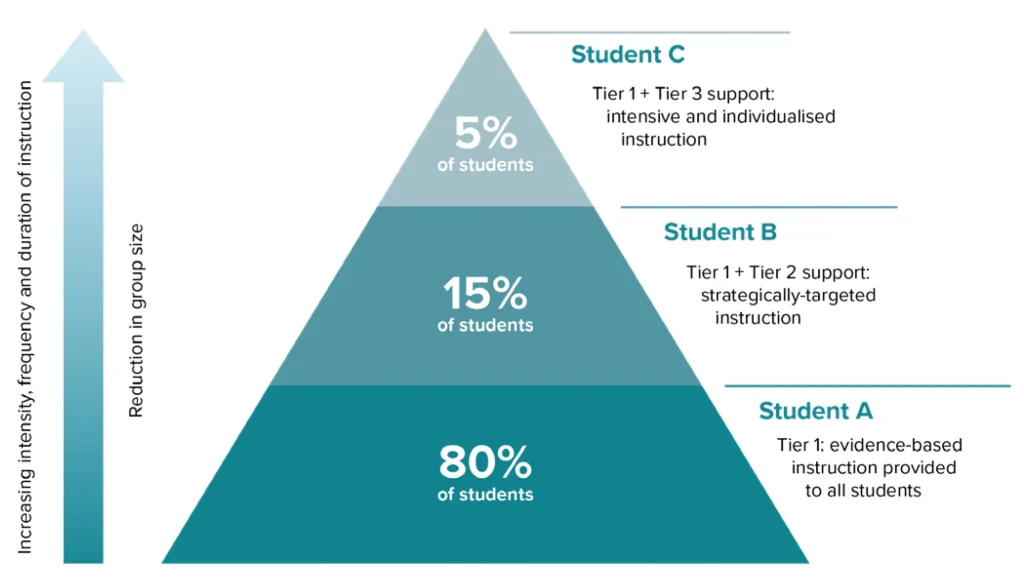

Instead, small-group instruction or tutoring should be seen as one component of a Response to Intervention (RTI)/Multi-Tiered System of Supports (MTSS) model. The systematic structure of this model means all children first receive evidence-based instruction, and struggling students are identified based on regular, reliable and valid universal screening. Schools then analyse the data and implement high-impact intervention programs to fit student need.

Small-group tutoring should fit within a Multi-Tiered System of Supports model

The need for a systematic process for identifying and supporting struggling students emerged in the USA in the context of the Individuals with Disabilities Education Act (IDEA) of 2004. Frustrated with the ‘wait to fail’ approach to identifying students with Specific Learning Disorders such as dyslexia, educational psychologists moved towards an approach in which all students were provided with the highest quality of evidence-based instruction at increasing intensity. Students’ ‘Responsiveness to Intervention’ was monitored to establish whether observed learning difficulties stemmed from problems with instruction or from neurological causes. In this way, struggling students received instructional support when they needed it, rather than contingent on a diagnostic label.[20] This was encapsulated in a three-tiered approach called ‘Response to Intervention’.

More recently, further research has broadened this three-tiered approach to encompass academic, behavioural and social-emotional outcomes, in what is known as a Multi-Tiered System of Supports (MTSS). In MTSS, data is systematically collected and used to determine whether students are responding to existing instruction and whether that instruction needs to be intensified through supplementary ‘Tier 2’ instruction, and perhaps further intensified through individualised ‘Tier 3’ instruction.[21] MTSS has been recognised as the preferred framework for ensuring all students receive the level of support they require to succeed, although controversies remain about the extent of the difference between RTI and MTSS and which is more appropriate.[22] This paper will use the term MTSS and focus on its academic component. MTSS is the term selected by AERO and used in the Better and Fairer review, and is therefore more likely to become the common language in Australia going forward.

Nevertheless, both RTI and MTSS refer to the systematic application of teaching, assessment and additional learning support in line with student needs. Tutoring is one way learning support can be provided. AERO identified four key principles for applying MTSS:[23]

- Using proven teaching methods for all students

- Regular testing of all students to identify gaps in learning

- Delivering frequent small group or 1:1 interventions with a focus on these learning gaps

- Continuous data-based tracking of student progress to ensure interventions deliver real gains.

Figure 5: Representation of a Multi-Tiered System of Supports. Source: Australian Education Research Organisation

Small-group tutoring, therefore, could be embedded as part of Tier 2 support. De Bruin et al. elaborate further on how the model works:[24]

Tier 2 instruction commonly involves targeted, small-group intervention with ongoing monitoring of progress (Barrio et al. 2015; Berkeley et al. 2009). Tier 2 is time-limited, has clear goals and entry and exit criteria that indicate when students will no longer need support… Within RTI and MTSS, instruction across the tiers should be aligned so that Tier 2 supplements and complements Tier 1, but does not replace it (Harn et al. 2011)… Rather, the logic of RTI and MTSS is that evidence-based instruction at higher tiers should be an intensified version of Tier 1 practice, achieved by increasing the frequency and duration of instruction and reducing the group size (Harlacher et al. 2010; Lemons et al. 2014; Powell and Stecker 2014). That is, students access a higher ‘dosage’ of quality instruction. [emphasis added]

In other words, Tier 2 intervention (of which tutoring could be a part) should be an intensification of already-strong instructional practices at Tier 1.
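The entry and exit logic described above can be sketched in a few lines of Python. This is a hypothetical illustration, not AERO’s or De Bruin et al.’s model: it assumes a universal screener reported as percentiles, and the cut points (below the 25th percentile for Tier 2, below the 10th for Tier 3) are invented placeholders. Real schools would use validated screening tools and locally agreed criteria. Every tier keeps Tier 1 instruction, consistent with the principle that Tier 2 supplements rather than replaces it.

```python
from enum import IntEnum


class Tier(IntEnum):
    CORE = 1       # Tier 1: evidence-based whole-class instruction for all students
    TARGETED = 2   # Tier 2: time-limited small-group intervention, on top of Tier 1
    INTENSIVE = 3  # Tier 3: individualised intervention, on top of Tier 1


# Hypothetical cut points on a universal screener reported as percentiles.
TIER2_CUT = 25
TIER3_CUT = 10


def initial_tier(screening_percentile: float) -> Tier:
    """Entry criteria: place a student from a universal screening result."""
    if screening_percentile < TIER3_CUT:
        return Tier.INTENSIVE
    if screening_percentile < TIER2_CUT:
        return Tier.TARGETED
    return Tier.CORE


def review_tier(current: Tier, progress_percentile: float) -> Tier:
    """Exit and escalation criteria applied after a progress-monitoring cycle."""
    if progress_percentile >= TIER2_CUT:
        return Tier.CORE  # exit criterion met: core instruction only
    # Otherwise keep the current tier, or intensify if the new data call for it.
    return max(current, initial_tier(progress_percentile))


tier = initial_tier(20)       # Tier.TARGETED
tier = review_tier(tier, 27)  # Tier.CORE after reaching the benchmark
```

For example, a student screened at the 20th percentile enters Tier 2, returns to Tier 1 once progress monitoring places them at or above the 25th percentile, and moves to Tier 3 if they instead fall below the 10th percentile.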

MTSS in the literature

Reading

Despite the promising nature of the framework, the literature suggests success is contingent on more than adopting an MTSS label. A large-scale 2015 study by Balu et al. of 146 schools’ RTI practices for elementary school reading yielded disappointing results on average. Year 1 students assigned to Tier 2 intervention made the equivalent of a month’s less progress than those receiving ordinary classroom instruction, whereas the impact on Year 2 and 3 students was not statistically significant. In discussing the implications of these results, the authors note that for each year level in this study, a handful of schools showed positive and statistically significant impacts on student learning.[25] The study does not look ‘under the hood’ at what practices were being used, but the differing outcomes likely reflect specific elements of program design, such as the principles nominated by AERO above.

Other evidence suggests instructional practices at both Tier 1 and Tier 2 are significant for generating positive outcomes for students. A 2020 meta-analysis by Gersten et al. of 33 rigorous experimental and quasi-experimental studies of reading interventions within an MTSS framework found positive overall effects of 0.39 SD.[26] This study went deeper than Balu et al. 2015 because it focused on instructional practices and principles. The authors found that every intervention addressed multiple aspects of foundational reading (phonological awareness, phonics/decoding, passage reading fluency, encoding/spelling). In addition, “virtually all” interventions included systematic, explicit instruction. In other words, the instructional content was rigorous and intensive.

A 2018 study by Coyne et al. examined the application of MTSS in four elementary schools in four US school districts in the same state.[27] In this case, schools that participated in the study were carefully identified by the state: they had to have a record of low reading achievement, a willingness to commit to systematic improvement, and be representative of high-need districts. Schools also implemented the full MTSS framework of leadership and data teams, a school-wide reading plan, universal screening and progress monitoring, and commitment to the tiered model. They received specific funding to do this.

Effects were both positive (0.39 for phonemic awareness and 0.36 for decoding) and educationally meaningful (the phonemic awareness gain was equivalent to an 18 percentile point gain and the decoding gain equivalent to a 14 percentile point gain). Results were compared to the estimated growth students would have experienced if they had only received mainstream classroom instruction.

These results are promising, but the authors note there were features specific to this case that may have enabled this success:

- Careful selection of schools;

- Use of MTSS-aligned processes for identification and progress monitoring of students;

- Scripted lessons with clear and consistent instructional routines;

- Intervention sessions four times a week of 30-40 minutes; and

- Coaches worked with educators delivering the intervention to adopt effective teaching practices (modelling, pacing, opportunities to respond, feedback).

In summary, several of the Tier 2 instructional features listed above are associated with explicit instruction. Without them, such significant educational effects from the MTSS framework might not have occurred. Coyne et al. also note ‘eclectic’ Tier 1 practices at participating schools, and that a total of 47% of students were identified for MTSS participation (schools were provided with resourcing to meet this caseload). This caseload might have been smaller had there been “a more aligned and powerful approach to Tier 1 [instruction]” that enabled more students to meet year level expectations in the first instance, which would have made the program less resource-intensive.

Another argument for considering whole-class approaches first comes from a 2021 meta-analysis of reading interventions by Neitzel et al., which found an overall effect size of 0.26 for tutoring-based interventions. The study notes there were too few studies of class-wide or multi-tiered interventions (where Tier 1 and Tier 2 were incorporated or aligned) for the overall effects to be statistically significant, but these approaches yielded effect sizes of 0.27 for multi-tiered approaches and 0.31 for class-wide approaches. This makes aligned multi-tiered and class-wide approaches competitive with tutoring (0.26) on student outcomes, and much more cost-effective overall. The authors conclude these approaches “obtained outcomes for struggling readers as large as those found for all forms of tutoring, on average, and benefited many more students.”[28]

Mathematics

MTSS approaches to mathematics are less common than those in reading, at least in the United States.[29] One program used as a Tier 2 maths intervention is ROOTS, an American small-group program designed to develop whole-number skills in Kindergarten (Foundation) students. ROOTS has been evaluated in a number of studies testing different features of implementation. Some studies aimed to identify the optimal group size for students in intervention, including the implications of group size for students who are further behind. The findings are summarised below:

- Clarke et al. 2017 found students receiving ROOTS made more progress than control groups but this progress did not differ significantly between a group size of two students and a group size of five students.[30]

- Clarke et al. 2020 repeated this comparison but examined whether students with lower initial skills required the smaller group size to make progress. The study found the smaller group size did not benefit the weaker students any more than the larger group size.[31]

Another study of ROOTS examined its impact where an evidence-based Tier 1 core instruction program (Early Learning in Mathematics) was in place for all students. Clarke et al. 2022 report a null finding for the ROOTS intervention in this study. The authors suggest the null findings may be due to the relatively more advantaged demographics of the student group and their higher overall skill level. The authors conclude “Taken together, these results suggest that in educational contexts with lower base rates of risk, when strong, explicit, and systematic core math instruction is in place, ROOTS may have less of an impact on the outcomes of at-risk learners, regardless of their initial math skill.”

To summarise, Clarke et al. 2017 and 2020 suggest the less resource-intensive option of larger groups of five can be implemented, even for the neediest students, without sacrificing academic outcomes, provided the intervention is of sufficient rigour and intensity. On the other hand, Clarke et al. 2022 suggests that when Tier 1 instruction is strong, the effects of intervention can be ‘washed out’. This suggests the most efficient approach is one that starts with high-quality Tier 1 instruction and then adds a rigorous and intensive Tier 2 program.

This review of MTSS literature across reading and mathematics highlights the promise of MTSS approaches with rigorous and intensive Tier 2 programs, but also highlights the complexity of proper alignment of Tier 1 and Tier 2 support. However, the question for policymakers is not whether this is a promising policy idea but whether the reality can match the expectation in the Australian context. The case of COVID-response tutoring in New South Wales and Victoria shows this is not currently the case.

3. COVID-response small-group tutoring

In response to COVID-related school closures, thousands of schools in New South Wales and Victoria were given funding to run tutoring/intervention programs, but were only advised, not mandated, as to what program delivery should look like. The two states issued different program delivery guidance to participating schools.

In both states, government schools were also expected to report relevant student data back to their education department, and this reporting formed the basis of the evaluations and audits conducted in both states to determine the programs’ impact. As these evaluations and audits are the source for the discussion that follows, the results should be interpreted as relating only to government schools, which made up the majority of schools delivering the program.

New South Wales

New South Wales advised its schools to use a model of delivery based on the Grattan Institute’s review of the evidence:[32]

- Groups of 2-5 students.

- Sessions of 20-50 minutes in duration.

- Sessions held at least three times per week over 10-20 weeks.

- Tuition targeted to students’ specific needs.
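The advised NSW model can be expressed as a simple check. The Python sketch below (the `TutoringPlan` fields and both function names are hypothetical) tests whether a plan sits within the advised ranges and computes the tutoring hours each student would receive. It assumes the ranges are inclusive and that more than three sessions a week is also acceptable under ‘at least three times per week’.

```python
from dataclasses import dataclass


@dataclass
class TutoringPlan:
    group_size: int
    minutes_per_session: int
    sessions_per_week: int
    weeks: int


def fits_nsw_guidance(plan: TutoringPlan) -> bool:
    """Check a plan against the advised (not mandated) NSW delivery model."""
    return (2 <= plan.group_size <= 5
            and 20 <= plan.minutes_per_session <= 50
            and plan.sessions_per_week >= 3
            and 10 <= plan.weeks <= 20)


def total_hours(plan: TutoringPlan) -> float:
    """Total tutoring time each student receives over the program."""
    return plan.minutes_per_session * plan.sessions_per_week * plan.weeks / 60


# Two hypothetical plans at opposite ends of the advised ranges.
lightest = TutoringPlan(group_size=5, minutes_per_session=20, sessions_per_week=3, weeks=10)
heavier = TutoringPlan(group_size=2, minutes_per_session=50, sessions_per_week=3, weeks=20)
print(fits_nsw_guidance(lightest), total_hours(lightest))  # True 10.0
print(fits_nsw_guidance(heavier), total_hours(heavier))    # True 50.0
```

Both plans conform to the advice, yet the second delivers five times as many hours per student as the first, which illustrates how much room the guidance left for schools to vary the dosage.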

Schools were responsible for several aspects of the program, including:[33]

- selecting appropriate students to receive small-group tuition.

- identifying and employing tutors.

- assisting and supervising tutors that are delivering the tuition.

- monitoring student progress and communicating with parents or guardians.

- reporting to the NSW Department on program activities and student progress.

Identification and monitoring of students: Schools were given flexibility about how they selected students for participation. Based on survey data collected for the first evaluation, the most common forms of identifying students to take part, according to principals and program coordinators, were teacher opinion/judgement (79%), department-provided check-in assessments (70%), and observations (67%). Both earlier and later evaluations noted check-in assessment scores in reading and numeracy for those receiving the program were lower on average than check-in assessment scores of non-participants, suggesting the program was targeting needy students.[34] In the evaluation for 2022, check-in assessments and class-based assessments were the most common tools used to monitor student progress.[35]

Staffing: As noted, schools selected their own staff, who were typically existing staff (63%) or known casuals (47%). The definition of ‘educator’ encompassed active, qualified teachers; retired or on-leave teachers; final-year teacher education students; education paraprofessionals; and university academics and postgraduate students. It was expanded in 2021 to include School Learning Support Officers (SLSOs) or non-government equivalents, and allied health professionals, considered ‘non-teacher educators’.

Initial evaluations suggested 74% of educators were teachers and a further 15% were non-teacher educators.[36] The most recent evaluation stated 66% of educators were active teachers and a quarter were SLSOs, with most of the remainder being university students and education paraprofessionals.[37] The evaluation for 2022 stated that schools found it difficult to recruit staff to deliver the program and were using educators recruited for the program to cover general absences of classroom teachers.[38]

Implementation: The first evaluation noted 83% of students were supported through withdrawal from a timetabled class, and 13% received in-class support. Only 1% of students were supported before or after school.[39] The latest evaluation showed 81% of students overall were supported through withdrawal and 15.6% received in-class support. Withdrawal was more common in primary than in secondary settings.[40] A very small proportion of students used online tuition run by the Department of Education in 2022.[41]

To support schools with program delivery, the NSW Department of Education ran professional learning sessions and provided self-directed professional learning modules, in addition to the material on the website. In 2021, 54% of tutoring educators used the professional learning modules.[42]

Victoria

The NSW government conducted and published four evaluations in total of the ILSP, enabling a relatively clear understanding of key program features as they were enacted in schools. In contrast, the limited findings published by the Victorian government (which focused on outcomes) mean the program delivery model can only be discussed in terms of what schools were advised, and not what was done in practice.

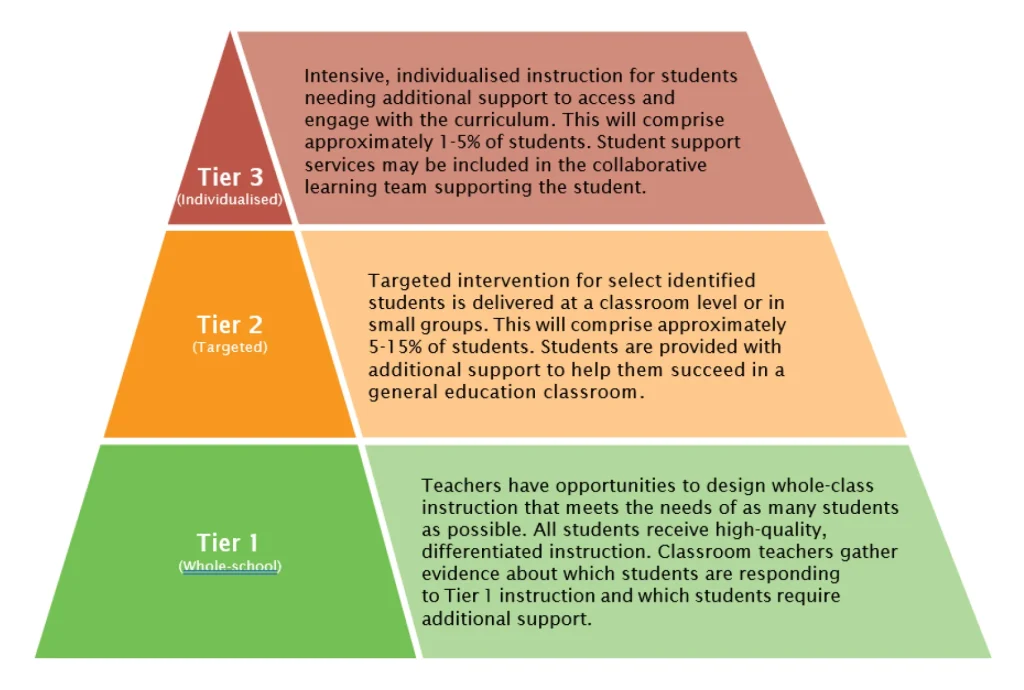

The Victorian government advised schools to consider tutoring as an example of Tier 2 intervention, in keeping with a Response to Intervention (RTI) model. The representation of RTI/MTSS below is from the 2023 Tutor Practice Guide from the Victorian Department of Education (the TLI is Tier 2 support).

Figure 6: Response to Intervention model promoted for the Tutor Learning Initiative © State of Victoria (Department of Education) 2023

Victoria’s explicit use of the RTI/MTSS framework differed from the approach in NSW. However, the Victorian advice departs from traditional models in one key way: it permits schools to use ‘classroom level’ assistance as a form of Tier 2 intervention,[43] whereas Tier 2 support is intended to be additional to high-quality Tier 1 instruction.

The Department provided some indications of which practices are more effective. The What Works guide notes that 16% of principals believed explicit teaching had the greatest impact on student growth in tutoring. It also notes schools preferred out-of-class/withdrawal or hybrid tutoring because it minimised “the impact of cognitive load” compared with in-class tutoring.[44] The Tutor Practice Guide also contains a list of practices relating to structuring sessions and using explicit teaching.[45] While there is advice about conducting tutoring in line with evidence of best practice, it is unclear what implementation looked like in practice because this was not reported.

Identification and monitoring of students: Program guidelines indicate students should be identified on the basis of NAPLAN (if they are in the ‘needs additional support’ or ‘exempt’ categories) or, where NAPLAN results are unavailable (because the student was absent or is in a non-participating year level), on the basis of other data showing low skills. Schools were also encouraged to use ACER’s PAT and other tools in the Victorian Digital Assessment Library for progress monitoring of students.[46]

Staffing: Tutors must be teachers registered with the Victorian Institute of Teaching (VIT), with either full registration or Permission to Teach; pre-service teachers working under supervision; speech therapists or occupational therapists; or retired teachers who have re-registered.[47] While 72% of school leaders reported a preference for employing staff already working within the school as tutors,[48] schools retain responsibility for hiring tutors, so it is not clear who was actually hired for these roles.

Implementation: 46% of primary schools used a withdrawal method, with a further 41% using a combination of in-class and withdrawal support. Among secondary schools, 62% used the hybrid method, and an unstated percentage used out-of-class tutoring for Year 11 and 12 students.[49] Advice to schools from the Department of Education on supporting students with literacy and numeracy difficulties, which predates the TLI program, advocates that students receive in-class support from the classroom teacher during a group work phase (see Box 5).

Though it’s not clear which models were predominant, the Victorian Auditor-General’s Office report into the effectiveness of the TLI examined overall implementation effectiveness.[50] The report used the Department of Education’s own framework to see what proportion of schools utilised effective models, which included the following factors:

- Targeting: selection of students for assistance;

- Appropriateness to context: leading effective tutoring practice across the whole school;

- Appropriateness to student need: designing a tutoring model that responds to students’ learning needs and monitors their learning growth; and

- Timeliness: whether schools were able to offer tutoring by week 6 of each term.

Many of these features align with the necessary elements for success as described above, with ‘fully effective’ and ‘partially effective’ schools incorporating at least some elements of the RTI framework advocated in the Victorian government guidance. Some key findings of VAGO’s assessment of implementation are listed below:

- 96% of schools were able to deliver tutoring in a timely fashion.

- 26% of schools had fully effective practice when targeting students with a further 52% being partially effective.

- This differed by school type, with 29% of primary schools being fully effective compared with 17% of secondary schools and 18% of combined schools.

- 30% of schools had fully effective practice in tutoring model and dosage, with a further 50% partially effective.

- This differed by school type, with only 12% of secondary schools being fully effective in this area compared with 35% of primary schools.

- Only 14% of schools effectively monitored learning growth, with a further 45% partially effective.

- This differed by school type, with 17% of primary schools being fully effective in this area compared with only 6% of secondary schools.

This assessment should be borne in mind when considering VAGO’s findings on academic impact, summarised below.

Summary of results

Perceived outcomes

In New South Wales, only the Phase 2 (covering the 2021 school year) and Phase 3 (covering the 2022 school year) evaluations reported student outcomes. In Victoria, the Auditor-General’s report focused on student academic outcomes. Data on perceived outcomes, based on surveys, were published in limited, summary form.

New South Wales

The Phase 2 evaluation showed most school staff thought small group tuition either greatly or somewhat increased student learning progress, with classroom teachers reporting the lowest level of agreement (87%). Classroom teachers were also surveyed about their perceptions of non-academic outcomes for students: 83% thought student confidence had improved, falling to 69% for student attitudes to school. Most did not perceive any impact on student homework behaviour, and half perceived no change in school attendance. These perceptions were less positive than those reported by the educators responsible for delivering the program.

The Phase 3 evaluation showed most school staff (program coordinators, educators, principals and classroom teachers) thought “the program improved students’ learning progress and improved students’ confidence, engagement and motivation.” Classroom teachers were the least likely to agree with the statement, with 85% expressing agreement. Students were a little less positive, with 85% of primary and 75% of secondary students feeling that they were doing ‘a little’ or ‘a lot’ better at school after participating in the program.[51]

Victoria

The document “Key insights from the first year of implementation of the Tutor Learning Initiative in 2021”, derived from the unpublished Deloitte Access Economics report, stated “the majority of primary school (88%) and secondary school (75%) principals surveyed reported improvements in students’ achievement that they attributed to the TLI” and that schools also reported improvements in student engagement and teacher practice.[52]

Academic outcomes

In contrast to perceptions of the positive impact of tutoring programs, achievement data in both New South Wales and Victoria failed to confirm any measurable effect on student learning.

New South Wales

The Phase 2 evaluation concluded that with effect sizes so small, it was not possible to “reliably determine whether students who received tuition grew more than similar students who did not receive the program.” Across some year levels and domains, participating students experienced less growth than non-participants; for others there was no discernible difference. Disappointingly, in no year level or domain did students receiving the program achieve more growth than similar non-participating students.

The Phase 3 evaluation showed that, in reading, program participants achieved the same academic growth over one year as similar non-participating students. The same was true in numeracy, except for participating students in Years 5 and 6, who showed slightly less growth. Overall, the evaluation says “[o]n average, student growth was the same between students who participated in the program and similar non-participants, so we cannot confidently attribute students’ growth in learning to the effect of the program alone.”

Victoria

In addition to the unpublished Deloitte consulting report, the state’s auditor-general (VAGO) released a June 2024 report which sheds more light on the academic impacts of Victoria’s TLI than the aforementioned ‘Key Insights’ document.[53] Due to the lack of detail in the Key Insights document, this section will refer exclusively to the impacts as reported by VAGO.

The VAGO report examined student outcomes for the 2023 school year, well beyond the period of lockdowns and after the worst of COVID-related disruption to staffing had eased. As discussed earlier in this report, VAGO concluded the benchmark for catch-up success was that “tutored students need to learn faster than they would have without tutoring and faster than their classroom peers who are not receiving tutoring”. The report examined student learning growth in Years 3-10 according to PAT Reading (PAT-R) and PAT Mathematics (PAT-M) and found:

- When comparing tutored vs untutored students with similar starting scores on both PAT-R and PAT-M, tutored students made no more progress than untutored peers.

- In many cases, tutored students made slightly less progress than untutored peers, although the educational significance of this difference is not clear.

- When focusing on disadvantaged students in particular, learning growth was the same between tutored and untutored students. Similarly, learning growth was the same between the two groups in metropolitan, regional and rural Victoria.

- Across both PAT-R and PAT-M, students in Years 3 to 6 made more progress than students in Years 7 to 10, and younger students generally made more progress than older students.
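To make the idea of comparing “tutored vs untutored students with similar starting scores” concrete, the sketch below groups students into bands of similar starting score and compares mean growth within each band. It is a deliberately crude illustration, not VAGO’s method: the 10-point bands, the function name `growth_gap_by_band` and the sample scores are all hypothetical. A negative value means tutored students grew less than untutored peers who started at a similar level; zero means no difference.

```python
from collections import defaultdict
from statistics import mean


def growth_gap_by_band(records, band_width=10):
    """Mean growth of tutored minus untutored students, within starting-score bands.

    records: iterable of (start_score, end_score, tutored) tuples.
    Bands where only one group is present are skipped, because there is
    no like-for-like comparison to make.
    """
    bands = defaultdict(lambda: {True: [], False: []})
    for start, end, tutored in records:
        band = int(start // band_width) * band_width  # lower edge of the band
        bands[band][bool(tutored)].append(end - start)

    gaps = {}
    for band, groups in sorted(bands.items()):
        if groups[True] and groups[False]:
            gaps[band] = mean(groups[True]) - mean(groups[False])
    return gaps


# Hypothetical scores for illustration only; not drawn from VAGO or PAT data.
sample = [
    (92, 100, True), (95, 105, True), (91, 100, False), (96, 105, False),
    (110, 117, True), (112, 120, False),
    (130, 135, True),  # no untutored student in this band, so it is skipped
]
print(growth_gap_by_band(sample))
```

Banding is the simplest way to hold starting level roughly constant; a real evaluation would also account for measurement error, school context and regression to the mean.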

Other effects

In New South Wales, staffing guidelines were changed to expand the pool of possible personnel to act as educators, particularly SLSOs and allied health professionals. Even so, schools continued to report problems with overall staffing which impacted upon their ability to deliver the program effectively. Given the vast majority of program educators are still qualified teachers, it is plausible that the program has put pressure on staffing without necessarily bringing a benefit to students. At a time of reported teacher shortages, these costs should be a consideration. Victorian guidelines allowed a similarly broad pool to participate as tutors, but it is unclear what proportion were qualified teachers and whether that may have exacerbated shortages in other areas.

There may have been other, less quantifiable, benefits for schools in the form of teacher professionalism. Victoria’s ‘What Works’ guide for schools for the TLI notes 69% of primary principals and 75% of secondary principals reported an observable increase in collaboration between members of staff and that it created opportunities for “incidental professional development”.[54] The VAGO report noted there was no quantifiable impact on student attendance, despite the perceived benefits to student engagement as previously reported by the Victorian Department of Education.

Box 3: England’s tutoring initiative

Beginning in November 2020, the Department for Education in England invested approximately £1 billion in the National Tutoring Programme (NTP). As in Australia, this policy was conceived as a direct response to COVID pandemic-related school closures, to help struggling students catch up.[55]

The NTP allowed schools to select from three routes of support: tuition partners, where the government subsidised 70% of tuition provided by approved partners; academic mentors, where the most disadvantaged schools could hire additional staff (with their salaries subsidised at 95%) to act as full-time tutor support; and school-led tutoring, where schools could fund their own tutoring program internally, with a 75% subsidy. The latter was added in the 2021-22 school year and meant schools could deliver tutoring in any subject, not just English and Maths.[56]

In the study for the 2022-23 school year, 85% of schools used school-led tutoring (SLT), though not necessarily exclusively. Many schools that used SLT delivered it through internal staff members, meaning most tutors were qualified teachers who understood the school and curriculum context. These teachers were offered online training to support the delivery of tuition. This makes the implementation of this part of the program highly similar to the Australian cases.

The Department for Education commissioned and published several reports which evaluated the process and impacts of the NTP.[57] [58] [59] Select findings are summarised below:

Factors associated with student success:

- Use of school-led tutoring (rather than academic mentors or tuition partners).

- Selection of students based on curriculum-aligned assessment that identified gaps in student knowledge.

- A strong curriculum that directly addressed the core knowledge required for student success and formative assessment to monitor progress.

Perceived benefits for students:

- Students were enthusiastic, and staff thought the program improved the confidence and resilience of participating students and helped them re-engage with school.

- Senior leaders, teachers and tutors perceived that the NTP had a positive impact on students’ attainment, progress and confidence and it was helping to improve outcomes across the school more broadly.

Measurable academic benefits:

- School- and student-level results for KS2 (Years 3-6) and KS4 (Years 10-11) showed participation in SLT was associated with small improvements in Maths outcomes.

- Evidence for English was limited, and found only at the school level, at KS2 and KS4.

- Statistically significant results were equivalent to one month’s additional progress (Maths) or less (English) over the school year.

The evaluations note the limitations of the data for drawing conclusions about impact on student achievement. Even so, it is notable that the statistically significant results were equivalent to one month’s additional progress or less, far smaller than the 4 months’ additional progress suggested by Education Endowment Foundation research (Box 2). This is yet another reason to be cautious about rapid rollout of tutoring programs at scale.

Overall, although school staff perceived some benefit from the combined investment of over $2 billion in these tutoring initiatives, the lack of evidence that they achieved their primary purpose (to raise student achievement and ‘close the gap’) is concerning, and warrants further scrutiny before these policies are scaled up nation-wide.

Barriers to implementing catch-up tutoring at scale

Thus far, tutoring at scale in NSW and Victoria, as well as in England (see Box 3), has demonstrated no educationally significant impact on student achievement, despite the considerable cost of these programs. This matters because high-quality research, including work by the Education Endowment Foundation, has shown that tutoring can be a very effective tool to help student learning (Box 2). This paper now turns to the question of why the reality has not lived up to expectations.

As a systematic and permanent approach, MTSS represents the best evidence-based framework for embedding small-group tutoring, and the Better and Fairer review recommended catch-up tutoring embedded within an MTSS framework.[60]

One clear theme in the review of COVID tutoring program guidelines and practices used by schools is that they were not systematic in the way that MTSS requires. While Victoria used the language of RTI, many enabling factors were absent. The VAGO report found that implementation effectiveness varied across schools and school types.

The COVID tutoring case studies are useful because they serve as an indicator of what happens if tutoring is implemented at scale without sufficient guardrails and guidelines — including a pre-planned system of staggered roll-out and evaluation. In the absence of a structured approach, it is doubtful most Australian schools would be able to implement small-group tutoring as part of MTSS effectively.

Adopting the MTSS label is also not enough to guarantee success. As the AERO model and the earlier literature review show, four key ingredients are required for efficient and effective MTSS implementation. If these are absent, as occurred in the large-scale COVID-era tutoring programs, students will not benefit. Policymakers must give proper attention to the enabling factors for successful MTSS and how to develop these at scale before significant investment is made.

Evidence from various sources, in addition to what can be gleaned from the tutoring programs, suggests there are genuine capacity problems within Australian education at present to establish rigorous MTSS approaches. This section analyses four problems evident in Australian schools’ access to, or implementation of, the key ingredients for MTSS.

- Inconsistent quality of instruction at Tier 1

- Lack of access to screening and diagnostic tools to correctly identify student need

- Lack of access to evidence-based intervention programs

- Lack of access to effective progress monitoring tools

Barrier 1: Inconsistent quality of instruction at Tier 1

Why it matters

As explored earlier, inconsistent Tier 1 instruction (where fewer than 80% of students are meeting proficiency) poses three problems for MTSS implementation: students who would not otherwise have needed support now require it (instructional casualties), the resources required to assist this larger proportion of students are inefficiently allocated, and the efficacy of the intervention itself can be compromised.

The students themselves have missed out on the chance to succeed the first time. Research affirms the importance of Tier 1 instruction, and de Bruin notes “Tiers 2 and 3 are not intended to compensate for an absence of consistent or quality teaching at Tier 1, but rather to address gaps in student achievement by intensifying students’ access to quality teaching and support.”[61] With adequate Tier 1 instruction, the proportion of students in Tier 2 will be smaller, and students with specific learning needs, rather than instructional casualties, will make up a greater share of those who do require Tier 2 support.

Current practice

Consistent and high-quality instruction at Tier 1 would involve explicit instruction of a well-sequenced and knowledge-focused curriculum. However, analysis suggests that, in several key areas, the Australian Curriculum and its variants do not adequately support teachers to make wise and informed decisions about sequencing knowledge to support student learning.[62]

Survey evidence from overseas suggests teachers often rely on resources that are cobbled together to implement curriculum, which at best suggests varying quality.[63] [64] The Victorian Curriculum and Assessment Authority (VCAA) has noted school-based curriculum implementation “has not always been accompanied by a sufficient level of advice and support to schools to enable the development of system-wide high-quality teaching and learning programs.”[65]

There are examples of schools which have successfully switched to high-impact, evidence-based teaching methods for all students (Tier 1) and seen a direct effect on intervention caseload. For example, one study of the Canberra-Goulburn Catholic Archdiocese’s transition towards evidence-based, explicit whole class instruction practices for reading examined 43 schools. The study reported there was a significant reduction in the proportion of students achieving at a level indicative of needing intervention, as measured by word reading results.[66]

Survey data on teachers’ use of evidence in teaching and their use of instructional practices supported by evidence are mixed. Both AERO and Monash University’s Q Project have conducted surveys about teachers’ use of education research. While these show a moderate proportion of teachers state they use research and evidence in education practice, both sets of surveys use a broad definition of ‘research’ and ‘evidence’, so affirmative results are not in themselves a guarantee of good practice. More detail about the findings of these surveys is available in Box 4.

Box 4: Evidence-based practices in Australian schools

The Q Project showed that the majority of teachers relied on leaders and colleagues as sources of research evidence (76.8% and 74.1% respectively). Furthermore, the surveys categorised 40% of teachers as ‘passive followers’ and 7.6% as ‘ambivalent sharers’ who “rarely use research in practice, if at all, and value their own experience and knowledge over research”. A further 12.7% of educators (‘collegial pragmatists’) were deemed to believe in research but at times to trust their own experience more, and did not often use research.[67]

The AERO survey found 67% of respondents reported using forms of teacher-generated evidence ‘often’ or ‘very often’, but this fell to 41% for research evidence. In addition, only 40% of teachers reported they ‘often’ or ‘very often’ consulted academic research to improve their knowledge about whether an instructional practice was effective, increasing to 60% for leaders.[68] Only 38% of teachers reported they consulted a document that summarises effective instructional practices when planning a lesson or unit, increasing to 50% for leaders. While AERO’s separate collation of international survey data suggests a reasonably high proportion of Australian teachers use evidence-based practices, the report notes these data may overestimate their frequency.[69]

In addition, these questions do not adequately capture the specific set of practices that align with explicit instruction.

Taken together, it is reasonable to conclude that, at best, teachers’ use of explicit instruction to deliver learning based on a well-sequenced and knowledge-focused curriculum is likely to be variable. Evidence-based, high-impact instruction at Tier 1 enables more effective delivery of Tier 2 small-group tutoring support, which is, by definition, an intensification of Tier 1 instruction. Therefore, sustainable and effective small-group tutoring depends on the quality of curriculum and instruction at Tier 1, and greater consistency in these areas is crucial to successful implementation of MTSS at scale.

This is a possible explanation for why the large-scale tutoring case studies discussed elsewhere in this paper have not yielded overall benefits. Where tutoring represented an intensification of high-quality instructional practices, it may have been more successful. Where it represented an additional dosage of mixed-quality instructional practices, it is unlikely it yielded the sort of benefits in keeping with the broader literature.

Barrier 2: Lack of access to screening and diagnostic tools to correctly identify student need

Why it matters

Within the MTSS framework, screening and assessment are required to monitor all students and identify those in need of further support. Screening tools assess student capacity on measures that are predictive of success, and students flagged by screening should undergo further diagnostic assessment to determine what form of intervention is required.

Success depends on schools having access to both sets of tools, knowing which is required in a given situation, and having the data skills to use the results. If any of these is lacking, students may be inaccurately identified for intervention. A recent review of MTSS evidence commissioned by AERO concluded that brief and basic assessments linked to key elements of curriculum are appropriate for use to identify students in need of support.[70]

Current practice

Information on current practice is limited. A 2017 Australian government review recommended the adoption of the Year 1 Phonics Screening Check to assist with early identification of decoding-related difficulties, and the development of an equivalent numeracy measure to assist with early identification of problems in numeracy,[71] after which the Year 1 Number Check was developed. Of the two, only the Year 1 Phonics Screening Check has since had significant uptake; it is mandated in several Australian states.[72]

Beyond this, tools recommended by policymakers and used within schools are often not fit for purpose. Most states and territories advise or mandate that schools use interview-based tools (such as the English Online Interview in Victoria or the Maths Assessment Interview in many jurisdictions) which require significant resources to deliver and provide limited guidance on priorities for instruction.

The AERO/ACER survey about the use of interventions for struggling learners in early secondary school asked educators how students were identified to receive learning interventions. The survey found achievement tests such as NAPLAN were the most common method (76% for literacy, 75% for numeracy), with PAT a close second (60% for literacy, 70% for numeracy), followed by school-based assessments or teacher judgement (54% for literacy, 67% for numeracy).[73] A third of school staff said they found it difficult to identify students who are struggling.[74]

While NAPLAN and other achievement testing (such as PAT), as recommended by NSW’s ILSP and Victoria’s TLI, provide a useful health check for education systems, they do not provide the diagnostic information required to make informed judgements about student progress. Similarly, further information about the design of NSW’s check-in assessments and Victoria’s Digital Assessment Library (also advised to be used for identifying students for tutoring) is not publicly available. If these are achievement tests rather than screening tools, the same problem arises.

In addition to the type of tool, frequency is also a consideration. MTSS models suggest using broad screening tools at least twice, and commonly three times, per year to identify students falling behind grade-level expectations.[75] [76] Such students are then the subject of further diagnostic assessment to ascertain the nature of their academic difficulties so that appropriate instructional approaches can be chosen. It is unclear how many schools already follow such practice.
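The screening step can be sketched as a simple cut-score check: students scoring below a benchmark are flagged for follow-up diagnostic assessment, and the share at or above the benchmark can be compared, as a rough check, with the 80% proficiency figure used to judge Tier 1 instruction under Barrier 1. This is a minimal sketch under stated assumptions: the function name `screen_students`, the student identifiers and the cut score are hypothetical, and real screening tools publish their own benchmarks and scoring rules.

```python
def screen_students(scores, cut_score, tier1_target=0.80):
    """Flag students scoring below a screening cut score.

    scores: mapping of student identifier to screening score.
    Returns (flagged students, share at or above the cut score,
    whether that share meets tier1_target).
    """
    if not scores:
        raise ValueError("no screening scores supplied")
    flagged = sorted(student for student, score in scores.items() if score < cut_score)
    share_at_benchmark = 1 - len(flagged) / len(scores)
    return flagged, share_at_benchmark, share_at_benchmark >= tier1_target


# Hypothetical class of five students and a hypothetical cut score of 35.
flagged, share, tier1_ok = screen_students(
    {"A": 45, "B": 30, "C": 52, "D": 28, "E": 60}, cut_score=35
)
# B and D are flagged for diagnostic assessment; only 60% are at benchmark,
# which falls short of the 80% target.
print(flagged, share, tier1_ok)
```

Running this check at each screening point (for example, three times a year) would show both which students need diagnostic follow-up and whether the proportion needing it is large enough to suggest a Tier 1 problem.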

Barrier 3: Lack of access to evidence-based intervention programs

Why it matters

Once students have been identified for intervention, an appropriate program must be chosen which will assist them to progress faster than their peers, in order to close the achievement gap and reach grade-level performance. Students who have previously achieved slower progress cannot ‘catch up’ unless their progress is significantly accelerated. This requires a rigorous, evidence-based intervention which is higher in intensity than Tier 1 instruction, to give students sufficient opportunities to practise and thus close the gap.
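The arithmetic behind ‘catching up’ can be sketched briefly. A gap closes only at the rate by which a student’s growth exceeds that of their peers, so a student who merely matches peer growth never catches up, and a small margin of extra growth takes a long time to close even a modest gap. The scale, the units (score points per term) and the function name `terms_to_close_gap` are hypothetical.

```python
import math


def terms_to_close_gap(gap, peer_growth, student_growth):
    """Terms needed for a student to close a score gap with grade-level peers.

    gap: how far (in scale-score points) the student is behind peers.
    peer_growth, student_growth: average points gained per term.
    Returns None when the student is not growing faster than peers,
    because in that case the gap never closes.
    """
    if gap <= 0:
        return 0  # already at or above the peer level
    extra_growth = student_growth - peer_growth
    if extra_growth <= 0:
        return None
    return math.ceil(gap / extra_growth)


# Hypothetical figures: a student 12 points behind peers who gain 5 points a term.
print(terms_to_close_gap(12, 5, 5))  # matching peer growth: None
print(terms_to_close_gap(12, 5, 6))  # one extra point a term: 12
print(terms_to_close_gap(12, 5, 8))  # three extra points a term: 4
```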

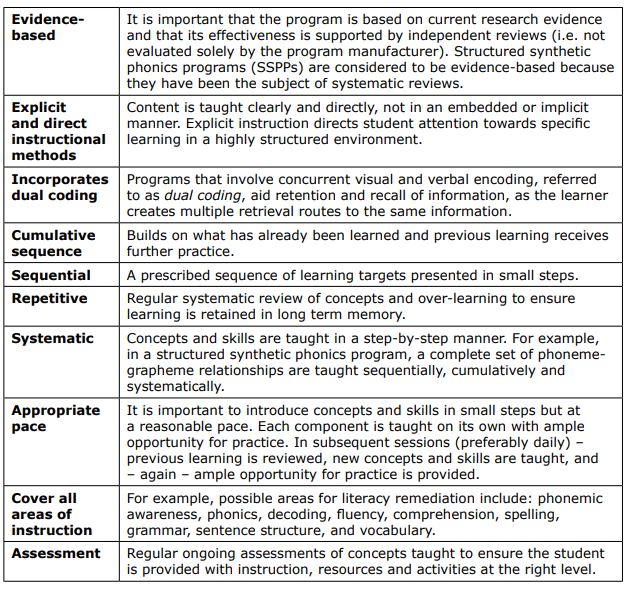

Interventions can be said to be based on evidence if they use instructional principles such as explicit instruction which are supported by broader literature (‘research-based’), or if they have been subject to research trials and high-quality studies that show them to be effective (‘evidence-based’). Table 1, below, contains a list of principles for intervention that schools should use to guide their practice.

Table 1: Principles of intervention, reproduced from AUSPELD’s Understanding Learning Difficulties: A Practical Guide[77]

Current practice

Little is known about interventions used in Australian schools. One evaluation for NSW’s tutoring program suggested about a third of government schools used a third-party program to form at least part of tutoring delivery. The evaluation notes there is a “large variety… and the overwhelming majority were third party literacy programs”, of which the most popular were programs from the MultiLit suite.[78] Similarly, the AERO/ACER survey of older struggling learners found half of schools used a third-party literacy intervention and about a third (35%) used a third-party numeracy intervention.[79] The same survey indicated two in five respondents (41%) lacked confidence in the approach their school takes to support students in literacy, and this increased to almost half (47%) for numeracy.[80]

Unclear or inconsistent system-level guidance about appropriate interventions can have lasting effects. Reading Recovery was the Victorian Department of Education’s recommended literacy intervention program until 2014, with varying sources suggesting anywhere between a quarter and half of Victorian government primary schools used the program around that time,[81] [82] as did two-thirds of Victorian Catholic schools as of 2016.[83]

The evidence for Reading Recovery has been under challenge for a long time, and more recent work suggests studies which attested to its effectiveness were poorly designed and impacts were short-lived.[84] Policy took a while to catch up, but a brief online search suggests Reading Recovery and other programs based on similar instructional principles (such as Fountas and Pinnell’s Leveled Literacy Intervention) still operate in some Victorian schools.

A review of literacy interventions in Australian schools found some programs in use with a high-quality evidence base, but also some that lacked evidence and/or alignment with principles of high-quality literacy instruction:

Given the (often enormous) expense of purchasing the commercial materials, as well as staffing the intervention programs, these interventions do not represent an effective use of teaching time, student support or school resources. Those programs with stronger and clearer evidence represent alternatives that can enable schools to use their resources more efficiently and provide more effective support to students.[85]

It is difficult to draw firm conclusions about how many schools are using published programs for literacy and numeracy intervention and, of these, which are evidence-based and implemented with fidelity. However, the limited data on this topic suggests there is a significant proportion of schools who are using no program at all, as well as others who may use a program lacking a sound evidence base.

When schools choose off-the-shelf, published programs, these programs need to have demonstrated efficacy in raising student achievement in the relevant area of need and/or apply evidence-based principles of instruction. Left to make the decision independently, schools are often using no program at all — which means interventions may lack the required intensity — or may use programs that are not supported by evidence and the instructional practices of which have been discredited.[86]

Another area of concern is how interventions are implemented. Although tutoring funding in New South Wales and Victoria encourages schools to deliver Tier 2 assistance by withdrawing students from class, policy and guidance can still send mixed messages about which implementation decisions are likely to have the highest impact (see Box 5).

Barrier 4: Lack of access to effective progress monitoring tools

Why it matters

Once evidence-based interventions have been chosen, their impact must be monitored. Tutoring programs carry a high financial cost, and students bear an educational cost when they miss other learning to participate in tutoring or an intervention. It is not enough to say that students participating in tutoring experienced ‘growth’ in outcomes; students in this ‘treatment’ group must have experienced more growth than similar students who did not receive the intervention, and the difference should be large enough to represent a meaningful educational effect. In other words, it has to be demonstrated that what students are being asked to do generates better outcomes than the business-as-usual case.
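The comparison described above can be expressed as a standardised difference in gains. The formula below is one common way of doing so, offered as an illustration rather than as the method used in any of the evaluations discussed. Here $\bar{G}_T$ and $\bar{G}_C$ denote the mean gain (post-test score minus pre-test score) of tutored students and of a comparable group who were not tutored, and $SD$ is the pooled standard deviation of the outcome measure:

$$
ES = \frac{\bar{G}_T - \bar{G}_C}{SD}
$$

For example, with purely hypothetical figures, if tutored students gain 12 points on average, comparison students gain 9 points and the pooled standard deviation is 15 points, then $ES = (12 - 9)/15 = 0.2$. Both groups ‘grew’, but, assuming the groups are otherwise comparable, only the 3-point difference can be attributed to the intervention, and that is the figure to weigh against its cost.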

When progress is found to be suboptimal, educators can change the way an intervention is delivered, or the type of intervention delivered, to better meet students’ needs. Progress monitoring tools must therefore reliably measure overall growth in the targeted skill (e.g. reading), while being quick to administer and sensitive to growth when used regularly (e.g. fortnightly).[87][88][89]

Current practice

Information on how Australian schools monitor the progress of students receiving some form of intervention is sorely lacking. The only data on this topic come from the first evaluation of NSW’s ILSP, which asked government school principals and educators, “How will you monitor student progress?” The most common responses were observations, teacher opinion/judgement, and literacy and numeracy progressions data. It is impossible to say how representative this survey of staff in one state (n=750 principals, n=336 educators) is of broader Australian practice, but none of these responses describes an adequate progress-monitoring tool: none is a reliable measure of growth that is quick to administer and sensitive to change over short intervals.

Tutoring will require capacity improvements in order to be scalable

The preceding analysis has shown that, despite recent enthusiasm for tutoring as part of an MTSS framework, the necessary capacity (in rigorous curriculum and teaching, screening, intervention programs and progress monitoring) is not consistently present. Without it, these approaches are unlikely to deliver benefits commensurate with what will undoubtedly be a considerable price tag. Box 5, which describes some of the advice the Victorian Department of Education provides to schools, illustrates the ad-hoc approach advocated by some policymakers.

Box 5: Case study guidance for literacy and numeracy intervention in Victoria

The RTI model used in guidance for Victoria’s TLI, and in other advice about students with literacy and numeracy difficulties, states that Tier 2 support can be delivered at ‘a classroom level’ or ‘in small groups’. Effective Tier 2 support can be delivered within a classroom if it is intensive and carefully positioned within a lesson, but this is not the advice given to schools. Both guides issued by the Victorian Department of Education for students with literacy and numeracy difficulties[90] use case studies which, despite resembling RTI/MTSS, do not meet the criteria for effective implementation because they are neither systematic nor rigorous.

Literacy: The case study ‘Sarah’ is a Year 8 student whose need for additional assistance is determined by teacher judgement rather than universal screening. The nature of Sarah’s difficulty (phonemic awareness and decoding) is again determined by teacher judgement. Once goals are set, Sarah receives additional phonics instruction from her teacher while the rest of her class work in other groups. This assumes the secondary-trained English teacher knows how to remediate decoding difficulties in a Year 8 student. In lieu of objective progress monitoring tools, progress is determined by teacher judgement.

Numeracy: The case study ‘Mason’ is a Year 5 student whose end-of-year report indicates he is a year behind. His teacher judges that he struggles with place value and uses his fingers to count. The teacher then spends a further two months monitoring Mason’s progress, including through assessments (no specific assessments are named), before the teacher and the numeracy specialist set learning goals around mathematical notation and vocabulary, and number representation. The teacher incorporates some teaching into Tier 1 and helps Mason with concrete representations, such as wooden blocks and cutting and folding, while the other students are doing group work. Progress is monitored through dynamic assessment and ‘mini learning progressions’. Whether Mason is progressing overall is determined by teacher judgement.

These case studies are further evidence of the lack of systematic and rigorous approaches to MTSS in Australian schools. In the guidance above, adequate screening, diagnostic and progress monitoring tools are absent, and it is simply implausible that a teacher could provide in-class support of the quality and intensity required for ‘Mason’ and ‘Sarah’ to make adequate progress without disadvantaging the learning of other students in their class.

In implementing the rapid response that post-COVID schooling circumstances required, the TLI and ILSP approaches lacked the planning and rigour that would have enabled them to achieve the desired outcomes for students. Although COVID created urgency, the student achievement problem is real and ongoing. Support for student achievement at scale is needed, and it requires careful planning.

5. Policy implications

The current evidence suggests that tutoring alone has failed to deliver the impact suggested by large-scale studies and meta-analyses of other programs. To improve capacity within Australian education to deliver small-group tutoring as part of an MTSS framework, and therefore in a way more likely to have a meaningful impact on students, policymakers should provide concrete supports in four key areas.

Firstly, improve Tier 1 quality and consistency. Achievement data for Australian students, showing roughly a third of students across year levels and domains lack proficiency, suggest that “[u]sing proven teaching methods for all students” at Tier 1 is by no means guaranteed. Investing in whole-class (Tier 1) strategies will reduce the ‘caseload’ of students in need of further support, benefiting students as well as making more efficient use of resources.

Secondly, provide valid and reliable identification strategies. As the AERO model shows, students should be identified for further support not on the basis of a diagnosis or teacher judgement, but because some form of valid and reliable educational assessment has been used to make this determination.

Thirdly, ensure Tier 2 programs are intensive enough for students to learn and master concepts faster than they otherwise would. This is the only way to ensure students keep up with, or catch up to, their peers. It matters all the more because participating in an intervention typically means missing some class time. Whether the results of an intervention outweigh its resource cost and the opportunity cost for students is therefore a key consideration in weighing up the evidence.

Finally, implement effective progress monitoring for students in intervention. It serves two purposes: informing decisions about each student (whether they can exit Tier 2, require more intensive support at Tier 3, or should continue) and informing future instruction at the whole-class level. This is necessary to ensure MTSS remains a dynamic and responsive framework.

Take a preventative approach by focusing on quality and consistency of Tier 1 instruction

Given the current reality, it is wiser to take a preventative approach where the focus of policy becomes the quality of instruction for all students in the whole-class context and lowering rates of ‘instructional casualties’. Researchers in the area of MTSS have drawn an analogy with medicine in which Tier 1 instruction is equivalent to ‘prevention’ in medicine’s primary care context — more intensive and expensive forms of care are still required to keep people healthy, but they function best when prevention is well-supported.[91]

De Bruin et al., in their review of evidence for an Australian context, argue “when a substantially higher proportion of students are underachieving, Tier 1 should be adjusted to build targeted support into the general education classroom, for example by increasing the duration and frequency of explicit teaching of the foundational skills.”[92] As discussed earlier, NAPLAN data suggests this is the case in parts of Australia.

The job of policymakers is to guide schools on how to do this effectively. This means narrowing the variation in Tier 1 instruction across schools by focusing on curriculum (what is taught and how it is organised) and pedagogy (how it is taught). There are several possible actions:

- Review the Australian curriculum and its state-based adaptations (such as the Victorian Curriculum and NSW syllabus) to ensure sufficient depth and detail to enable effective sequencing of teaching

- Promote organisations such as Ochre which operationalise the curriculum (currently English and Maths) into usable scopes and sequences, lesson plans and lesson demonstrations, text recommendations and revision and review

- Refocus pedagogical techniques or instructional models advocated at a system level (mostly by state governments) to emphasise high-quality explicit instruction

- Provide professional development in implementing evidence-based instructional approaches across the curriculum.

A variation of the traditional three-tiered model adds a ‘Tier 1.5’ between Tiers 1 and 2: a class-wide intervention used when screening data indicate a high level of educational risk across a classroom or year level.[93] Class-wide interventions can involve the following features:

- Fluency-based peer tutoring based on materials students have already learned.

- High opportunities for response and error correction within carefully selected pairings.

- Students take turns practising, then complete timed intervals of the task to improve performance.

- Processes are heavily structured and materials gradually increase in difficulty.

Instruction can also be intensified in other ways, regardless of ‘tier’. Greater use of explicit instruction principles (simple explanations, modelling solutions, backwards fading or gradual reduction of scaffolding, and practice opportunities), together with higher dosage through more opportunities to respond, can intensify instruction at the whole-class level as well.[94] Opportunities to respond (OTRs) are part of ordinary classroom practice, so increasing their quality and/or rate is a comparatively resource-efficient way of increasing instructional intensity.[95]

In keeping with the analogy of medicine, these sorts of changes, as they seek to shift educational outcomes at the whole-class level without creating vast new programs requiring additional staff, represent a cost-effective way to meet the educational needs of a higher proportion of students.

Recommendation: Invest in curriculum support and professional development resources to enable more schools to prevent students from falling behind with a more consistent and evidence-based approach to Tier 1 instruction.

Target assistance by improving access to screening and diagnostic tools

Some progress has been made with the widespread, but not yet universal, adoption of the Year 1 Phonics Screening Check and recognition of the need for an equivalent number screening tool. Despite the range and variety of assessment tools available, most are interviews (in the early years) or achievement tests (for all year levels) and are not fit for purpose as screening tools. While the gradual adoption of a consistent approach to Year 1 screening is a good start, effective MTSS requires screening to be a regular part of ongoing practice at every year level and in both reading and mathematics.

Therefore, policymakers should ensure appropriate tools are available, or direct schools to existing resources. If existing resources are used, then cut scores and decision rules that are tailored to the Australian context must also be part of this work. Both the access to relevant tools and the ability to use the data are critical to improving schools’ decision-making capabilities.

For literacy, one tool used in many schools is the University of Oregon’s DIBELS (Dynamic Indicators of Basic Early Literacy Skills) which, despite being American, has been adapted for Australian spelling and vocabulary and can be used from Foundation to Year 8. For older age groups, De Bruin et al. recommended a test of oral reading fluency for students entering Year 7 as a universal screening tool, with flagged students then sitting additional tests as appropriate on phonemic awareness, decoding and vocabulary.[96]
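The two-stage process De Bruin et al. describe, combined with the need for locally tailored cut scores and decision rules, can be sketched as follows. This is an illustrative assumption rather than a published procedure: the two cut scores, the three risk bands and all names are hypothetical, and real cut scores would come from norming work in the Australian context. For simplicity, the sketch lists every flagged student for the same follow-up tests, whereas in practice tests would be selected as appropriate for each student.

```python
from dataclasses import dataclass

# Candidate follow-up diagnostics for flagged students, as described above.
FOLLOW_UP_TESTS = ("phonemic awareness", "decoding", "vocabulary")


@dataclass
class ScreeningResult:
    student: str
    orf_score: float  # oral reading fluency score, e.g. words correct per minute


def risk_band(score, at_risk_cut, some_risk_cut):
    """Classify one score using two cut scores (hypothetical values)."""
    if at_risk_cut >= some_risk_cut:
        raise ValueError("at_risk_cut must be lower than some_risk_cut")
    if score < at_risk_cut:
        return "at risk"
    if score < some_risk_cut:
        return "some risk"
    return "on track"


def screen_cohort(results, at_risk_cut, some_risk_cut):
    """Stage 1: screen every student. Stage 2: list follow-up tests for those flagged."""
    plan = {}
    for r in results:
        band = risk_band(r.orf_score, at_risk_cut, some_risk_cut)
        if band != "on track":
            plan[r.student] = (band, FOLLOW_UP_TESTS)
    return plan


if __name__ == "__main__":
    cohort = [ScreeningResult("Student A", 150),
              ScreeningResult("Student B", 95),
              ScreeningResult("Student C", 60)]
    # Placeholder cut scores, not validated Australian norms.
    for name, (band, tests) in screen_cohort(cohort, 80, 110).items():
        print(name, band, tests)
```

Keeping the cut scores as parameters rather than constants reflects the point above: the screening logic can be shared, but the thresholds must be set for the tool, year level and population in question.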

While there is a range of well-supported options for literacy difficulties, the evidence for mathematics and numeracy is much thinner. The US National Center on Intensive Intervention (NCII) evaluates screening tools offered by third-party providers according to the strength of their evidence and their usability for screening at different points in the year (three times a year being the norm).[97] Accordingly, Australian policymakers should commission studies of the best-supported tools and examine whether it is feasible to adapt them to align with the expectations of the Australian curriculum.